Of course! Python is one of the most popular and powerful languages for web scraping. Its rich ecosystem of libraries makes it accessible for beginners while still being powerful enough for complex projects.

Let's break down web scraping in Python from the basics to a complete, practical example.

What is Web Scraping?

Web scraping is the process of automatically extracting data from websites. Instead of manually copying and pasting information, you write a script that does it for you.

Analogy: Think of a website as a giant library. Web scraping is like sending a robot to the library to find specific books (web pages), open them, read the content (HTML), and copy down the exact information you need (like titles, prices, or text) into a structured file (like a CSV or Excel sheet).

Core Concepts You Need to Understand

-

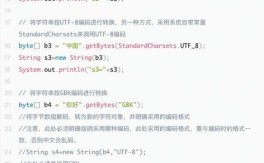

HTML (HyperText Markup Language): This is the skeleton of every webpage. Your web scraping script will essentially be "reading" the HTML to find the data you want. You don't need to be an expert, but you should understand the basic structure of tags, elements, attributes (like

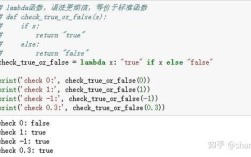

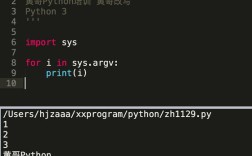

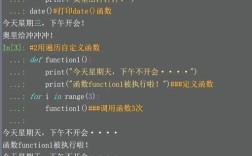

classandid), and hierarchy. (图片来源网络,侵删)

(图片来源网络,侵删) -

HTTP Requests: To get the HTML of a webpage, your script needs to make a request to the website's server, just like your browser does. The most common type of request is a

GETrequest, which asks the server to send back the data for a specific page. -

Parsing: Once you have the HTML as a large block of text, you need a way to navigate it and extract the specific pieces of information. This is called parsing. Special libraries turn the messy HTML into a structured object that you can easily search.

-

Handling Dynamic Content (JavaScript): Some modern websites load their content using JavaScript after the initial page has loaded. For these sites, simple request-based scrapers won't work. You need a tool that can act like a real browser, running JavaScript before it scrapes the content.
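To make the ideas of tags, attributes, and hierarchy concrete, here is a minimal sketch that uses only Python's built-in html.parser module instead of Beautiful Soup, so it runs without installing anything. The HTML snippet, the class names item and note, and the LinkCollector class are all made up for illustration.

```python
from html.parser import HTMLParser

# Hypothetical HTML, written by hand for this illustration.
SAMPLE_HTML = """
<div id="catalog">
  <p class="item"><a href="/a" title="First item">First...</a></p>
  <p class="item"><a href="/b" title="Second item">Second...</a></p>
  <p class="note"><a href="/help" title="Help">Help</a></p>
</div>
"""


class LinkCollector(HTMLParser):
    """Collect the title attribute of every <a> inside a <p class="item">."""

    def __init__(self):
        super().__init__()
        self.inside_item = False  # True while we are inside <p class="item">
        self.titles = []

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)  # attrs arrives as a list of (name, value) pairs
        if tag == "p":
            self.inside_item = attributes.get("class") == "item"
        elif tag == "a" and self.inside_item:
            self.titles.append(attributes.get("title"))

    def handle_endtag(self, tag):
        if tag == "p":
            self.inside_item = False


collector = LinkCollector()
collector.feed(SAMPLE_HTML)
print(collector.titles)  # ['First item', 'Second item']
```

The link inside the note paragraph is skipped because its parent does not have the class we asked for. Libraries such as Beautiful Soup let you express the same "find this tag inside that tag" search in a single call.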

The Essential Python Libraries for Scraping

Here are the key players you'll use:

| Library | What it Does | When to Use It |
|---|---|---|
| requests | Makes HTTP requests (like a browser) to get the HTML of a webpage. | Always. This is your first step for any scraping task. |
| Beautiful Soup | Parses HTML and XML documents, making it easy to search and navigate the "soup" of tags. | Always. It works with the output of requests to extract data. |
| Selenium | Automates a real web browser (like Chrome or Firefox). It can click buttons, fill forms, and wait for JavaScript to load. | When a website's content is loaded dynamically with JavaScript. |
| Scrapy | A full-fledged scraping framework. It's more complex but powerful for building large, scalable spiders that can handle multiple pages and follow links. | For large, professional projects where you need speed, data pipelines, and more control. |

For this guide, we'll focus on the requests + Beautiful Soup combination, as it's the best starting point and is enough for most sites that serve their content as static HTML.

Step-by-Step Scraping Tutorial: Scraping Book Titles

Let's scrape a list of book titles from a website designed for scraping practice: http://books.toscrape.com/.

Step 1: Setup

First, you need to install the necessary libraries. Open your terminal or command prompt and run:

pip install requests
pip install beautifulsoup4
pip install lxml  # A fast and efficient parser that Beautiful Soup can use

Step 2: Inspect the Target Website

Before writing any code, you need to understand the structure of the HTML.

- Go to http://books.toscrape.com/ in your browser.

- Right-click on a book title and select "Inspect" or "Inspect Element". This will open the Developer Tools.

You'll see that each book is contained within an <article> tag with the class product_pod. Inside it is an <h3> tag, which in turn contains an <a> tag. The title attribute of that <a> tag holds the book's title.

Our Goal: Find all <article class="product_pod"> elements, then within each, find the <a> tag and get its title attribute.

Step 3: Write the Python Script

Let's build the script piece by piece.

# 1. Import necessary libraries
import requests
from bs4 import BeautifulSoup

# 2. Define the URL of the page to scrape
URL = "http://books.toscrape.com/"

# 3. Send an HTTP GET request to the URL
try:
    # The timeout keeps the script from hanging forever on a slow server
    response = requests.get(URL, timeout=10)
    # Raise an exception if the request was unsuccessful (e.g., 404 Not Found)
    response.raise_for_status()
except requests.exceptions.RequestException as e:
    print(f"Error during request to {URL}: {e}")
    raise SystemExit(1)

# 4. Parse the HTML content of the page with BeautifulSoup
# We use 'lxml' as the parser because it's fast and efficient.
soup = BeautifulSoup(response.text, 'lxml')

# 5. Find all the book containers
# We are looking for all <article> tags that have the class "product_pod"
books = soup.find_all('article', class_='product_pod')

# 6. Loop through the books and extract the titles
print("Found the following book titles:")
for book in books:
    # Inside each book container, find the <a> tag inside the <h3> tag
    # and get its 'title' attribute
    title = book.h3.a['title']
    print(title)

Step 4: Run the Script

Save the code as a Python file (e.g., scraper.py) and run it from your terminal:

python scraper.py

Expected Output:

Found the following book titles:

A Light in the Attic

Tipping the Velvet

Soumission

Sharp Objects

Sapiens: A Brief History of Humankind

The Requiem Red

The Dirty Little Secrets of Getting Your Dream Job

The Coming Woman: A Novel Based on the Life of the Infamous Feminist, Victoria Woodhull

The Boys in the Boat: Nine Americans and Their Epic Quest for Gold at the 1936 Berlin Olympics

The Black Maria

Starving Hearts (Triangular Trade Trilogy, #1)

Shakespeare's Sonnets


... (20 titles in total, one for each book on the first page)

Handling Dynamic Websites with Selenium

What if the website uses JavaScript to load content? requests only receives the HTML the server sends initially, before any JavaScript has run, so the data you want may simply not be there yet. This is where Selenium comes in.

Concept: Selenium doesn't just get HTML; it controls a web browser. It opens Chrome, navigates to the page, waits for the JavaScript to run, and then gives you the final HTML content.
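A small sketch of why a plain HTTP fetch falls short on such pages: the hand-written page source below stands in for what a request-based scraper might receive from a JavaScript-driven site. The markup, the class name quote, and the QuoteCounter class are assumptions made up for this illustration, and the built-in html.parser module is used so the example runs without a browser or network access.

```python
from html.parser import HTMLParser

# Hypothetical page source as a plain HTTP client would receive it.
# The quotes are not in the markup; only a script that would add them is.
RAW_HTML = """
<html><body>
  <div id="quotes"></div>
  <script>
    var el = document.createElement("div");
    el.className = "quote";
    el.textContent = "Generated in the browser";
    document.getElementById("quotes").appendChild(el);
  </script>
</body></html>
"""


class QuoteCounter(HTMLParser):
    """Count <div class="quote"> elements and <script> tags in the markup."""

    def __init__(self):
        super().__init__()
        self.quote_divs = 0
        self.scripts = 0

    def handle_starttag(self, tag, attrs):
        if tag == "div" and dict(attrs).get("class") == "quote":
            self.quote_divs += 1
        elif tag == "script":
            self.scripts += 1


counter = QuoteCounter()
counter.feed(RAW_HTML)
print(f"quote elements found: {counter.quote_divs}")
print(f"script tags found: {counter.scripts}")
```

The parser sees the script tag but no quote elements, because nothing has executed the script. A browser driven by Selenium would run it first and then expose the resulting HTML.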

Example: Scraping a Dynamic Site

Let's use a practice site whose quotes are inserted into the page by JavaScript, so they are missing from the HTML that a plain request returns.

# 1. Install Selenium and the WebDriver for your browser
# pip install selenium
# Recent Selenium releases can usually locate or download a matching driver themselves.
# If yours does not, download ChromeDriver and make sure it's in your PATH or specify its path.
import time

from bs4 import BeautifulSoup
from selenium import webdriver
from selenium.webdriver.chrome.options import Options
from selenium.webdriver.chrome.service import Service

# Optional: Configure Chrome options
chrome_options = Options()
# chrome_options.add_argument("--headless")  # Run in the background without opening a browser window

# 2. Set up the WebDriver
# Example for providing a path:
# service = Service(executable_path='/path/to/your/chromedriver')
# driver = webdriver.Chrome(service=service, options=chrome_options)
# If chromedriver is in your PATH, you can just do:
driver = webdriver.Chrome(options=chrome_options)

try:
    # 3. Navigate to the dynamic website
    # Let's use a different example site for this: http://quotes.toscrape.com/js/
    # This site requires JavaScript to load quotes.
    driver.get("http://quotes.toscrape.com/js/")

    # 4. Wait for the dynamic content to load
    # We can use a simple sleep, but it's better to use an explicit wait.
    # For simplicity, we'll use time.sleep() here.
    print("Waiting for dynamic content to load...")
    time.sleep(5)  # Wait 5 seconds for JS to execute

    # 5. Get the page source after JavaScript has run
    page_source = driver.page_source
finally:
    # 6. Close the browser, even if something above failed
    driver.quit()

# 7. Parse the source with BeautifulSoup
soup = BeautifulSoup(page_source, 'lxml')

# 8. Extract the data (quotes and authors)
quotes = soup.find_all('div', class_='quote')
for quote in quotes:
    text = quote.find('span', class_='text').text
    author = quote.find('small', class_='author').text
    # The quote text on this site already includes its own quotation marks
    print(f'{text} - {author}')

Best Practices and Ethics

Web scraping is a powerful tool, but it comes with responsibilities.

- Check robots.txt: Most websites have a file at www.example.com/robots.txt. This file tells bots which parts of the site they are and are not allowed to access. Always respect it.
  - Example: http://books.toscrape.com/robots.txt

- Read the Terms of Service (ToS): Some websites explicitly forbid scraping in their ToS. Violating this can get your IP address banned.

- Don't Overload the Server: Be a good internet citizen. Sending too many requests in a short time can slow down or crash a website. Add delays between your requests, for example with time.sleep(1).

- Identify Yourself: Set a User-Agent in your requests headers. This tells the website who you are. It's more polite than using the default requests user agent.

      headers = {'User-Agent': 'My Web Scraper 1.0 (my-email@example.com)'}
      response = requests.get(URL, headers=headers)

- Store Data Cleanly: Don't just print data to the console. Use libraries like pandas to store it in a CSV or Excel file.

      import pandas as pd

      # Assuming 'titles' is a list of your scraped titles
      df = pd.DataFrame(titles, columns=['Book Title'])
      df.to_csv('books.csv', index=False)
      print("Data saved to books.csv")
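The robots.txt and rate-limiting advice above can be sketched with Python's standard library alone. This is an illustration under stated assumptions, not a complete crawler: the robots.txt content, the example.com URLs, the user agent name, and the helper functions allowed_urls and polite_fetch are all hypothetical, and the fetch step is a stand-in function so the example runs without network access.

```python
import time
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content. A real scraper would download it
# from the target site instead of hard-coding it.
ROBOTS_TXT = """
User-agent: *
Disallow: /private/
"""

USER_AGENT = "My Web Scraper 1.0"  # hypothetical scraper name


def allowed_urls(urls, robots_txt, user_agent):
    """Return only the URLs that the given robots.txt rules permit."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if parser.can_fetch(user_agent, url)]


def polite_fetch(urls, fetch, delay_seconds=1.0):
    """Call fetch(url) for each URL, pausing between consecutive calls."""
    results = []
    for index, url in enumerate(urls):
        if index > 0:
            time.sleep(delay_seconds)  # give the server a break
        results.append(fetch(url))
    return results


candidate_urls = [
    "http://example.com/catalogue/page-1.html",
    "http://example.com/private/data.html",
]
urls = allowed_urls(candidate_urls, ROBOTS_TXT, USER_AGENT)

# Stand-in for a real download such as requests.get(url, headers=...).text
pages = polite_fetch(urls, fetch=lambda url: f"<html>{url}</html>", delay_seconds=0.1)

print(urls)
```

In a real script you would replace the stand-in fetch function with an actual HTTP call and pick a delay that suits the site you are scraping.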