An "Oblique Tree" is a type of decision tree that splits the data along a hyperplane that is not necessarily parallel to the feature axes. The idea is not specific to Python, but Python is where you are most likely to use it alongside scikit-learn.

This is in contrast to the standard, more common "Axis-Parallel" or "Orthogonal" Tree (like the one implemented in sklearn.tree.DecisionTreeClassifier), where each split is a simple "if feature X > value Y" check, creating splits that are always parallel to the axes.

Let's break this down.

What is an Oblique Tree?

Imagine you're trying to separate two classes of data points in a 2D space.

- Axis-Parallel Tree: Can only make cuts that are perfectly vertical or horizontal. To separate two classes along a diagonal boundary, it needs many small "stair-step" splits, which is inefficient and produces larger, less interpretable trees.

- Oblique Tree: Can make a single cut at any angle. When the classes are separated by a diagonal line, one split can follow that line directly. This makes it more efficient for data whose class boundary depends on a combination of features.
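To make the contrast concrete, here is a minimal sketch using NumPy only. The synthetic data, the labelling rule x0 + x1 > 0, the helper split_accuracy, and the hand-picked weights [1, 1] are all assumptions chosen for illustration; a real oblique tree has to search for the weights.

```python
import numpy as np

rng = np.random.default_rng(0)

# Synthetic 2D points labelled by a diagonal boundary: class 1 when x0 + x1 > 0.
X = rng.uniform(-1.0, 1.0, size=(500, 2))
y = (X[:, 0] + X[:, 1] > 0).astype(int)


def split_accuracy(projection, y):
    """Best accuracy of a single rule 'projection > t' over all candidate thresholds t."""
    best = 0.0
    for t in np.unique(projection):
        pred = (projection > t).astype(int)
        acc = np.mean(pred == y)
        best = max(best, acc, 1.0 - acc)  # either side of the cut may be class 1
    return best


# Axis-parallel: a split may look at only one feature at a time.
axis_best = max(split_accuracy(X[:, j], y) for j in range(X.shape[1]))

# Oblique: a split thresholds a weighted sum of the features.
w = np.array([1.0, 1.0])
oblique_best = split_accuracy(X @ w, y)

print(f"best single axis-parallel split: {axis_best:.3f}")
print(f"single oblique split (w = [1, 1]): {oblique_best:.3f}")
```

On this data the single oblique split classifies every point correctly, while the best single axis-parallel split misclassifies a sizeable fraction and would need further splits to catch up.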

Why Use Oblique Trees? (Pros and Cons)

Advantages:

- Higher Accuracy: They can model diagonal or multi-feature decision boundaries with fewer splits, which can improve accuracy and generalization when the true boundary between classes is not axis-aligned.

- More Compact Trees: A boundary that an axis-parallel tree has to approximate with many splits can sometimes be captured by an oblique tree in one or two. This results in smaller, less complex models.

- Better for High-Dimensional Data: When the classes depend on combinations of features rather than on any single feature, one good oblique split can be more effective than the best axis-aligned split.
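As a rough illustration of the compactness claim, the sketch below grows an unrestricted scikit-learn DecisionTreeClassifier on synthetic data whose true boundary is the diagonal x0 + x1 = 0 and reports how many leaves it needs. The dataset and its size are assumptions made for the example, and the exact leaf count will vary with the sample.

```python
import numpy as np
from sklearn.tree import DecisionTreeClassifier

rng = np.random.default_rng(0)

# Points labelled by the diagonal rule x0 + x1 > 0.
# A single oblique split with weights [1, 1] and threshold 0 captures this rule exactly.
X = rng.uniform(-1.0, 1.0, size=(500, 2))
y = (X[:, 0] + X[:, 1] > 0).astype(int)

# Grow an unrestricted axis-parallel tree until it fits the training data.
axis_tree = DecisionTreeClassifier(random_state=0).fit(X, y)

n_leaves = axis_tree.get_n_leaves()
depth = axis_tree.get_depth()
train_accuracy = axis_tree.score(X, y)

print(f"axis-parallel tree: {n_leaves} leaves, depth {depth}, "
      f"training accuracy {train_accuracy:.3f}")
print("oblique tree using the split x0 + x1 > 0: 2 leaves, depth 1")
```

The axis-parallel tree reaches the same training fit only by building a staircase of many leaves along the diagonal.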

Disadvantages:

- Computational Cost: Finding the best oblique split is much harder. Instead of searching for the best single feature and threshold (e.g.,

feature_3 > 0.5), the algorithm must search for the best combination of features and their corresponding weights (e.g.,2*feature_1 - 1.5*feature_2 + 0.7*feature_3 > 0.1). This is a much larger search space. - Interpretability: This is the biggest trade-off. An axis-parallel split is easy to understand: "If a customer's age is > 30, they are likely to buy." An oblique split is a linear combination: "If

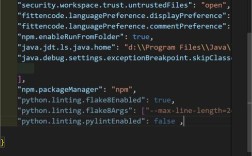

5*age - 0.2*income + 0.3*spending_score > 15, they are likely to buy." This is much harder for a human to interpret. - Implementation: They are not the default in most standard libraries (like scikit-learn) because of the computational complexity.
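The two kinds of rule can be written with one function, which makes the difference in search space easier to see. In the sketch below, oblique_split is a hypothetical helper, the sample values are made up, and feature_1 to feature_3 are assumed to map to columns 0 to 2. An axis-parallel rule is the special case where all weight sits on one feature. For n samples and d features, an axis-parallel search has at most d × (n − 1) distinct candidate splits to score, whereas the oblique weights range over a continuous d-dimensional space.

```python
import numpy as np


def oblique_split(X, weights, threshold):
    """Boolean mask: True where the weighted sum of features exceeds the threshold."""
    return X @ weights > threshold


# Three samples with three features each (made-up values).
X = np.array([
    [0.2, 1.0, 0.9],
    [1.5, 0.3, 0.1],
    [0.4, 0.4, 0.6],
])

# Axis-parallel rule "feature_3 > 0.5": all weight on a single feature (column 2).
axis_mask = oblique_split(X, np.array([0.0, 0.0, 1.0]), 0.5)

# Oblique rule from the text: 2*feature_1 - 1.5*feature_2 + 0.7*feature_3 > 0.1.
oblique_mask = oblique_split(X, np.array([2.0, -1.5, 0.7]), 0.1)

print(axis_mask)     # which samples satisfy the axis-parallel rule
print(oblique_mask)  # which samples satisfy the oblique rule
```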

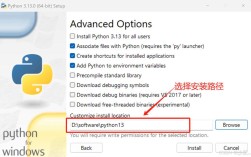

How to Implement Oblique Trees in Python

Since scikit-learn's DecisionTreeClassifier is axis-parallel, you need another library or your own implementation. The options below are a starting point.

Option 1: sklearn-oblique (Easiest Installation)

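This library provides a scikit-learn compatible implementation. It's one of the most straightforward ways to get started. Check the installed version's documentation for the exact import path, class name, and constructor parameters (such as alpha) before relying on the example below.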

Installation:

pip install sklearn-oblique

Example Code:

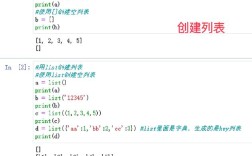

import numpy as np
import matplotlib.pyplot as plt
from sklearn.datasets import make_classification
from sklearn_oblique import ObliqueTreeClassifier
from sklearn.model_selection import train_test_split
from sklearn.inspection import DecisionBoundaryDisplay

# 1. Generate a 2D two-class dataset (the class boundary is generally not axis-aligned)
# n_features=2 and n_informative=2 keep the problem 2D so the boundary can be plotted
X, y = make_classification(
    n_samples=200,
    n_features=2,
    n_informative=2,
    n_redundant=0,
    n_clusters_per_class=1,
    class_sep=1.5,
    random_state=42,
)

# 2. Split the data
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.3, random_state=42)

# 3. Create and train the Oblique Tree Classifier
# The 'alpha' parameter controls the sparsity of the splits (number of non-zero weights)
# A higher alpha means sparser (simpler) splits.
oblique_tree = ObliqueTreeClassifier(random_state=42, alpha=0.9)
oblique_tree.fit(X_train, y_train)

# 4. Evaluate the model on the held-out test set
accuracy = oblique_tree.score(X_test, y_test)
print(f"Oblique Tree Accuracy: {accuracy:.4f}")

# 5. Visualize the decision boundary
fig, ax = plt.subplots(figsize=(10, 7))
disp = DecisionBoundaryDisplay.from_estimator(
    oblique_tree,
    X,
    ax=ax,
    cmap=plt.cm.coolwarm,
    response_method="predict",
    xlabel="Feature 1",
    ylabel="Feature 2",
    alpha=0.8,
)

# Plot the training points
scatter = ax.scatter(X_train[:, 0], X_train[:, 1], c=y_train, edgecolors="k", cmap=plt.cm.coolwarm)
ax.set_title("Decision Boundary of an Oblique Tree")
ax.legend(handles=scatter.legend_elements()[0], labels=['Class 0', 'Class 1'])
plt.show()

Option 2: Custom Oblique Split Search (Advanced)

You can also write the split search yourself. This gives you full control but requires more work. Note that scikit-learn's DecisionTreeClassifier does not accept a user-defined splitter from Python: its splitter parameter is documented to take only "best" or "random", and the internal splitter classes are written in Cython. In practice this means writing your own tree builder around the split search. The core idea is to search for a linear combination of features instead of a single feature.

This is a simplified conceptual example. A full implementation would be much more complex.

# Conceptual sketch of one oblique split search. This is NOT scikit-learn's Splitter API.
import numpy as np

def gini(y):
    """Gini impurity of a label array."""
    _, counts = np.unique(y, return_counts=True)
    p = counts / counts.sum()
    return 1.0 - np.sum(p ** 2)

def random_oblique_split(X, y, n_candidates=20, rng=None):
    """Try random weight vectors and return the best split found."""
    rng = np.random.default_rng(rng)
    best = {"weights": None, "threshold": None, "impurity": np.inf}
    for _ in range(n_candidates):
        # 1. Generate random weights for a linear combination of features.
        # This is a key step: we're not picking one feature, but a combo.
        weights = rng.standard_normal(X.shape[1])
        # 2. Calculate the linear combination for each sample.
        # This creates a new 1D feature.
        X_proj = X @ weights
        # 3. Find the best threshold for this new 1D feature.
        # This is the same as a standard axis-aligned split, but on the projection.
        values = np.unique(X_proj)
        for threshold in (values[:-1] + values[1:]) / 2:
            left = X_proj <= threshold
            impurity = (left.sum() * gini(y[left]) + (~left).sum() * gini(y[~left])) / len(y)
            if impurity < best["impurity"]:
                best = {"weights": weights, "threshold": threshold, "impurity": impurity}
    # 4. Return the split information.
    # In this case, the "feature" is the combination defined by 'weights'.
    return best
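A split search like the one sketched above only becomes a classifier once it is called recursively. The following is a minimal sketch of that step, assuming Gini impurity, random candidate directions, and integer class labels starting at 0. TinyObliqueTree and best_random_oblique_split are hypothetical names for this example, not part of scikit-learn or any other library, and the random search is far cruder than what dedicated oblique-tree algorithms do.

```python
import numpy as np


def gini(y):
    """Gini impurity of an integer label array."""
    counts = np.bincount(y)
    p = counts[counts > 0] / len(y)
    return 1.0 - np.sum(p ** 2)


def best_random_oblique_split(X, y, n_candidates, rng):
    """Search random directions; return (weights, threshold, impurity) or None."""
    best = None
    for _ in range(n_candidates):
        w = rng.standard_normal(X.shape[1])
        proj = X @ w
        values = np.unique(proj)
        for t in (values[:-1] + values[1:]) / 2:  # midpoints between sorted values
            left = proj <= t
            imp = (left.sum() * gini(y[left]) + (~left).sum() * gini(y[~left])) / len(y)
            if best is None or imp < best[2]:
                best = (w, t, imp)
    return best


class TinyObliqueTree:
    """Hypothetical teaching class: a recursive tree with random oblique splits."""

    def __init__(self, max_depth=3, n_candidates=30, random_state=0):
        self.max_depth = max_depth
        self.n_candidates = n_candidates
        self.random_state = random_state

    def fit(self, X, y):
        self.rng_ = np.random.default_rng(self.random_state)
        self.n_classes_ = int(y.max()) + 1
        self.root_ = self._grow(X, y, depth=0)
        return self

    def _grow(self, X, y, depth):
        majority = int(np.argmax(np.bincount(y, minlength=self.n_classes_)))
        # Stop at the depth limit or when the node is already pure.
        if depth >= self.max_depth or gini(y) == 0.0:
            return {"leaf": majority}
        split = best_random_oblique_split(X, y, self.n_candidates, self.rng_)
        if split is None:  # all projections identical: nothing to split on
            return {"leaf": majority}
        w, t, _ = split
        left = X @ w <= t
        return {
            "w": w,
            "t": t,
            "left": self._grow(X[left], y[left], depth + 1),
            "right": self._grow(X[~left], y[~left], depth + 1),
        }

    def _predict_one(self, x, node):
        # Walk down the tree, applying each node's oblique rule.
        while "leaf" not in node:
            node = node["left"] if x @ node["w"] <= node["t"] else node["right"]
        return node["leaf"]

    def predict(self, X):
        return np.array([self._predict_one(x, self.root_) for x in X])


# Usage on synthetic data with a diagonal class boundary (an assumption for the demo).
rng = np.random.default_rng(42)
X = rng.uniform(-1.0, 1.0, size=(200, 2))
y = (X[:, 0] + X[:, 1] > 0).astype(int)

tree = TinyObliqueTree(max_depth=3, n_candidates=30, random_state=0).fit(X, y)
train_accuracy = np.mean(tree.predict(X) == y)
print(f"training accuracy: {train_accuracy:.3f}")
```

Storing each node as a plain dictionary keeps the sketch short; a practical implementation would also handle sample weights, minimum leaf sizes, and a smarter search over the weights.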

Option 3: pyobtree

Another library specifically for oblique trees. It's worth exploring if sklearn-oblique doesn't meet your needs.

Installation:

pip install pyobtree

Practical Considerations and Comparison

Let's compare the decision boundaries of the two tree types on a simple dataset.

import numpy as np
import matplotlib.pyplot as plt
from sklearn.datasets import make_moons
from sklearn.tree import DecisionTreeClassifier
from sklearn_oblique import ObliqueTreeClassifier
from sklearn.inspection import DecisionBoundaryDisplay

# Generate a non-linear dataset (moons)
X, y = make_moons(n_samples=200, noise=0.25, random_state=42)

# --- Train Axis-Parallel Tree ---
axis_tree = DecisionTreeClassifier(random_state=42, max_depth=5)
axis_tree.fit(X, y)

# --- Train Oblique Tree ---
oblique_tree = ObliqueTreeClassifier(random_state=42, alpha=0.9)
oblique_tree.fit(X, y)

# --- Visualize and Compare ---
fig, axes = plt.subplots(1, 2, figsize=(16, 7))

# Axis-Parallel Tree
ax = axes[0]
disp1 = DecisionBoundaryDisplay.from_estimator(
    axis_tree, X, ax=ax, cmap=plt.cm.coolwarm, response_method="predict"
)
ax.scatter(X[:, 0], X[:, 1], c=y, edgecolors="k", cmap=plt.cm.coolwarm)
ax.set_title("Axis-Parallel Tree (DecisionTreeClassifier)")
ax.set_xlabel("Feature 1")
ax.set_ylabel("Feature 2")

# Oblique Tree
ax = axes[1]
disp2 = DecisionBoundaryDisplay.from_estimator(
    oblique_tree, X, ax=ax, cmap=plt.cm.coolwarm, response_method="predict"
)
ax.scatter(X[:, 0], X[:, 1], c=y, edgecolors="k", cmap=plt.cm.coolwarm)
ax.set_title("Oblique Tree (ObliqueTreeClassifier)")
ax.set_xlabel("Feature 1")
ax.set_ylabel("Feature 2")

plt.tight_layout()
plt.show()

Expected Output: You will likely see that the Oblique Tree's boundary is made of straight segments at arbitrary angles that follow the "moon" shape more closely, while the Axis-Parallel Tree creates a more jagged, blocky boundary to approximate the curve. Keep in mind that this comparison is visual only: both trees are fit on the full dataset, and only the axis-parallel tree is depth-limited (max_depth=5), so the plot shows how the boundaries differ in shape rather than which model is more accurate.
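For a numeric comparison that runs with scikit-learn alone, one option is to emulate oblique splits by appending random linear combinations of the features: an axis-parallel split on an appended column is an oblique split in the original space. This is a stand-in chosen for illustration, not the algorithm used by any oblique-tree library, and the number of random directions (8) is an arbitrary assumption. Results depend on the random seed, so neither variant is guaranteed to score higher.

```python
import numpy as np
from sklearn.datasets import make_moons
from sklearn.model_selection import cross_val_score
from sklearn.tree import DecisionTreeClassifier

X, y = make_moons(n_samples=200, noise=0.25, random_state=42)

# Append random linear combinations of the two features.
# The projection matrix R is drawn without looking at the labels or the data.
rng = np.random.default_rng(42)
R = rng.standard_normal((X.shape[1], 8))  # 8 random directions (arbitrary choice)
X_aug = np.hstack([X, X @ R])

# Same depth limit for both variants so the comparison is like for like.
tree = DecisionTreeClassifier(max_depth=5, random_state=42)
axis_scores = cross_val_score(tree, X, y, cv=5)
oblique_like_scores = cross_val_score(tree, X_aug, y, cv=5)

print(f"axis-parallel features only: {axis_scores.mean():.3f} +/- {axis_scores.std():.3f}")
print(f"with random projections:     {oblique_like_scores.mean():.3f} +/- {oblique_like_scores.std():.3f}")
```

Using cross-validation with the same depth limit on both sides gives a fairer picture than comparing boundaries fit on the full dataset.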

Summary: When to Use What?

| Scenario | Recommended Tree Type | Why? |
|---|---|---|
| Need for interpretability | Axis-Parallel (Scikit-learn) | Simple "if-then" rules are easy to explain to stakeholders. |
| High-dimensional data with complex boundaries | Oblique Tree | Can find efficient, high-accuracy splits with fewer nodes. |
| Speed is critical | Axis-Parallel (Scikit-learn) | Training is much faster due to a simpler search space. |
| Standard tabular data (e.g., CSVs) | Start with Axis-Parallel | It's a strong baseline and often good enough. |
| Computer Vision / NLP | Neural Networks / SVMs | Trees (both types) are generally not the best choice for these domains. |

For most standard machine learning tasks, start with sklearn.tree.DecisionTreeClassifier. If you find that its performance is lacking and you suspect the decision boundary is complex, an Oblique Tree is an excellent next step to try, provided you are comfortable with the trade-off in interpretability and increased training time.