Of course! Working with BLOBs (Binary Large Objects) in Python's sqlite3 module is a common task for storing things like images, audio files, or serialized data directly in your database.

Here’s a comprehensive guide covering everything from the basics to best practices.

What is a BLOB?

A BLOB is a binary data type used to store large objects. In SQLite, it can store data up to 1 GB in size. This makes it perfect for:

- Images (JPEG, PNG, etc.)

- Audio/Video files

- Document files (PDF, DOCX)

- Serialized Python objects (using

pickle) - Any other binary data.

The Core Concept: bytes

In Python, you interact with BLOBs using the bytes type. The sqlite3 library handles the conversion between Python's bytes and SQLite's BLOB data type automatically.

Step-by-Step Example: Storing and Retrieving an Image

Let's walk through a complete example of storing a small image (e.g., logo.png) in a database and then reading it back to save it as a new file.

Setup

First, you need a sample image file. Let's assume you have a file named logo.png in the same directory as your Python script.

The Python Code

This script will:

- Connect to an SQLite database (it will be created if it doesn't exist).

- Create a table to store images.

- Read the

logo.pngfile into abytesobject. - Insert the image data into the database.

- Retrieve the image data from the database.

- Write the retrieved data to a new file,

retrieved_logo.png.

import sqlite3

import os

# --- Configuration ---

DB_FILE = 'image_database.db'

IMAGE_FILE = 'logo.png' # Make sure this file exists

RETRIEVED_IMAGE_FILE = 'retrieved_logo.png'

# --- 1. Connect to the database and create a table ---

# The 'with' statement ensures the connection is closed automatically.

with sqlite3.connect(DB_FILE) as conn:

cursor = conn.cursor()

# Create a table to store image data

# 'id' is a unique key for each image

# 'name' is a descriptive name

# 'data' is the BLOB column to store the binary data

cursor.execute('''

CREATE TABLE IF NOT EXISTS images (

id INTEGER PRIMARY KEY AUTOINCREMENT,

name TEXT NOT NULL,

data BLOB NOT NULL

)

''')

print("Table 'images' created or already exists.")

# --- 2. Insert an image into the database ---

print(f"\nReading '{IMAGE_FILE}' to insert into the database...")

try:

# Open the image file in binary read mode ('rb')

with open(IMAGE_FILE, 'rb') as file:

image_blob = file.read()

except FileNotFoundError:

print(f"Error: The file '{IMAGE_FILE}' was not found.")

exit()

with sqlite3.connect(DB_FILE) as conn:

cursor = conn.cursor()

# Use a parameterized query (?) to prevent SQL injection

# The 'image_blob' variable is passed as a tuple

sql_insert_query = "INSERT INTO images (name, data) VALUES (?, ?)"

data_tuple = (IMAGE_FILE, image_blob)

cursor.execute(sql_insert_query, data_tuple)

conn.commit() # Commit the transaction to save the changes

print(f"Image '{IMAGE_FILE}' inserted successfully.")

# --- 3. Retrieve an image from the database ---

print(f"\nRetrieving an image from the database...")

with sqlite3.connect(DB_FILE) as conn:

cursor = conn.cursor()

# Fetch the most recently inserted image

# Using cursor.lastrowid is a convenient way to get the ID of the last inserted row

cursor.execute("SELECT data FROM images WHERE id = ?", (cursor.lastrowid,))

# fetchone() returns a tuple, so we access the first element (the BLOB data)

record = cursor.fetchone()

if record:

retrieved_image_blob = record[0]

print(f"Image data retrieved for ID {cursor.lastrowid}.")

# Write the retrieved BLOB data to a new file

with open(RETRIEVED_IMAGE_FILE, 'wb') as file:

file.write(retrieved_image_blob)

print(f"Image saved as '{RETRIEVED_IMAGE_FILE}'.")

else:

print("No image found with that ID.")

print("\nScript finished.")

Running the Code

- Save the code above as

manage_blobs.py. - Make sure

logo.pngis in the same folder. - Run the script from your terminal:

python manage_blobs.py.

You will see output messages, and a new file named retrieved_logo.png will be created, identical to your original logo.png. You will also have a new file image_database.db in your directory.

Key Concepts and Best Practices

Use Parameterized Queries (Always!)

When inserting or querying BLOB data (or any data, for that matter), always use parameterized queries with the placeholder.

Good (Safe):

cursor.execute("INSERT INTO images (name, data) VALUES (?, ?)", (image_name, image_blob))

Bad (Vulnerable to SQL Injection):

# NEVER DO THIS!

sql = f"INSERT INTO images (name, data) VALUES ('{image_name}', '{image_blob}')"

cursor.execute(sql)

Using f-strings or formatting to insert variables directly into your SQL query is a major security risk.

File Handling Modes

- Reading a file to get BLOB data:

open('filename.png', 'rb')(read binary). - Writing BLOB data to a file:

open('newfile.png', 'wb')(write binary).

Performance and Memory Considerations

The read() method in the example loads the entire file into memory. This is fine for small files but can cause problems (MemoryError) for very large files (e.g., videos).

Solution for Large Files: Stream the Data

For large files, read and write the data in chunks. This avoids loading the whole file into memory at once.

Here's how you could modify the insertion part to stream the file:

def insert_image_streaming(conn, image_path, image_name):

"""Inserts an image into the database by streaming it in chunks."""

with open(image_path, 'rb') as file:

# The file object itself is an iterator over its content

cursor = conn.cursor()

cursor.execute("INSERT INTO images (name, data) VALUES (?, ?)", (image_name, file.read()))

conn.commit()

# You would call it like this:

# with sqlite3.connect(DB_FILE) as conn:

# insert_image_streaming(conn, 'large_video.mp4', 'my_video.mp4')

Note: While streaming the file from disk is good, cursor.execute() will still read the entire file.read() into memory to send to the database. For truly massive data, you might need more advanced database techniques, but for most use cases (under a few hundred MB), this is perfectly acceptable.

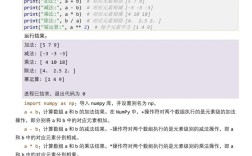

Storing Other Binary Data (e.g., with pickle)

You can store any serializable Python object as a BLOB using the pickle module.

Example: Storing a list

import pickle

data_to_store = [1, 2, 'hello', {'key': 'value'}]

# Serialize the data to bytes

pickled_data = pickle.dumps(data_to_store)

# Store the pickled bytes in the database

with sqlite3.connect(DB_FILE) as conn:

cursor = conn.cursor()

cursor.execute("INSERT INTO serialized_objects (name, data) VALUES (?, ?)", ('my_list', pickled_data))

conn.commit()

# Retrieve and deserialize the data

with sqlite3.connect(DB_FILE) as conn:

cursor = conn.cursor()

cursor.execute("SELECT data FROM serialized_objects WHERE name = 'my_list'")

record = cursor.fetchone()

if record:

retrieved_data = pickle.loads(record[0])

print(f"Retrieved data: {retrieved_data}")

print(f"Type: {type(retrieved_data)}")

Warning: Be careful with pickle. Never unpickle data from an untrusted source, as it can execute arbitrary code.

Summary

| Task | Code Snippet | Key Points |

|---|---|---|

| Connect & Create Table | with sqlite3.connect('db.db') as conn: ... cursor.execute('CREATE TABLE ... (data BLOB)') |

Use with for automatic connection closing. Define a BLOB column. |

| Read File into BLOB | with open('file.png', 'rb') as f: blob_data = f.read() |

Use 'rb' (read binary) mode. |

| Insert BLOB into DB | cursor.execute("INSERT INTO table (data) VALUES (?)", (blob_data,)) |

Always use placeholders for safety. Pass data as a tuple. |

| Retrieve BLOB from DB | cursor.execute("SELECT data FROM table WHERE id=?", (id,))record = cursor.fetchone()blob_data = record[0] |

fetchone() returns a tuple. Access the BLOB data at index 0. |

| Write BLOB to File | with open('newfile.png', 'wb') as f: f.write(retrieved_blob) |

Use 'wb' (write binary) mode. |

| Store Serialized Objects | pickle.dumps(my_object) to store, pickle.loads(blob_data) to retrieve. |

A powerful way to store complex Python objects directly in the database. |