Of course! Using pdfminer.six to convert PDFs to HTML is a common task, but it requires understanding a few key concepts because the library's primary goal is text extraction, not perfect visual replication.

Here’s a comprehensive guide covering:

- Why pdfminer is tricky for HTML: The fundamental challenge.

- Basic HTML Extraction: The simplest code to get some HTML.

- Advanced HTML Extraction: How to get structured HTML with tags (<p>, <div>, etc.).

- Handling Layouts (The "Right" Way): Using pdf2htmlEX for visually faithful output.

- Putting It All Together: A practical, complete script.

The Fundamental Challenge: Text vs. Layout

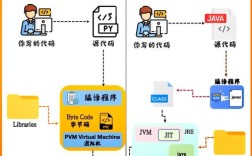

pdfminer is a PDF parser. It reads the PDF's internal structure, which is a set of instructions for drawing text, lines, and images on a page. It does not see the PDF as a document with "paragraphs," "headers," or "columns."

When you ask for HTML, pdfminer essentially does this:

- Extract all text and its exact coordinates (x0, y0, x1, y1).

- Group text that appears on the same line.

- Sort these lines based on their vertical position (y0), from top to bottom.

- Wrap each line in a <div> tag.
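The four steps above can be sketched in plain Python. This is a simplified illustration, not pdfminer's actual implementation: the `(text, bbox)` tuples are hypothetical stand-ins for what layout objects expose through `get_text()` and `.bbox`, and `flat_html` is a name invented for this sketch.

```python
from collections import defaultdict
from html import escape


def flat_html(fragments):
    """Turn (text, (x0, y0, x1, y1)) fragments into one <div> per line."""
    # Step 2: group fragments that share a baseline (same rounded y0).
    lines = defaultdict(list)
    for text, (x0, y0, x1, y1) in fragments:
        lines[round(y0)].append((x0, text))

    divs = []
    # Step 3: PDF y grows upward, so a larger y0 is higher on the page.
    for y0 in sorted(lines, reverse=True):
        # Within a line, read left to right.
        words = [text for _, text in sorted(lines[y0])]
        # Step 4: wrap each line in a <div>.
        divs.append(f"<div>{escape(' '.join(words))}</div>")
    return "\n".join(divs)


# Hypothetical fragments, deliberately out of order.
fragments = [
    ("world", (60, 700, 90, 712)),
    ("Second line", (10, 680, 80, 692)),
    ("Hello", (10, 700, 50, 712)),
]
print(flat_html(fragments))
```

Nothing in this output records columns, fonts, or paragraphs, which is why the result is described as flat.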

The result is a "flat" HTML structure. It can lose:

- Columns: On multi-column pages, text from the right column can end up intermingled with text from the left column.

- Fonts & Styles: get_text() returns only the characters. Font name and size are available on the individual LTChar objects, but you have to map them to bold, italic, or heading styles yourself.

- Visual Flow: The reading order might be jumbled in complex layouts.

Moral of the story: pdfminer is excellent for getting the raw text content in an HTML-like wrapper. For visually faithful HTML, a different tool is better.
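To see how a purely vertical sort mixes up columns, here is a small sketch on made-up data. The `(text, x0, y1)` tuples, both function names, and the fixed `split_x` boundary are assumptions for illustration; real pages rarely have such a clean split.

```python
def reading_order_naive(lines):
    """Sort (text, x0, y1) lines top to bottom only, ignoring columns."""
    return [text for text, x0, y1 in sorted(lines, key=lambda line: -line[2])]


def reading_order_two_columns(lines, split_x):
    """Read everything left of split_x first, then everything right of it."""
    left = [line for line in lines if line[1] < split_x]
    right = [line for line in lines if line[1] >= split_x]
    return reading_order_naive(left) + reading_order_naive(right)


# Two columns, two rows: L* is the left column, R* the right one.
lines = [
    ("L1", 50, 700), ("R1", 320, 700),
    ("L2", 50, 680), ("R2", 320, 680),
]
print(reading_order_naive(lines))             # columns interleaved
print(reading_order_two_columns(lines, 300))  # left column, then right
```

The second function only works when you already know where the gutter is, which is the part that is hard to automate.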

Basic HTML Extraction (The Simple Way)

This method gives you the raw text, line by line, wrapped in <div> tags. It's fast but not very useful for most documents.

First, make sure you have pdfminer.six installed:

pip install pdfminer.six

Here is the most basic code:

from pdfminer.high_level import extract_text_to_fp
from pdfminer.layout import LAParams

# --- The Simplest Method (extract_text_to_fp with output_type="html") ---
# This is the easiest way, but produces very basic HTML.
def simple_html_to_file(pdf_path, html_path):
    """Extracts text from a PDF and saves it as a simple HTML file."""
    # The PDF is read as bytes; the HTML is written as text
    with open(pdf_path, 'rb') as pdf_file, \
            open(html_path, 'w', encoding='utf-8') as html_file:
        # output_type='html' selects pdfminer's HTML converter, which writes
        # a complete document including the <html> and <body> tags.
        # codec=None makes it write str instead of bytes, matching the
        # text-mode output file.
        extract_text_to_fp(
            pdf_file,
            html_file,
            output_type='html',
            laparams=LAParams(),
            codec=None,
        )

# --- Usage ---
input_pdf = "example.pdf"
output_html = "simple_output.html"
simple_html_to_file(input_pdf, output_html)
print(f"Simple HTML saved to {output_html}")

What simple_output.html looks like (simplified):

<html><body>
<div style="position: absolute; top: 100px; left: 50px;">This is the first line of text.</div>
<div style="position: absolute; top: 120px; left: 50px;">This is the second line.</div>
...
</body></html>

Notice the position: absolute style. This is how pdfminer tries to place text. An absolutely positioned element is placed relative to its nearest positioned ancestor, so without a parent container the coordinates are relative to the whole browser page and the output is hard to embed or restyle.
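One way to make absolute positions easier to work with is to wrap them in a page-sized parent with position: relative. The sketch below is an assumption-laden illustration rather than what pdfminer emits: `positioned_page` is a hypothetical helper, the input tuples are made up, and one PDF point is treated as one CSS pixel.

```python
from html import escape


def positioned_page(lines, page_width, page_height):
    """Render (text, (x0, y0, x1, y1)) lines inside one positioned page <div>."""
    parts = [
        f'<div style="position: relative; width: {page_width}px; '
        f'height: {page_height}px;">'
    ]
    for text, (x0, y0, x1, y1) in lines:
        # PDF measures y from the bottom edge; CSS "top" measures from the top.
        top = page_height - y1
        parts.append(
            f'  <div style="position: absolute; left: {x0}px; top: {top}px;">'
            f"{escape(text)}</div>"
        )
    parts.append("</div>")
    return "\n".join(parts)


# A US Letter page is 612 x 792 points.
page = positioned_page(
    [("Hello", (50, 680, 120, 692))], page_width=612, page_height=792
)
print(page)
```

Because every child is positioned relative to the page div, the whole page can be moved or scaled as one unit.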

Advanced HTML Extraction (Getting Structure)

To get better structure, we need to analyze the layout ourselves. We can group lines that are close together vertically to form "paragraphs." This is a significant improvement.

The key is to iterate through the text lines, sort them, and then group them based on the vertical distance between them.

import html
import re

from pdfminer.high_level import extract_pages
from pdfminer.layout import LTTextContainer, LTTextLine

def advanced_html_to_file(pdf_path, html_path):
    """Extracts text and tries to form paragraphs for better HTML structure."""
    all_lines = []

    # 1. Extract all text lines and their positions
    for page_number, page_layout in enumerate(extract_pages(pdf_path)):
        for element in page_layout:
            if not isinstance(element, LTTextContainer):
                continue
            # Page-level containers are text boxes; their children are the lines
            for text_line in element:
                if not isinstance(text_line, LTTextLine):
                    continue
                # Get the bounding box
                x0, y0, x1, y1 = text_line.bbox
                all_lines.append({
                    'text': text_line.get_text(),  # Raw text without tags
                    'page': page_number,
                    'y0': y0,  # Bottom y-coordinate
                    'y1': y1,  # Top y-coordinate
                    'x0': x0,  # Left x-coordinate
                })

    # 2. Sort lines by page, then by vertical position (top to bottom).
    # Coordinates restart on every page, so the page number must come first.
    all_lines.sort(key=lambda line: (line['page'], -line['y1']))

    # 3. Group lines into "paragraphs"
    paragraphs = []
    current_paragraph = []
    # Define a threshold for what constitutes a new paragraph (in points)
    # This value needs to be tuned for your specific PDFs
    paragraph_gap_threshold = 5.0

    for line in all_lines:
        if current_paragraph:
            # Gap between the bottom of the previous line and the top of this one
            last_line_in_para = current_paragraph[-1]
            gap = last_line_in_para['y0'] - line['y1']
            new_page = line['page'] != last_line_in_para['page']
            if new_page or gap > paragraph_gap_threshold:
                # This line is far enough away to be a new paragraph
                paragraphs.append("".join(p['text'] for p in current_paragraph))
                current_paragraph = []
        current_paragraph.append(line)

    # Add the last paragraph
    if current_paragraph:
        paragraphs.append("".join(p['text'] for p in current_paragraph))

    # 4. Build the final HTML string
    html_string = "<html><head><meta charset='UTF-8'></head><body>\n"
    for para in paragraphs:
        # Basic cleaning of whitespace
        clean_para = re.sub(r'\s+', ' ', para).strip()
        if clean_para:  # Avoid adding empty paragraphs
            # Escape &, < and > so extracted text cannot break the markup
            html_string += f"<p>{html.escape(clean_para)}</p>\n"
    html_string += "</body></html>"

    # 5. Save to file
    with open(html_path, 'w', encoding='utf-8') as f:
        f.write(html_string)

# --- Usage ---
input_pdf = "example.pdf"
output_html = "advanced_output.html"
advanced_html_to_file(input_pdf, output_html)
print(f"Advanced HTML with paragraphs saved to {output_html}")

This advanced script produces much cleaner HTML with <p> tags, but it still struggles with columns and complex layouts.
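Since the paragraph gap threshold has to be tuned per document, one possible starting point is to derive it from the line heights instead of hard-coding it. This is only a heuristic sketch: `estimate_gap_threshold` is a hypothetical helper, the `(y0, y1)` pairs stand in for line bounding boxes, and the default `factor=0.5` is an arbitrary guess.

```python
from statistics import median


def estimate_gap_threshold(line_boxes, factor=0.5):
    """Guess a paragraph gap (in points) from (y0, y1) line boxes."""
    # Line height is top minus bottom; the median resists a few large headings.
    heights = [y1 - y0 for y0, y1 in line_boxes]
    if not heights:
        return 0.0
    return factor * median(heights)


# Four hypothetical body-text lines, each 12 points tall.
boxes = [(700, 712), (686, 698), (672, 684), (648, 660)]
print(estimate_gap_threshold(boxes))
```

A gap larger than about half a line height is then treated as a paragraph break; documents with loose line spacing would likely need a larger factor.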

The "Right" Way for Visually Faithful HTML: pdf2htmlEX

If your goal is to create an HTML file that looks almost identical to the original PDF, you should not use pdfminer for this job. The right tool for the job is pdf2htmlEX.

It's a separate command-line tool that does a much better job of preserving the visual layout, including columns, images, and even some fonts.

Install pdf2htmlEX:

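- On macOS (using Homebrew): brew install pdf2htmlex

- On Ubuntu/Debian: sudo apt-get install pdf2htmlex

- On Windows: You'll need to download the binary from the official site.

These packages may not be available in current repositories. If the install command fails, check the project's release page for prebuilt packages.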

Use it from Python:

You can easily call it from a Python script using the subprocess module.

import subprocess
import os

def convert_pdf_to_html_visual(pdf_path, output_dir="."):
    """
    Uses pdf2htmlEX to convert a PDF to a visually faithful HTML file.
    """
    try:
        # Check if pdf2htmlEX is installed
        subprocess.run(["pdf2htmlEX", "--version"], check=True, capture_output=True)
    except (subprocess.CalledProcessError, FileNotFoundError):
        print("Error: pdf2htmlEX is not installed or not in your PATH.")
        print("Please install it from: https://github.com/pdf2htmlEX/pdf2htmlEX")
        return

    # Ensure the output directory exists
    os.makedirs(output_dir, exist_ok=True)

    # The output HTML file will have the same name as the PDF
    html_filename = os.path.splitext(os.path.basename(pdf_path))[0] + ".html"
    html_path = os.path.join(output_dir, html_filename)

print(f"