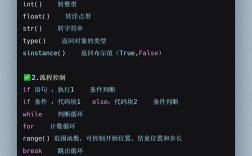

Of course! Calculating correlation in Python is a fundamental task in data analysis. It helps you understand the linear relationship between two variables.

Here's a comprehensive guide covering:

- What is Correlation? (A quick refresher)

- The Main Tools: pandas and scipy

- Step-by-Step Examples (from simple to advanced)

- Visualizing Correlation (Crucial for interpretation!)

- Important Considerations (Pitfalls to avoid)

What is Correlation? (A Quick Refresher)

Correlation measures the strength and direction of a linear relationship between two numerical variables.

- Correlation Coefficient (r): A value between -1 and +1.

- +1: Perfect positive correlation (as one variable increases, the other increases, and all points lie exactly on an upward-sloping straight line).

- -1: Perfect negative correlation (as one variable increases, the other decreases, and all points lie exactly on a downward-sloping straight line).

- 0: No linear correlation.

- Strength: The closer the value is to +1 or -1, the stronger the linear relationship. A value near 0 indicates a weak or no linear relationship.

- Direction: A positive value means the variables move in the same direction; a negative value means they move in opposite directions.
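To make the definition concrete, here is a minimal pure-Python sketch of the Pearson coefficient: the sum of products of each variable's deviations from its mean, divided by the square root of the product of the summed squared deviations. The helper name pearson_r is hypothetical and only for illustration; the libraries covered below do this for you.

```python
import math


def pearson_r(x, y):
    """Pearson correlation coefficient computed from its definition (illustrative sketch)."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("x and y must have the same length (at least 2 values)")
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    # Numerator: sum of products of deviations from the means (co-movement)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    # Denominator terms: sums of squared deviations (spread of each variable)
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    syy = sum((yi - mean_y) ** 2 for yi in y)
    return sxy / math.sqrt(sxx * syy)


print(round(pearson_r([1, 2, 3, 4], [2, 4, 6, 8]), 6))          # 1.0  (perfect positive)
print(round(pearson_r([1, 2, 3, 4], [8, 6, 4, 2]), 6))          # -1.0 (perfect negative)
print(round(pearson_r([-2, -1, 0, 1, 2], [4, 1, 0, 1, 4]), 6))  # 0.0  (U-shape, no linear trend)
```

The last line previews a pitfall discussed later: a perfect U-shaped relationship can still have a Pearson coefficient of 0.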

Crucial Point: Correlation does not imply causation! Just because two variables are correlated doesn't mean one causes the other.

The Main Tools in Python

You'll primarily use two libraries:

- Pandas: Excellent for working with DataFrames. Its .corr() method is the easiest way to calculate a correlation matrix for all numeric columns in a dataset.

- SciPy: Offers more statistical functions, including pearsonr, which returns the correlation coefficient and, importantly, the p-value. The p-value tells you how likely a correlation at least this strong would be if there were no linear relationship at all, which is how you judge whether the correlation is statistically significant.
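One practical detail: in pandas 2.0 and later, DataFrame.corr() raises an error if the DataFrame also contains non-numeric columns, unless you pass numeric_only=True. A minimal sketch with a small hypothetical DataFrame that includes a text column:

```python
import pandas as pd

# Hypothetical data: one text column alongside two numeric columns
df_mixed = pd.DataFrame({
    "Name": ["Ana", "Ben", "Cy", "Dee"],
    "Hours_Studied": [1, 2, 3, 4],
    "Exam_Score": [55, 62, 70, 75],
})

# numeric_only=True restricts the calculation to the numeric columns
corr = df_mixed.corr(numeric_only=True)
print(corr)
```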

Step-by-Step Examples

Let's start with a simple example and build up.

Example 1: Correlation between Two Variables (Pandas)

First, make sure you have the necessary libraries installed:

pip install pandas numpy scipy matplotlib seaborn

Now, let's calculate the correlation between two lists of numbers.

import pandas as pd

import numpy as np

# Sample data: Hours studied and exam score

hours_studied = [1, 2, 3, 4, 5, 6, 7, 8]

exam_score = [55, 60, 62, 70, 75, 80, 85, 90]

# Create a pandas DataFrame

df = pd.DataFrame({

'Hours_Studied': hours_studied,

'Exam_Score': exam_score

})

print("DataFrame:")

print(df)

print("\n")

# Calculate the correlation matrix

correlation_matrix = df.corr()

print("Correlation Matrix:")

print(correlation_matrix)

Output:

DataFrame:

Hours_Studied Exam_Score

0 1 55

1 2 60

2 3 62

3 4 70

4 5 75

5 6 80

6 7 85

7 8 90

Correlation Matrix:

Hours_Studied Exam_Score

Hours_Studied 1.000000 0.996643

Exam_Score 0.996643 1.000000

The output is a matrix. The value at the intersection of Hours_Studied and Exam_Score is the correlation coefficient, approximately 0.997. This is a very strong positive correlation, as expected.
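If you only need the single number rather than the full matrix, you can call .corr() on one column and pass the other, or use NumPy's np.corrcoef, which returns a 2x2 matrix whose off-diagonal entry is the coefficient. A short sketch on the same data, showing both give the same value:

```python
import numpy as np
import pandas as pd

hours_studied = [1, 2, 3, 4, 5, 6, 7, 8]
exam_score = [55, 60, 62, 70, 75, 80, 85, 90]
df = pd.DataFrame({"Hours_Studied": hours_studied, "Exam_Score": exam_score})

# Series.corr returns a single float instead of a matrix
r_pandas = df["Hours_Studied"].corr(df["Exam_Score"])

# np.corrcoef returns a 2x2 matrix; entry [0, 1] is the coefficient
r_numpy = np.corrcoef(hours_studied, exam_score)[0, 1]

print(f"{r_pandas:.4f} {r_numpy:.4f}")  # 0.9966 0.9966
```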

Example 2: Correlation with a P-value (SciPy)

To determine if this correlation is statistically significant (i.e., not just due to random chance), we use scipy.stats.pearsonr.

from scipy.stats import pearsonr

# The pearsonr function returns two values: the correlation coefficient and the p-value

corr_coefficient, p_value = pearsonr(df['Hours_Studied'], df['Exam_Score'])

print(f"Correlation Coefficient: {corr_coefficient:.4f}")

print(f"P-value: {p_value:.4f}")

# Interpretation

alpha = 0.05 # Significance level

if p_value < alpha:

print("\nThe correlation is statistically significant (p < 0.05).")

else:

print("\nThe correlation is not statistically significant (p >= 0.05).")

Output:

Correlation Coefficient: 0.9966

P-value: 0.0000

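The correlation is statistically significant (p < 0.05).

The p-value is so small that it rounds to 0.0000 at four decimal places. A correlation this strong would be very unlikely to appear by chance if hours studied and exam score were unrelated, so this is strong evidence that the positive correlation is not just random noise.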
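Where does that p-value come from? For the default two-sided test, pearsonr's p-value should match a t-test on r with n - 2 degrees of freedom, using t = r * sqrt(n - 2) / sqrt(1 - r^2). The sketch below recomputes it by hand with scipy.stats.t as a cross-check; it is an illustration of the underlying test, not a replacement for pearsonr.

```python
import numpy as np
from scipy import stats

hours = np.array([1, 2, 3, 4, 5, 6, 7, 8])
scores = np.array([55, 60, 62, 70, 75, 80, 85, 90])
n = len(hours)

r, p = stats.pearsonr(hours, scores)

# t statistic for testing "true correlation = 0"
t_stat = r * np.sqrt(n - 2) / np.sqrt(1 - r**2)

# Two-sided p-value: probability of |T| >= |t_stat| with n - 2 degrees of freedom
p_manual = 2 * stats.t.sf(abs(t_stat), df=n - 2)

print(f"pearsonr p-value: {p:.3e}")
print(f"manual p-value:   {p_manual:.3e}")
```

Note that n - 2 is only 6 here, so the test rests on very little data; the same r from a larger sample would give an even smaller p-value.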

Example 3: Correlation Matrix for a Larger Dataset

.corr() is most powerful when applied to a DataFrame with many columns. Let's create a more complex dataset.

# Create a sample DataFrame with multiple variables

data = {

'Age': [25, 30, 45, 22, 35, 50, 28, 40],

'Income': [50000, 62000, 95000, 48000, 75000, 110000, 58000, 85000],

'Experience': [2, 5, 20, 1, 8, 25, 3, 15],

'Satisfaction_Score': [7, 8, 6, 9, 7, 5, 8, 6]

}

df_multi = pd.DataFrame(data)

print("Multi-variable DataFrame:")

print(df_multi)

print("\n")

# Calculate the full correlation matrix

full_correlation_matrix = df_multi.corr()

print("Full Correlation Matrix:")

print(full_correlation_matrix)

Output:

Multi-variable DataFrame:

Age Income Experience Satisfaction_Score

0 25 50000 2 7

1 30 62000 5 8

2 45 95000 20 6

3 22 48000 1 9

4 35 75000 8 7

5 50 110000 25 5

6 28 58000 3 8

7 40 85000 15 6

Full Correlation Matrix:

Age Income Experience Satisfaction_Score

Age 1.000000 0.996972 0.986823 -0.912226

Income 0.996972 1.000000 0.988487 -0.897716

Experience 0.986823 0.988487 1.000000 -0.904567

Satisfaction_Score -0.912226 -0.897716 -0.904567 1.000000

This matrix shows the correlation between every pair of variables. For instance, Age and Income have a strong positive correlation (0.997), while Age and Satisfaction_Score have a strong negative correlation (-0.912).
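With many columns, reading the matrix cell by cell gets tedious. One way to summarize it is to list each pair of columns once (skipping the diagonal and the mirrored half) and rank the pairs by the absolute value of the coefficient. A minimal sketch on the same sample data as Example 3:

```python
from itertools import combinations

import pandas as pd

# Same sample data as Example 3
df_multi = pd.DataFrame({
    'Age': [25, 30, 45, 22, 35, 50, 28, 40],
    'Income': [50000, 62000, 95000, 48000, 75000, 110000, 58000, 85000],
    'Experience': [2, 5, 20, 1, 8, 25, 3, 15],
    'Satisfaction_Score': [7, 8, 6, 9, 7, 5, 8, 6],
})
corr = df_multi.corr()

# Each unordered pair of columns exactly once
pairs = [(a, b, corr.loc[a, b]) for a, b in combinations(corr.columns, 2)]

# Rank by strength, ignoring the sign
pairs.sort(key=lambda item: abs(item[2]), reverse=True)

for a, b, r in pairs:
    print(f"{a} vs {b}: r = {r:+.3f}")
```

Sorting by absolute value puts strong negative correlations alongside strong positive ones; the sign printed next to each value still tells you the direction.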

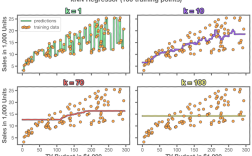

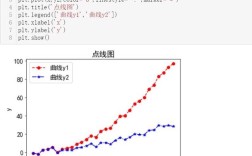

Visualizing Correlation (Crucial!)

A number is good, but a picture is often better. A heatmap is a common way to visualize a whole correlation matrix at once.

Example: Heatmap with Seaborn

Seaborn makes creating beautiful heatmaps incredibly easy.

import seaborn as sns
import matplotlib.pyplot as plt

# Use the correlation matrix from the previous example
full_correlation_matrix = df_multi.corr()

# Create a heatmap; vmin/vmax pin the color scale to the full -1..+1 range
plt.figure(figsize=(8, 6))  # Set the figure size
sns.heatmap(full_correlation_matrix, annot=True, cmap='coolwarm', fmt=".2f", vmin=-1, vmax=1)

# Add a title
plt.title('Correlation Heatmap of Employee Data', fontsize=16)
plt.show()

What this plot tells you:

- annot=True: This writes the correlation coefficient value inside each cell.

- cmap='coolwarm': This diverging color map runs from blue (low values) to red (high values), and the intensity of the color represents the strength. When the color scale spans the full -1 to +1 range (vmin=-1, vmax=1), red marks positive correlations and blue marks negative ones.

- The plot immediately makes it obvious which variables are strongly related and in which direction.
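Because a correlation matrix is symmetric, half of the heatmap repeats the other half, and the diagonal is always 1.0. Seaborn's heatmap accepts a mask argument that hides every cell where the mask is True; the sketch below hides the upper triangle (including the diagonal) so each pair appears once. It reuses the Example 3 data; the title text is just an example.

```python
import numpy as np
import pandas as pd
import seaborn as sns
import matplotlib.pyplot as plt

# Same sample data as Example 3
df_multi = pd.DataFrame({
    'Age': [25, 30, 45, 22, 35, 50, 28, 40],
    'Income': [50000, 62000, 95000, 48000, 75000, 110000, 58000, 85000],
    'Experience': [2, 5, 20, 1, 8, 25, 3, 15],
    'Satisfaction_Score': [7, 8, 6, 9, 7, 5, 8, 6],
})
corr = df_multi.corr()

# True = hide the cell: upper triangle plus the diagonal
mask = np.triu(np.ones_like(corr, dtype=bool))

plt.figure(figsize=(8, 6))
sns.heatmap(corr, mask=mask, annot=True, fmt=".2f",
            cmap='coolwarm', vmin=-1, vmax=1)
plt.title('Correlation Heatmap (lower triangle only)')
plt.show()
```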

Important Considerations & Pitfalls

- Correlation vs. Causation: This is the most important rule. Ice cream sales and shark attacks are highly correlated in the summer, but one does not cause the other. A third variable (hot weather) causes both to increase.

- Linearity: Pearson correlation only measures linear relationships. Your data could have a strong non-linear relationship (e.g., a U-shape) and still have a correlation coefficient near 0. Always visualize your data with a scatter plot!

import numpy as np
import matplotlib.pyplot as plt
from scipy.stats import pearsonr

# Example of a non-linear relationship with low Pearson correlation
x = np.linspace(-10, 10, 100)
y = x**2  # A perfect quadratic relationship

# Scatter plot reveals the relationship
plt.scatter(x, y)
plt.title("Non-Linear Relationship")
plt.show()

# Pearson correlation will be close to 0
print(f"Pearson correlation for x and x^2: {pearsonr(x, y)[0]:.4f}")

- Outliers: A single outlier can dramatically inflate or deflate a correlation coefficient. Check your data for outliers (a scatter plot is a quick way) before trusting the number.

- Type of Correlation:

  - Pearson (df.corr() or scipy.stats.pearsonr): The default. Measures linear correlation between two continuous variables. The coefficient itself needs no distributional assumption, but its p-value assumes the data are roughly normally distributed.

  - Spearman: Measures monotonic relationships (whether linear or not). It's based on rank order and is more robust to outliers. Use it when your data isn't normally distributed or is ordinal.

# Calculate Spearman correlation with pandas
spearman_corr = df_multi.corr(method='spearman')
print("\nSpearman Correlation Matrix:")
print(spearman_corr)
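To see the outlier and Spearman points together, the sketch below takes the hours/score data from Example 1, appends one hypothetical outlier (a student who studied 9 hours but scored 20), and compares Pearson's r with Spearman's rho from scipy.stats.spearmanr before and after.

```python
from scipy.stats import pearsonr, spearmanr

hours = [1, 2, 3, 4, 5, 6, 7, 8]
scores = [55, 60, 62, 70, 75, 80, 85, 90]

# Hypothetical outlier: 9 hours studied, but a score of only 20
hours_out = hours + [9]
scores_out = scores + [20]

for label, x, y in [("clean", hours, scores), ("with outlier", hours_out, scores_out)]:
    r = pearsonr(x, y)[0]     # linear correlation
    rho = spearmanr(x, y)[0]  # rank (monotonic) correlation
    print(f"{label:>12}: Pearson r = {r:.3f}, Spearman rho = {rho:.3f}")
```

On this data, one point pulls Pearson's r from about 0.997 down to about 0.013, while Spearman's rho drops from 1.0 to 0.4. Both are affected, but the rank-based measure much less so, which is why checking for outliers matters whichever coefficient you use.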

Summary of Key Functions

| Goal | Function | Library | Key Output |
|---|---|---|---|
| Correlation between two columns | df['col_A'].corr(df['col_B']) | Pandas | Single correlation coefficient |
| Correlation matrix for all numeric columns | df.corr() | Pandas | DataFrame of correlations |
| Correlation + P-value for two columns | scipy.stats.pearsonr(col_A, col_B) | SciPy | (correlation_coefficient, p_value) |
| Visualize correlation matrix | seaborn.heatmap(df.corr(), annot=True) | Seaborn | A colored heatmap |