In Python machine-learning code, "Leave-One-Out" most commonly refers to Leave-One-Out Cross-Validation (LOOCV), a technique for estimating how well a model generalizes to unseen data.

Here's a complete guide covering:

- What is LOOCV? (The concept)
- How to implement it manually (to understand the mechanics)
- The best way: Using scikit-learn (the practical, standard approach)
- Pros and Cons of LOOCV
- When to use LOOCV

What is Leave-One-Out Cross-Validation (LOOCV)?

LOOCV is a special case of K-Fold Cross-Validation where the number of folds (K) is equal to the number of data points in your dataset.
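To make the "K equals N" relationship concrete, the sketch below compares scikit-learn's `KFold` (without shuffling) using `n_splits` equal to the sample count against `LeaveOneOut` on a small toy array. The data values are arbitrary and chosen only for illustration; the point is that both splitters should yield the same train/test index pairs.

```python
import numpy as np
from sklearn.model_selection import KFold, LeaveOneOut

# A small toy dataset: 6 samples, 2 features (values are arbitrary)
X = np.arange(12).reshape(6, 2)
n_samples = X.shape[0]

# K-Fold with K equal to the number of samples, no shuffling
kfold_splits = list(KFold(n_splits=n_samples).split(X))
# Leave-One-Out splitter
loo_splits = list(LeaveOneOut().split(X))

# Compare the (train, test) index arrays fold by fold
same = all(
    np.array_equal(k_train, l_train) and np.array_equal(k_test, l_test)
    for (k_train, k_test), (l_train, l_test) in zip(kfold_splits, loo_splits)
)
print(f"Folds: {len(kfold_splits)} vs {len(loo_splits)}, identical splits: {same}")
```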

The Process:

- Imagine you have a dataset with N samples.
- The model is trained N times.
- In each iteration (i from 1 to N):
  - Training Set: All data points except the i-th one.
  - Testing Set: Only the i-th data point.
- You end up with N performance scores (e.g., accuracy, MSE).
- The final performance of your model is the average of these N scores.
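For a regression model scored by squared error, the averaging step in the process above can be written as follows. Here $\hat{y}_i^{(-i)}$ denotes the prediction for sample $i$ from the model trained without it; the $\mathrm{CV}_{(N)}$ notation is a common textbook convention, not a scikit-learn name. For accuracy, $\mathrm{MSE}_i$ is replaced by 1 if the left-out sample is classified correctly and 0 otherwise.

$$
\mathrm{CV}_{(N)} = \frac{1}{N} \sum_{i=1}^{N} \mathrm{MSE}_i,
\qquad
\mathrm{MSE}_i = \left( y_i - \hat{y}_i^{(-i)} \right)^2
$$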

Example: If you have 100 data points, LOOCV will train 100 different models. Each model is trained on 99 samples and tested on the 1 sample that was left out.
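The 100-sample example can be checked directly with scikit-learn's `LeaveOneOut` splitter. This minimal sketch uses a placeholder array of zeros, since only the number of samples matters for the splits.

```python
import numpy as np
from sklearn.model_selection import LeaveOneOut

# Placeholder data: 100 samples with 3 features (values are irrelevant, only the count matters)
X = np.zeros((100, 3))
loo = LeaveOneOut()

print(f"Number of models to train: {loo.get_n_splits(X)}")

train_sizes = []
test_sizes = []
for train_idx, test_idx in loo.split(X):
    train_sizes.append(len(train_idx))
    test_sizes.append(len(test_idx))

print(f"Training set sizes: {set(train_sizes)}")  # every fold trains on 99 samples
print(f"Test set sizes: {set(test_sizes)}")       # every fold tests on 1 sample
```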

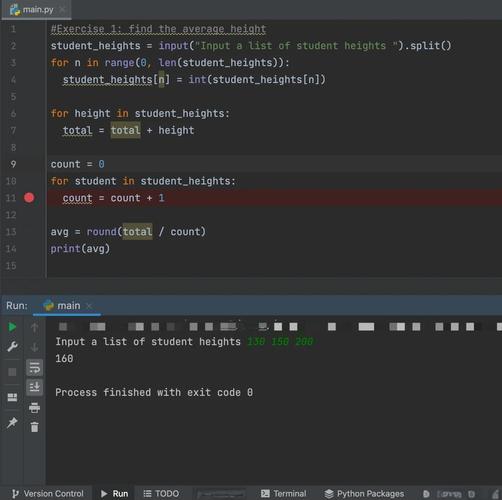

Manual Implementation (for understanding)

Let's write a simple LOOCV loop from scratch to see how it works. We'll use scikit-learn for the model but handle the splitting logic ourselves.

```python
import numpy as np
from sklearn.linear_model import LinearRegression
from sklearn.datasets import make_regression
from sklearn.metrics import mean_squared_error

# 1. Create a sample dataset
X, y = make_regression(n_samples=10, n_features=1, noise=25, random_state=42)
print(f"Dataset shape: {X.shape}")
# Dataset shape: (10, 1)

# 2. Initialize the model
model = LinearRegression()

# 3. Prepare for LOOCV
n_samples = X.shape[0]
mse_scores = []

print("\n--- Starting Manual LOOCV ---")

# 4. Loop through each sample to be the "left-out" test set
for i in range(n_samples):
    # Split data into training and testing sets
    X_train = np.delete(X, i, axis=0)
    y_train = np.delete(y, i, axis=0)
    X_test = X[i:i + 1]  # Slicing keeps a 2D array of shape (1, n_features) for predict()
    y_test = y[i:i + 1]  # Slicing keeps a 1D array of shape (1,); a bare scalar is rejected by mean_squared_error

    # Train the model on the remaining N-1 samples
    model.fit(X_train, y_train)

    # Predict the left-out sample and calculate its error
    y_pred = model.predict(X_test)
    error = mean_squared_error(y_test, y_pred)
    mse_scores.append(error)
    print(f"Iteration {i+1}: Test on sample {i}, MSE = {error:.2f}")

# 5. Calculate the average performance
average_mse = np.mean(mse_scores)
std_mse = np.std(mse_scores)

print("\n--- LOOCV Results ---")
print(f"Mean Squared Error (MSE) from LOOCV: {average_mse:.2f}")
print(f"Standard Deviation of MSE: {std_mse:.2f}")
```

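This manual approach is great for understanding the underlying logic, but it is verbose and easy to get wrong (for example, the single test sample must be kept as an array rather than a scalar). For real-world use, scikit-learn's utilities handle the splitting for you and can run folds in parallel via the n_jobs argument.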

The Best Way: Using scikit-learn's LeaveOneOut

scikit-learn provides a clean, efficient, and integrated way to perform LOOCV using its cross-validation utilities. This is the standard and recommended approach.

The key is to pass a LeaveOneOut splitter as the cv argument of the cross_val_score function, which then handles the training and evaluation of every fold for you.
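It can help to see that the splitter is nothing more than a generator of `(train_indices, test_indices)` pairs. The sketch below prints the first two pairs and relies on the fact that `cross_val_score` also accepts an iterable of such pairs for `cv`, so passing the splitter object or the materialized list of its splits should give the same scores.

```python
import numpy as np
from sklearn.datasets import make_regression
from sklearn.linear_model import LinearRegression
from sklearn.model_selection import LeaveOneOut, cross_val_score

X, y = make_regression(n_samples=10, n_features=1, noise=25, random_state=42)
loo = LeaveOneOut()

# Each item yielded by split() is a (train_indices, test_indices) pair
for train_idx, test_idx in list(loo.split(X))[:2]:
    print(f"train={train_idx}, test={test_idx}")

# Passing the splitter object...
scores_from_splitter = cross_val_score(
    LinearRegression(), X, y, cv=loo, scoring="neg_mean_squared_error"
)
# ...or the materialized list of index pairs uses the same folds
scores_from_list = cross_val_score(
    LinearRegression(), X, y, cv=list(loo.split(X)), scoring="neg_mean_squared_error"
)
print(np.allclose(scores_from_splitter, scores_from_list))
```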

```python
import numpy as np
from sklearn.linear_model import LinearRegression
from sklearn.datasets import make_regression
from sklearn.model_selection import cross_val_score, LeaveOneOut

# 1. Create the same sample dataset
X, y = make_regression(n_samples=10, n_features=1, noise=25, random_state=42)

# 2. Initialize the model
model = LinearRegression()

# 3. Create the LOOCV splitter object
loo = LeaveOneOut()

# 4. Use cross_val_score to perform LOOCV
# Without a scoring argument, cross_val_score uses the regressor's score method (R^2),
# which is undefined on a single test sample, so we request MSE explicitly.
# scikit-learn scorers follow a "higher is better" convention, and MSE is a loss
# (lower is better), so the scorer is 'neg_mean_squared_error' and returns -MSE.
scores = cross_val_score(model, X, y, cv=loo, scoring='neg_mean_squared_error')

# The scores are negative MSE, so we convert them back
mse_scores = -scores

# 5. Calculate the average performance
average_mse = np.mean(mse_scores)
std_mse = np.std(mse_scores)

print("--- LOOCV using scikit-learn ---")
print(f"MSE scores for each fold: {mse_scores.round(2)}")
print(f"Mean Squared Error (MSE) from LOOCV: {average_mse:.2f}")
print(f"Standard Deviation of MSE: {std_mse:.2f}")
```
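Why the explicit scoring argument matters: by default, `cross_val_score` scores with the estimator's own `score` method, which is R² for regressors, and R² is not defined for a single test sample. The sketch below omits `scoring` to show that every LOOCV fold then comes back as NaN (scikit-learn also emits an `UndefinedMetricWarning`, silenced here to keep the output readable).

```python
import warnings

import numpy as np
from sklearn.datasets import make_regression
from sklearn.linear_model import LinearRegression
from sklearn.model_selection import LeaveOneOut, cross_val_score

X, y = make_regression(n_samples=10, n_features=1, noise=25, random_state=42)

with warnings.catch_warnings():
    # r2_score warns that R^2 is not well-defined with fewer than two samples
    warnings.simplefilter("ignore")
    default_scores = cross_val_score(LinearRegression(), X, y, cv=LeaveOneOut())

print(f"Default (R^2) scores: {default_scores}")
print(f"All NaN: {np.isnan(default_scores).all()}")
```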

A More Complex Example (Classification)

LOOCV works for classification too. Here's an example with a LogisticRegression model.

```python
import numpy as np
from sklearn.model_selection import LeaveOneOut, cross_val_score
from sklearn.linear_model import LogisticRegression
from sklearn.datasets import load_iris

# 1. Load the Iris dataset (150 samples, 3 classes)
iris = load_iris()
X = iris.data
y = iris.target

# 2. Initialize the classifier
# The default 'lbfgs' solver handles the three classes directly; max_iter is raised
# from its default of 100 to give the solver room to converge on the unscaled features.
model = LogisticRegression(max_iter=1000)

# 3. Create the LOOCV splitter
loo = LeaveOneOut()

# 4. Perform LOOCV for classification (150 fits)
# With no scoring argument, cross_val_score uses the classifier's score method (mean accuracy)
accuracy_scores = cross_val_score(model, X, y, cv=loo)

# 5. Calculate the average performance
average_accuracy = np.mean(accuracy_scores)
std_accuracy = np.std(accuracy_scores)

print("--- LOOCV for Classification ---")
print(f"Number of iterations (folds): {len(accuracy_scores)}")
print(f"Accuracy scores for each fold: {accuracy_scores}")
print(f"Mean Accuracy from LOOCV: {average_accuracy:.4f}")
print(f"Standard Deviation of Accuracy: {std_accuracy:.4f}")
```
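Because each LOOCV test set holds a single sample, every per-fold accuracy is either 0.0 or 1.0, and the mean accuracy is simply the fraction of left-out samples classified correctly. The sketch below checks this with `cross_val_predict`, which returns each sample's prediction from the model that never saw it. The `max_iter=1000` setting is an assumption carried over to keep the solver from stopping early.

```python
import numpy as np
from sklearn.datasets import load_iris
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import accuracy_score
from sklearn.model_selection import LeaveOneOut, cross_val_predict, cross_val_score

X, y = load_iris(return_X_y=True)
model = LogisticRegression(max_iter=1000)
loo = LeaveOneOut()

# Per-fold accuracies: one test sample per fold, so each score is 0.0 or 1.0
fold_scores = cross_val_score(model, X, y, cv=loo)
print(f"Distinct fold scores: {np.unique(fold_scores)}")

# Out-of-fold predictions: each sample is predicted by the model trained without it
oof_pred = cross_val_predict(model, X, y, cv=loo)
print(f"Mean fold accuracy:          {fold_scores.mean():.4f}")
print(f"Accuracy of OOF predictions: {accuracy_score(y, oof_pred):.4f}")
```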

Pros and Cons of LOOCV

Advantages:

- Low-Bias Performance Estimate: Since almost all the data is used for training in each iteration (N-1 out of N samples), each model is nearly as good as one trained on the full dataset, so the averaged score is a nearly unbiased estimate of how the model will perform on unseen data.

- Deterministic: There's no randomness in the splits (unlike K-Fold with shuffle=True), so you will get exactly the same result every time you run it on the same data, provided the model's own training is deterministic (e.g., a fixed random_state).
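The determinism point can be seen by listing the test indices each splitter produces. In this sketch, `fold_test_indices` is a hypothetical helper written for illustration; `LeaveOneOut` takes no `random_state`, so two runs give identical folds, while shuffled `KFold` with different seeds will generally assign samples to folds differently.

```python
import numpy as np
from sklearn.model_selection import KFold, LeaveOneOut

X = np.arange(20).reshape(10, 2)  # 10 toy samples


def fold_test_indices(splitter):
    """Hypothetical helper: return the test indices of each fold as a list of tuples."""
    return [tuple(test) for _, test in splitter.split(X)]


# LeaveOneOut: two independent runs produce identical folds
run_1 = fold_test_indices(LeaveOneOut())
run_2 = fold_test_indices(LeaveOneOut())
print(f"LOO runs identical: {run_1 == run_2}")

# Shuffled 5-fold CV: the folds depend on the seed
shuffled_a = fold_test_indices(KFold(n_splits=5, shuffle=True, random_state=0))
shuffled_b = fold_test_indices(KFold(n_splits=5, shuffle=True, random_state=1))
print(f"Shuffled K-Fold runs identical: {shuffled_a == shuffled_b}")
```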

Disadvantages:

- Computationally Expensive: This is the biggest drawback. If you have 100,000 data points, you have to train 100,000 models. This can be prohibitively slow for large datasets or complex models (like deep neural networks).

- High Variance: The LOOCV estimate can have high variance. Because the N training sets differ by only one sample, the N fitted models are nearly identical and their outputs are highly correlated. The mean of highly correlated quantities has higher variance than the mean of less correlated ones, so the final estimate can be less stable than, say, 10-Fold CV.
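On the computational cost point: for some models the N refits can be avoided. For ordinary least squares specifically, a standard identity (the basis of the PRESS statistic) gives each left-out residual from a single fit on all the data: e_i / (1 - h_ii), where e_i is the ordinary residual and h_ii is the i-th diagonal entry of the hat matrix. This minimal sketch assumes a linear model with an intercept and a full-rank design matrix; it does not carry over to models in general. (scikit-learn's RidgeCV uses an efficient leave-one-out scheme of this kind by default for ridge regression.)

```python
import numpy as np
from sklearn.datasets import make_regression
from sklearn.linear_model import LinearRegression
from sklearn.model_selection import LeaveOneOut, cross_val_score

X, y = make_regression(n_samples=10, n_features=1, noise=25, random_state=42)

# Design matrix with an explicit intercept column, matching LinearRegression(fit_intercept=True)
A = np.column_stack([np.ones(len(X)), X])

# One least-squares fit on all N samples
coef, *_ = np.linalg.lstsq(A, y, rcond=None)
residuals = y - A @ coef

# Diagonal of the hat matrix H = A (A^T A)^{-1} A^T, computed without forming H
leverage = np.einsum("ij,ji->i", A, np.linalg.pinv(A))

# Leave-one-out residuals from the identity e_i / (1 - h_ii)
loo_residuals = residuals / (1 - leverage)
shortcut_mse = np.mean(loo_residuals ** 2)

# Brute force: N separate fits via LeaveOneOut
brute_mse = -cross_val_score(
    LinearRegression(), X, y, cv=LeaveOneOut(), scoring="neg_mean_squared_error"
).mean()

print(f"Shortcut LOOCV MSE:    {shortcut_mse:.4f}")
print(f"Brute-force LOOCV MSE: {brute_mse:.4f}")
```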

When to Use LOOCV?

Use LOOCV when:

- Your dataset is small. With small datasets, you want to use as much data as possible for training, and LOOCV trains on all but one sample in every iteration.

- You need a nearly unbiased performance estimate. The low bias is a significant advantage.

- Computational cost is not a concern. If training your model is fast and your dataset is manageable, LOOCV is an excellent choice.

When to avoid LOOCV:

- Your dataset is large. For datasets with thousands or millions of samples, the computational cost is too high. Use K-Fold Cross-Validation (with K=5 or K=10) instead. It provides a great balance between bias and variance and is much faster.
- Your model is very slow to train. Even on medium-sized datasets, a slow model makes LOOCV impractical.
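Switching from LOOCV to K-Fold is a one-line change: replace the `LeaveOneOut` splitter with a `KFold` splitter. In this sketch the dataset size, noise level, and seeds are arbitrary choices for illustration; with 5,000 samples, LOOCV would need 5,000 fits, while 10-Fold CV needs 10.

```python
from sklearn.datasets import make_regression
from sklearn.linear_model import LinearRegression
from sklearn.model_selection import KFold, cross_val_score

# A larger synthetic dataset where LOOCV would need 5,000 fits
X, y = make_regression(n_samples=5000, n_features=10, noise=10, random_state=0)

# 10-Fold CV: only 10 fits; shuffle with a fixed seed so the result is reproducible
kf = KFold(n_splits=10, shuffle=True, random_state=0)
scores = cross_val_score(LinearRegression(), X, y, cv=kf, scoring="neg_mean_squared_error")

mse_scores = -scores
print(f"Folds: {len(mse_scores)}")
print(f"Mean MSE from 10-Fold CV: {mse_scores.mean():.2f} (std {mse_scores.std():.2f})")
```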