Of course. A "Python OutOfMemory" error is a common and critical issue that can halt your program unexpectedly. Let's break down what it is, why it happens, and how to diagnose and fix it.

What is an OutOfMemory Error?

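In Python, this error is the built-in MemoryError exception. It is raised when your program asks for more memory than the operating system can provide. On systems that overcommit memory (Linux does by default), an allocation may appear to succeed and the operating system's out-of-memory killer may terminate the process later instead, so you won't always see a MemoryError.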

Think of your computer's RAM as a fixed-size container. Your Python program is a process that needs space to run. When it tries to store data (like a huge list, a massive NumPy array, or a complex object graph) and there isn't enough free RAM left, the Python interpreter raises a MemoryError.
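MemoryError is an ordinary exception, so a failed allocation can be caught like any other. The minimal sketch below uses a hypothetical try_allocate helper that asks for a list far larger than any 64-bit address space can hold. On a 64-bit CPython build this request fails its size check before anything is allocated, so it is safe to run.

```python
def try_allocate(n_items):
    """Hypothetical helper: try to build a list of n_items and report success."""
    try:
        data = [0] * n_items
    except MemoryError:
        return False
    del data  # release the list immediately
    return True

print(try_allocate(1_000))    # True
print(try_allocate(2 ** 62))  # False on a 64-bit CPython build
```

Catching MemoryError is mostly useful for reporting the failure or falling back to a smaller workload; the process may also be killed by the operating system before Python gets a chance to raise it.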

Common Causes of MemoryError

Here are the most frequent scenarios that lead to running out of memory in Python:

a) Loading a Large Dataset into Memory

This is one of the most common causes. You try to read an entire file (e.g., a multi-gigabyte CSV, JSON, or Parquet file) or a large database table into a single Python object like a list or a pandas DataFrame.

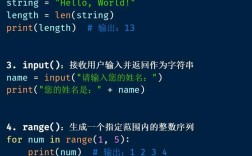

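```python
# Example: Reading a huge CSV file
# If the file is 10GB and you have only 8GB of RAM, this will almost certainly fail:
# a DataFrame often needs as much RAM as the file's size on disk, or more.
import pandas as pd

df = pd.read_csv('a_very_large_file.csv')
```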

b) Creating Massive In-Memory Data Structures

Sometimes, the problem isn't a file, but the way you generate or store data in memory.

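```python
# Example: Creating a list with a billion elements
# [0] * n stores n references to the *same* int object, so on a 64-bit build
# the list itself needs ~8 bytes per slot: 1,000,000,000 * 8 bytes = ~8 GB of RAM.
# A list of a billion *distinct* ints (e.g. list(range(n))) also needs ~28 bytes
# per int object, for a total of roughly 36 GB or more.
# huge_list = [0] * 1_000_000_000  # This will likely cause a MemoryError
```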
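You can check this per-slot arithmetic with sys.getsizeof, which reports an object's own size but not the size of the objects it references. A small sketch assuming a CPython build; list_slot_bytes and estimate_distinct_int_list are illustrative names.

```python
import struct
import sys

POINTER_SIZE = struct.calcsize("P")  # bytes per list slot: 8 on a 64-bit build

def list_slot_bytes(n):
    """Bytes used by the pointer array of [0] * n (the shared 0 is not counted)."""
    return sys.getsizeof([0] * n) - sys.getsizeof([])

def estimate_distinct_int_list(n):
    """Rough size of a list of n distinct ints: one slot plus one int object each."""
    int_size = sys.getsizeof(10**6)  # typically 28 bytes on 64-bit CPython
    return n * (POINTER_SIZE + int_size)

print(list_slot_bytes(1_000))  # about 1_000 * POINTER_SIZE
print(f"{estimate_distinct_int_list(1_000_000_000) / 1e9:.0f} GB")
```

The estimate ignores allocator overhead, so treat it as a lower bound.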

c) Memory Leaks

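A memory leak happens when your program keeps holding memory it no longer needs. In Python this usually means something still references the objects (a cache, a global, a long-lived list), so they can't be freed. Over time, the program consumes more and more memory until it crashes.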

Common causes of leaks:

- Circular References: Objects that reference each other in a cycle can't be freed by reference counting alone; they stay in memory until Python's cyclic Garbage Collector (GC) runs.

- Caching Issues: A cache that grows indefinitely without any eviction policy.

- Global Variables: Storing large objects in global variables that are never deleted.
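For the caching case, the standard library's functools.lru_cache gives a cache a size limit and a least-recently-used eviction policy. In this sketch, expensive() is a hypothetical stand-in for a costly computation; the cache never holds more than maxsize results.

```python
from functools import lru_cache

@lru_cache(maxsize=1024)  # evict least-recently-used entries beyond 1024
def expensive(key):
    # Hypothetical costly computation with a sizeable result
    return [key] * 1_000

for k in range(10_000):
    expensive(k)

print(expensive.cache_info().currsize)  # 1024
```

By contrast, @lru_cache(maxsize=None), functools.cache, or a plain module-level dict grows without bound, which is exactly the leak pattern described above.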

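```python
# Simplified example of a circular reference
class Node:
    def __init__(self, value):
        self.value = value
        self.next = None

node1 = Node(1)
node2 = Node(2)
node1.next = node2
node2.next = node1  # Now node1 and node2 point to each other

# Even after `del node1, node2`, each object still holds a reference to the
# other, so reference counting alone can't free them; they stay in memory
# until Python's cyclic garbage collector runs.
```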
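To see that such a cycle is reclaimed by the cyclic garbage collector rather than by reference counting, you can watch one of the objects through a weak reference. This sketch temporarily disables automatic collection so the result is deterministic; cycle_demo is an illustrative name.

```python
import gc
import weakref

class Node:
    def __init__(self, value):
        self.value = value
        self.next = None

def cycle_demo():
    was_enabled = gc.isenabled()
    gc.disable()  # stop automatic collection so we control when it runs
    try:
        node1, node2 = Node(1), Node(2)
        node1.next, node2.next = node2, node1  # build the cycle
        probe = weakref.ref(node1)  # observes node1 without keeping it alive
        del node1, node2
        alive_after_del = probe() is not None  # the cycle keeps both objects alive
        gc.collect()
        alive_after_gc = probe() is not None   # the collector has freed the cycle
        return alive_after_del, alive_after_gc
    finally:
        if was_enabled:
            gc.enable()

print(cycle_demo())  # (True, False)
```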

d) Inefficient Data Structures

Using a data structure that consumes more memory than necessary for your task.

```python
# Example: Using a list of dictionaries instead of a structured array
# For 1 million records, this can use significantly more RAM
# than a NumPy array or a structured pandas DataFrame.
list_of_dicts = [{'id': i, 'value': i * 2} for i in range(1_000_000)]
```
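tracemalloc (covered in Step 2) can quantify the difference. In this sketch the standard-library array module stands in for a NumPy array, storing each column as packed 8-byte integers; traced_bytes is a hypothetical helper, and the exact numbers depend on your Python version.

```python
import tracemalloc
from array import array

N = 100_000

def traced_bytes(build):
    """Bytes still allocated (per tracemalloc) right after calling build()."""
    tracemalloc.start()
    try:
        result = build()  # keep the result alive while measuring
        current, _peak = tracemalloc.get_traced_memory()
    finally:
        tracemalloc.stop()
    return current

rows = traced_bytes(lambda: [{"id": i, "value": i * 2} for i in range(N)])
cols = traced_bytes(lambda: (array("q", range(N)), array("q", (i * 2 for i in range(N)))))

print(f"list of dicts:     {rows / 1e6:.1f} MB")
print(f"two int64 columns: {cols / 1e6:.1f} MB")
```

The column layout avoids a dict and two int objects per record, which is where most of the savings come from.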

e) External Library Issues

Some libraries, especially those with C/C++ backends (like NumPy, pandas, TensorFlow, PyTorch), manage their own memory. If you're not careful with their configuration (for example, batch sizes or memory pre-allocation settings) or with operations that create large intermediate copies, they can consume all available RAM and cause the OS to kill the Python process.

How to Diagnose and Fix Memory Issues

Here's a step-by-step guide to tackling a MemoryError.

Step 1: Reproduce the Issue Reliably

Before you can fix it, you need to be able to reproduce the error. Make a minimal, runnable script that causes the MemoryError. This is crucial for testing your solutions.
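One way to keep a repro fast and repeatable is to run the suspect code at a few small input sizes and record its peak memory: if the peak grows in proportion to the input, you can extrapolate to the size that crashes. A minimal sketch; suspect() is a hypothetical placeholder for your own code.

```python
import tracemalloc

def suspect(n):
    # Hypothetical placeholder for the code that runs out of memory
    return [str(i) for i in range(n)]

def peak_bytes(func, n):
    """Peak traced memory (bytes) while running func(n)."""
    tracemalloc.start()
    try:
        func(n)
        _current, peak = tracemalloc.get_traced_memory()
    finally:
        tracemalloc.stop()
    return peak

for n in (10_000, 20_000, 40_000):
    print(f"n={n:>6}: peak {peak_bytes(suspect, n) / 1e6:.2f} MB")
```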

Step 2: Measure Memory Usage

You can't optimize what you can't measure. Use these tools to see where your memory is going.

a) Using memory_profiler (Line-by-line profiling)

This is excellent for finding which specific line of code is consuming the most memory.

- Install:

```
pip install memory_profiler
```

- Decorate your function:

```python
from memory_profiler import profile

@profile
def my_function():
    # Your code here
    data = [i for i in range(1_000_000)]
    return data

if __name__ == '__main__':
    my_function()
```

- Run:

```
python -m memory_profiler your_script.py
```

This will give you a line-by-line breakdown of memory consumption.

b) Using tracemalloc (Snapshot comparison)

This is a built-in Python module to trace memory allocations. It's great for finding the source of a leak.

Code example:

```python
import tracemalloc

def create_objects():
    # This function creates a lot of objects
    return [i * 2 for i in range(1_000_000)]

tracemalloc.start()

# Take a snapshot before the memory-intensive operation
snapshot1 = tracemalloc.take_snapshot()

# Run the code
large_list = create_objects()

# Take a snapshot after
snapshot2 = tracemalloc.take_snapshot()

# Compare the snapshots
top_stats = snapshot2.compare_to(snapshot1, 'lineno')
print("[ Top 10 differences ]")
for stat in top_stats[:10]:
    print(stat)
```

This will show you which lines allocated the most memory between the two snapshots.

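c) Using psutil (Process-level monitoring)

Use this to check how much memory (resident set size, or RSS) your process is using at any point in your program. psutil is a third-party package; install it with pip install psutil.

```python
import psutil

process = psutil.Process()  # handle to the current process
print(f"Memory used: {process.memory_info().rss / (1024 * 1024):.2f} MB")

# Do some work that allocates memory
data = [0] * 10_000_000  # ~80 MB of list slots on a 64-bit build

print(f"Memory used after work: {process.memory_info().rss / (1024 * 1024):.2f} MB")
```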
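If you'd rather avoid a third-party dependency, the standard-library resource module (Unix only) reports the process's peak resident set size. Note that the unit differs by platform: Linux reports kilobytes, macOS reports bytes. peak_rss_mb is an illustrative helper.

```python
import resource
import sys

def peak_rss_mb():
    """Peak resident set size of this process so far, in MB (Unix only)."""
    peak = resource.getrusage(resource.RUSAGE_SELF).ru_maxrss
    if sys.platform == "darwin":
        return peak / (1024 * 1024)  # macOS reports bytes
    return peak / 1024               # Linux reports kilobytes

before = peak_rss_mb()
data = [0] * 10_000_000  # ~80 MB of list slots on a 64-bit build
print(f"peak RSS: {before:.1f} MB -> {peak_rss_mb():.1f} MB")
```

Unlike psutil's current RSS, this is a high-water mark: it never goes down, which makes it handy for checking a script's worst case at exit.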

Step 3: Implement Solutions

Based on your diagnosis, here are the common fixes.

Solution 1: Process Data in Chunks (for large files)

Don't load the entire file at once. Read it in manageable pieces.

```python
import pandas as pd

# Instead of:
# df = pd.read_csv('huge_file.csv')

# Do this:
chunk_size = 100_000
chunks = pd.read_csv('huge_file.csv', chunksize=chunk_size)

# Process each chunk one by one
for i, chunk in enumerate(chunks):
    print(f"Processing chunk {i+1}")
    # Do your analysis on the 'chunk' DataFrame,
    # for example, calculate the mean of a column:
    # chunk['column_name'].mean()
```
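A detail that's easy to get wrong when chunking: to get the mean of a whole column you must accumulate running totals across chunks, not average the per-chunk means (the last chunk is usually smaller). The sketch below shows the pattern with the standard-library csv module as a dependency-free stand-in for pd.read_csv(..., chunksize=...); iter_chunks and streaming_mean are hypothetical names, and every value in the column is assumed to parse as a float.

```python
import csv
import io
from itertools import islice

def iter_chunks(rows, chunk_size):
    """Yield lists of up to chunk_size rows from any iterator."""
    while True:
        chunk = list(islice(rows, chunk_size))
        if not chunk:
            return
        yield chunk

def streaming_mean(f, column, chunk_size=100_000):
    """Mean of a numeric CSV column, holding at most one chunk in memory."""
    reader = csv.DictReader(f)
    total, count = 0.0, 0
    for chunk in iter_chunks(reader, chunk_size):
        total += sum(float(row[column]) for row in chunk)
        count += len(chunk)
    return total / count if count else float("nan")

# In practice f would be open('huge_file.csv', newline=''); a tiny in-memory file here
sample = io.StringIO("id,value\n1,10\n2,20\n3,30\n")
print(streaming_mean(sample, "value", chunk_size=2))  # 20.0
```

With chunk_size=2 the chunks are [10, 20] and [30]; averaging their means would give 22.5 instead of the correct 20.0.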

Solution 2: Use More Memory-Efficient Data Types

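- Pandas: Use the dtype parameter to specify smaller data types, provided the values fit in the smaller type and the column has no missing values (NumPy integer types can't hold NaN).

```python
import pandas as pd

# int64 uses 8 bytes per value; int32 uses 4 and int16 uses 2.
df = pd.read_csv('file.csv', dtype={'id_column': 'int32'})
```

- NumPy: Pass an explicit dtype to np.array.

```python
import numpy as np

# np.array([1, 2, 3]) usually defaults to int64 on 64-bit Linux and macOS
arr = np.array([1, 2, 3], dtype=np.int32)  # Uses half the memory of int64
```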
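Choosing the narrowest type requires knowing the value range. This sketch uses the standard-library array module, whose typecodes 'b', 'h', 'i', 'q' are signed integers of increasing width (the exact widths are platform-dependent, which is why it reads itemsize), to pick the smallest type that fits. It is similar in spirit to pandas' pd.to_numeric(..., downcast='integer'); smallest_int_typecode is a hypothetical helper.

```python
from array import array

def smallest_int_typecode(values):
    """Hypothetical helper: narrowest signed array typecode that fits all values."""
    lo, hi = min(values), max(values)
    for code in "bhiq":  # signed char, short, int, long long
        bits = array(code).itemsize * 8
        if -(2 ** (bits - 1)) <= lo and hi <= 2 ** (bits - 1) - 1:
            return code
    raise OverflowError("values do not fit in a 64-bit signed integer")

values = [0, 1_000, 30_000]
code = smallest_int_typecode(values)
packed = array(code, values)
print(code, packed.itemsize)  # h 2
```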

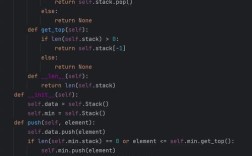

Solution 3: Identify and Fix Memory Leaks

- Use tracemalloc as described above to pinpoint the source of the leak.
- Break circular references: Set the attributes that form the cycle (or the objects themselves) to None when you're done with them, so reference counting can free them without waiting for the garbage collector.
- Use weak references (weakref): For caches, you can use weakref.WeakValueDictionary so that items are automatically removed from the cache when they are no longer referenced elsewhere in your program.
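A minimal sketch of the weak-reference cache idea. Image is a hypothetical class standing in for any large object; instances of ordinary Python classes support weak references, but built-ins such as list and dict do not.

```python
import gc
import weakref

class Image:
    # Hypothetical large object
    def __init__(self, name):
        self.name = name
        self.pixels = bytearray(1024)

cache = weakref.WeakValueDictionary()

img = Image("logo")
cache["logo"] = img
print(len(cache))  # 1: the object is still referenced by `img`

del img            # drop the last strong reference
gc.collect()       # CPython frees it immediately; collect() covers other interpreters
print(len(cache))  # 0: the entry disappeared with the object
```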

Solution 4: Use Generators Instead of Lists

Generators (yield) produce items one at a time and don't store the entire sequence in memory. They're a good fit when you only need to loop over the values once, since a generator can't be rewound or indexed.

```python
# Memory-intensive list comprehension
def create_list(n):
    return [i * 2 for i in range(n)]

# Memory-efficient generator
def create_generator(n):
    for i in range(n):
        yield i * 2

# Using the list
# my_list = create_list(1_000_000_000)  # Will use a lot of memory

# Using the generator
# for value in create_generator(1_000_000_000):
#     # Only one value is in memory at a time
#     pass
```
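sys.getsizeof makes the difference visible. It counts only the container itself (the list's slot array, or the generator object with its suspended frame), not the int objects the list also holds, so the real gap is even larger than it shows.

```python
import sys

def create_list(n):
    return [i * 2 for i in range(n)]

def create_generator(n):
    for i in range(n):
        yield i * 2

for n in (1_000, 1_000_000):
    print(f"n={n:>9}: list {sys.getsizeof(create_list(n)):>9} bytes, "
          f"generator {sys.getsizeof(create_generator(n))} bytes")

# A generator can be consumed only once:
gen = create_generator(4)
print(sum(gen))  # 12
print(sum(gen))  # 0, because the generator is already exhausted
```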

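Solution 5: Optimize for Libraries (e.g., TensorFlow/PyTorch)

If you're using deep learning frameworks, you might be running out of GPU memory rather than system RAM. In that case the error is a CUDA out-of-memory error raised by the framework, not Python's MemoryError, and the solution is often different (the examples below use PyTorch):

- Use model.to('cpu') to move the model to the CPU (this frees GPU memory at the cost of speed).
- Use torch.cuda.empty_cache() to release cached GPU memory that PyTorch is holding but no longer using; it does not free tensors you still reference.
- Reduce the batch_size during training.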

Solution 6: Scale Up (The Hardware Fix)

If your algorithm is correct and memory-efficient, but the dataset is simply too large for your machine, the ultimate solution is to get more RAM. For truly massive datasets, consider out-of-core processing or distributed computing frameworks like Dask or Spark.
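As a small taste of out-of-core processing with only the standard library, mmap lets you scan a file that is larger than you'd want to load: the operating system pages the data in on demand and can drop those clean pages again under memory pressure, so your process never builds one giant Python object. count_lines is an illustrative helper, not a replacement for Dask or Spark.

```python
import mmap
import os
import tempfile

def count_lines(path):
    """Count newline bytes in a file without reading it into a Python object."""
    with open(path, "rb") as f:
        if os.fstat(f.fileno()).st_size == 0:
            return 0  # mmap cannot map an empty file
        with mmap.mmap(f.fileno(), 0, access=mmap.ACCESS_READ) as mm:
            count = 0
            pos = mm.find(b"\n")
            while pos != -1:
                count += 1
                pos = mm.find(b"\n", pos + 1)
            return count

with tempfile.NamedTemporaryFile("wb", delete=False) as tmp:
    tmp.write(b"first\nsecond\nthird\n")
print(count_lines(tmp.name))  # 3
os.remove(tmp.name)
```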

Summary: A Troubleshooting Checklist

- Isolate: Create a minimal script that reproduces the error.
- Profile: Use memory_profiler or tracemalloc to find the exact line or object causing the high memory usage.
- Analyze: Is it a large file? A leak? An inefficient data type?
- Fix:
  - For large files: Process in chunks.
  - For leaks: Find the source with tracemalloc and fix it.
  - For large objects: Use more efficient types or generators.
  - For libraries: Check their specific memory management options.
- Scale: If all else fails, consider more hardware or distributed computing.