Of course! pomegranate is a fantastic and powerful Python library for probabilistic modeling. It's particularly known for its speed, ease of use, and the fact that it's built on top of NumPy, making it very efficient for scientific computing.

Here's a comprehensive guide covering what it is, why you'd use it, its key features, and code examples.

What is Pomegranate?

At its core, Pomegranate is a library for probabilistic modeling. It provides a wide range of models that can be used to:

- Model data: Understand the underlying distribution or patterns in your data.

- Make predictions: Classify new data points or predict future values.

- Perform inference: Answer questions about the data given the model.

Its key strengths are:

- Speed: It's often significantly faster than scikit-learn for certain tasks, especially those involving Naive Bayes and Hidden Markov Models (HMMs). This is because it's implemented in Cython and optimized for performance.

- Unified API: All models in the library share a similar, intuitive API (

model.fit(),model.predict(),model.probability()), making it easy to switch between different models. - Flexibility: You can build complex models by combining simpler ones (e.g., a Generalized Mixture Model).

- Focus on Probabilistic Models: It excels at models that are based on probability theory, like Naive Bayes, HMMs, and Bayesian Networks.

Installation

You can install pomegranate using pip. It's a good idea to also install NumPy and SciPy, as they are its core dependencies.

pip install pomegranate

Core Concepts and Key Models

Let's go through some of the most important models in pomegranate with code examples.

Naive Bayes

This is a classic classification algorithm based on Bayes' theorem with a "naive" assumption of independence between features. Pomegranate is famous for its highly optimized Naive Bayes implementations.

Use Case: Text classification, spam detection, medical diagnosis.

Example: Gaussian Naive Bayes This assumes the features follow a normal (Gaussian) distribution. It's great for continuous numerical data.

import numpy as np

from pomegranate import NaiveBayes, NormalDistribution

# 1. Create some sample data

# Class 0: centered around (0, 0)

X0 = np.random.randn(1000, 2)

y0 = np.zeros(1000)

# Class 1: centered around (5, 5)

X1 = np.random.randn(1000, 2) + [5, 5]

y1 = np.ones(1000)

X = np.vstack([X0, X1])

y = np.hstack([y0, y1])

# 2. Define the model components

# Each class is modeled by a multivariate distribution (here, two independent Gaussians)

d0 = NormalDistribution([0, 0], [[1, 0], [0, 1]])

d1 = NormalDistribution([5, 5], [[1, 0], [0, 1]])

# 3. Create and train the Naive Bayes model

model = NaiveBayes([d0, d1])

model.fit(X, y)

# 4. Make predictions

# A new point near the origin should be classified as 0

print("Prediction for (0, 0):", model.predict(np.array([[0, 0]]))) # Expected: [0]

# A new point near (5, 5) should be classified as 1

print("Prediction for (5, 5):", model.predict(np.array([[5, 5]]))) # Expected: [1]

# Get the probabilities of belonging to each class

probs = model.predict_proba(np.array([[0, 0]]))

print("Probabilities for (0, 0):\n", probs)

Hidden Markov Models (HMMs)

HMMs are powerful for modeling sequential data. They assume the system being modeled is a Markov process with hidden (unobservable) states.

Use Case: Speech recognition, part-of-speech tagging, bioinformatics (gene finding), financial market analysis.

Example: A Simple Weather Model Let's model the weather (hidden states) based on what we observe (activities).

- Hidden States:

Sunny,Rainy - Observations:

walk,shop,clean

from pomegranate import HiddenMarkovModel, State, DiscreteDistribution

# 1. Define the hidden states and their emission probabilities

# Sunny state

sunny_dist = DiscreteDistribution({'walk': 0.6, 'shop': 0.3, 'clean': 0.1})

sunny = State(sunny_dist, name='sunny')

# Rainy state

rainy_dist = DiscreteDistribution({'walk': 0.1, 'shop': 0.4, 'clean': 0.5})

rainy = State(rainy_dist, name='rainy')

# 2. Create the HMM model

model = HiddenMarkovModel('Weather')

# 3. Add the states to the model

model.add_states(sunny, rainy)

# 4. Add transitions between states

# Start probability: more likely to start sunny

model.add_transition(model.start, sunny, 0.8)

model.add_transition(model.start, rainy, 0.2)

# Transition probabilities

# It's more likely to stay sunny than become rainy

model.add_transition(sunny, sunny, 0.7)

model.add_transition(sunny, rainy, 0.3)

# It's more likely to stay rainy than become sunny

model.add_transition(rainy, rainy, 0.6)

model.add_transition(rainy, sunny, 0.4)

# 5. "Bake" the model to finalize the structure

model.bake()

# 6. Use the model

# Calculate the probability of a sequence of observations

sequence = ['walk', 'shop', 'clean', 'walk']

probability = model.probability(sequence)

print(f"Probability of sequence {sequence}: {probability:.4f}")

# Find the most likely sequence of hidden states (the Viterbi path)

# This answers "What was the most likely weather sequence that led to these activities?"

path, path_probability = model.viterbi(sequence)

print(f"Most likely hidden state path: {path}")

Generalized Mixture Models (GMM)

A Mixture Model is a probabilistic model for representing the presence of subpopulations within an overall population. Unlike K-Means, which gives "hard" assignments, GMMs give "soft" assignments (probabilities) of belonging to each cluster.

Use Case: Clustering, density estimation, anomaly detection.

Example: Clustering Data

import numpy as np

from pomegranate import GeneralMixtureModel, NormalDistribution

# 1. Create sample data from three different distributions

X1 = np.random.normal([0, 0], [[1, 0], [0, 1]], 500)

X2 = np.random.normal([5, 5], [[1, 0], [0, 1]], 500)

X3 = np.random.normal([-5, 5], [[1, 0], [0, 1]], 500)

X = np.vstack([X1, X2, X3])

# 2. Create a GMM with 3 components

# Each component is a multivariate Gaussian distribution

gmm = GeneralMixtureModel.from_samples(

NormalDistribution,

n_components=3,

X=X

)

# 3. Predict cluster assignments for each data point

# predict() returns the index of the most likely component (hard clustering)

labels = gmm.predict(X)

print("First 10 hard labels:", labels[:10])

# predict_proba() returns the probability of belonging to each component (soft clustering)

probs = gmm.predict_proba(X)

print("First 10 soft probabilities:\n", probs[:10])

# 4. Generate new data samples from the learned model

new_samples = gmm.sample(10)

print("10 new samples from the GMM:\n", new_samples)

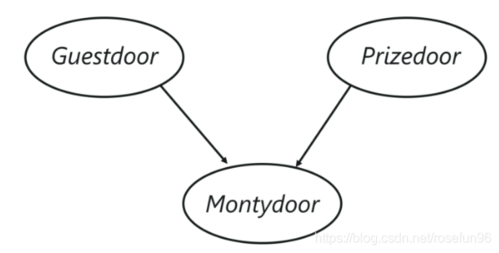

Bayesian Networks

Bayesian Networks are a type of probabilistic graphical model that represent a set of variables and their conditional dependencies via a directed acyclic graph (DAG).

Use Case: Causal inference, risk analysis, decision support systems.

Example: A Simple "Student" Network

This models the relationship of a student's exam grade (Grade) based on their intelligence (Intelligence) and the difficulty of the exam (Difficulty).

from pomegranate import BayesianNetwork, DiscreteDistribution, Node

# 1. Define the probability distributions for each node

# Difficulty: Easy or Hard

difficulty_dist = DiscreteDistribution({'easy': 0.6, 'hard': 0.4})

# Intelligence: High or Low

intelligence_dist = DiscreteDistribution({'high': 0.7, 'low': 0.3})

# Grade: A, B, or C. Its probability depends on Difficulty and Intelligence.

# P(Grade | Difficulty, Intelligence)

grade_dist = DiscreteDistribution({

('easy', 'high'): {'A': 0.7, 'B': 0.2, 'C': 0.1},

('easy', 'low'): {'A': 0.4, 'B': 0.4, 'C': 0.2},

('hard', 'high'): {'A': 0.2, 'B': 0.5, 'C': 0.3},

('hard', 'low'): {'A': 0.05, 'B': 0.25, 'C': 0.7}

})

# 2. Create nodes

difficulty_node = Node(difficulty_dist, name='difficulty')

intelligence_node = Node(intelligence_dist, name='intelligence')

grade_node = Node(grade_dist, name='grade')

# 3. Create the Bayesian Network

model = BayesianNetwork("Student Model")

model.add_nodes(difficulty_node, intelligence_node, grade_node)

# 4. Define the edges (conditional dependencies)

# Grade depends on Difficulty and Intelligence

model.add_edge(difficulty_node, grade_node)

model.add_edge(intelligence_node, grade_node)

# 5. Bake the model

model.bake()

# 6. Use the model for inference

# What is the probability of getting an 'A'?

print("P(Grade = A):", model.probability({'grade': 'A'}))

# What is the probability of the exam being 'hard' given that the grade was a 'C'?

# This is a conditional query.

print("P(Difficulty = hard | Grade = C):", model.predict_proba({'grade': 'C'})['difficulty']['hard'])

Pomegranate vs. Scikit-learn

This is a common point of comparison.

| Feature | Pomegranate | Scikit-learn |

|---|---|---|

| Primary Focus | Probabilistic models and inference. A broader, general-purpose machine learning library. | |

| Speed | Often much faster, especially for Naive Bayes and HMMs, due to Cython backend. | Very fast, but may not match pomegranate's specialized optimizations for certain models. |

| API | Unified, probabilistic-first API (fit, predict, probability, sample). |

Unified, but more general-purpose API. Some models have different parameter names. |

| Models | Strong in Naive Bayes, HMMs, Bayesian Networks, GMMs. | Huge variety: SVMs, Random Forests, Gradient Boosting, PCA, etc. |

| Flexibility | Excellent for building complex probabilistic models by combining simpler ones. | More focused on standalone models. Pipelines are a key feature for chaining steps. |

| Learning Curve | Can be steeper if you're new to probabilistic concepts. | Generally considered more accessible for beginners in ML. |

When to choose Pomegranate:

- Your problem is inherently probabilistic.

- You are working with sequential data (HMMs).

- You need a fast Naive Bayes implementation.

- You want to perform Bayesian inference on a graphical model.

When to choose Scikit-learn:

- You need a wide variety of standard ML algorithms (SVMs, Trees, etc.).

- You are building a standard machine learning pipeline.

- You are a beginner and want a more general-purpose tool.

Summary

Pomegranate is a specialized, high-performance library that should be in your toolbox if you work with probabilistic models. Its speed and elegant API make it a joy to use for tasks like Naive Bayes classification, Hidden Markov Modeling, and Bayesian Network analysis. While scikit-learn is the go-to for general-purpose ML, pomegranate shines when the problem is deeply rooted in probability and statistics.