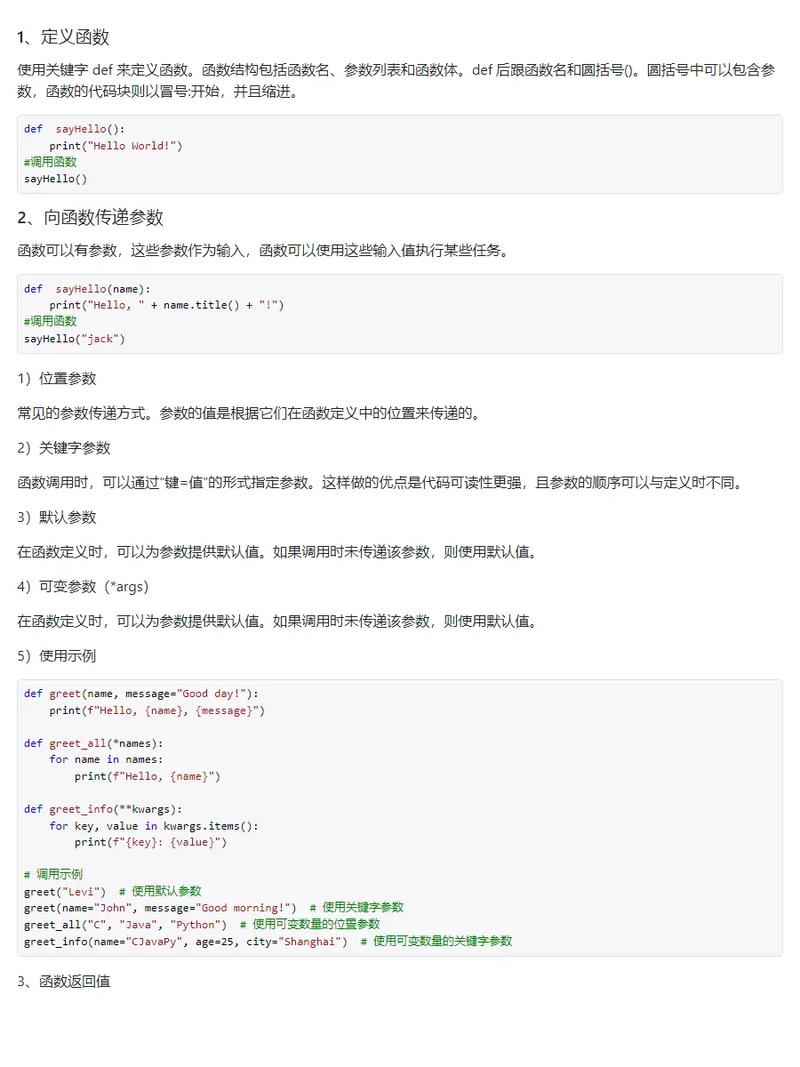

Of course! Let's break down how to handle iframe elements using Python's urllib library.

First, a crucial point: urllib is a low-level library. It's excellent for fetching raw HTML from a URL, but it doesn't understand the structure of HTML (like tags, classes, or iframes). To parse the HTML and find iframes, you need to combine urllib with an HTML parser like Beautiful Soup.

Here’s a complete guide, from the basic concept to a practical, robust example.

The Core Concept: A Two-Step Process

- Fetch the Content: Use `urllib.request` to download the HTML of the webpage. You get a block of text.
- Parse the Content: Use an HTML parser (like Beautiful Soup) to read that text, find all `<iframe>` tags, and extract the `src` attribute, which contains the URL of the embedded content.
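
To make the parse step concrete without installing anything, here is a minimal sketch that uses the standard library's `html.parser` in place of Beautiful Soup. The class name `IframeSrcCollector`, the helper `find_iframe_sources`, and the `sample_html` string are made up for illustration; the sample stands in for the text that the fetch step would return.

```python
from html.parser import HTMLParser


class IframeSrcCollector(HTMLParser):
    """Collects the src attribute of every <iframe> start tag it sees."""

    def __init__(self):
        super().__init__()
        self.sources = []  # one entry per iframe; None when src is missing

    def handle_starttag(self, tag, attrs):
        # html.parser lowercases tag and attribute names before calling this.
        if tag == "iframe":
            self.sources.append(dict(attrs).get("src"))


def find_iframe_sources(html_text):
    """Return the src values of all iframes in an HTML string."""
    collector = IframeSrcCollector()
    collector.feed(html_text)
    collector.close()
    return collector.sources


# Hypothetical stand-in for the HTML returned by the fetch step.
sample_html = """
<html><body>
  <iframe src="demo_iframe.htm" title="Demo"></iframe>
  <IFRAME SRC="https://example.com/embed"></IFRAME>
  <iframe title="No source yet"></iframe>
</body></html>
"""

print(find_iframe_sources(sample_html))
# ['demo_iframe.htm', 'https://example.com/embed', None]
```

Beautiful Soup does the same job with less code and copes better with broken markup, which is why the rest of this guide uses it.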

Step 1: Install Necessary Libraries

You'll need beautifulsoup4 and lxml (a fast and forgiving parser).

    pip install beautifulsoup4
    pip install lxml
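
If `lxml` might not be available on every machine the script runs on, one option is to fall back to Python's built-in `html.parser`. The sketch below only picks the parser name; `pick_parser` is a hypothetical helper, not part of Beautiful Soup.

```python
import importlib.util


def pick_parser():
    """Return 'lxml' when that package is importable, else the built-in parser."""
    if importlib.util.find_spec("lxml") is not None:
        return "lxml"
    return "html.parser"


parser_name = pick_parser()
print(f"Beautiful Soup would be asked to use: {parser_name}")
# Later in the script: soup = BeautifulSoup(html_content, parser_name)
```

The two parsers can build slightly different trees from badly formed HTML, so results on messy pages may not be identical.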

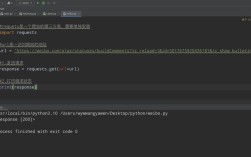

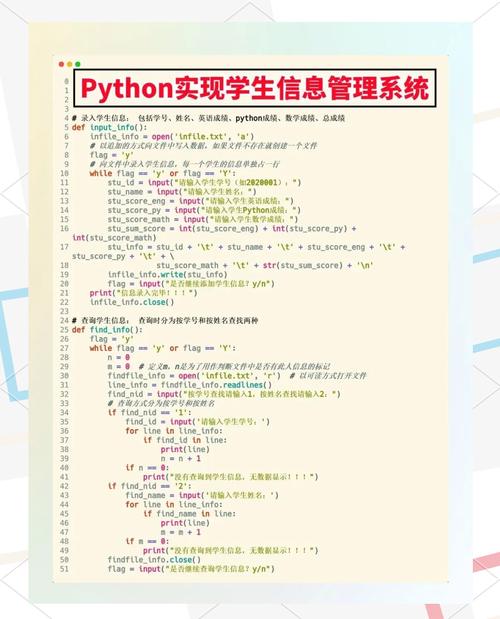

Step 2: The Basic Code (Fetching and Parsing)

This script will fetch the HTML from a URL, find all iframes, and print their source URLs.

    import urllib.error
    import urllib.parse
    import urllib.request

    from bs4 import BeautifulSoup

    # The URL of the page you want to scrape.
    # Let's use a page that is known to have iframes for this example.
    url = 'https://www.w3schools.com/html/html_iframe.asp'

    try:
        # Step 1: Fetch the HTML content of the page
        # We use a User-Agent to mimic a real browser, which can help avoid blocks
        headers = {'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/58.0.3029.110 Safari/537.3'}
        req = urllib.request.Request(url, headers=headers)
        with urllib.request.urlopen(req) as response:
            html_content = response.read()

        # Step 2: Parse the HTML and find iframes
        # The 'lxml' parser is fast and recommended. You can also use 'html.parser'.
        soup = BeautifulSoup(html_content, 'lxml')

        # Find all <iframe> tags in the parsed HTML
        iframes = soup.find_all('iframe')

        # Check if any iframes were found
        if iframes:
            print(f"Found {len(iframes)} iframe(s) on the page.")
            print("-" * 30)

            # Loop through each iframe found
            for i, iframe in enumerate(iframes):
                # The 'src' attribute contains the URL of the embedded content
                iframe_src = iframe.get('src')
                if iframe_src:
                    print(f"Iframe #{i+1}:")
                    print(f"  Source URL: {iframe_src}")
                    print(f"  Full URL (if relative): {urllib.parse.urljoin(url, iframe_src)}")
                else:
                    print(f"Iframe #{i+1} found, but it has no 'src' attribute.")
        else:
            print("No iframes found on this page.")

    except urllib.error.URLError as e:
        print(f"Error fetching the URL: {e}")
    except Exception as e:
        print(f"An unexpected error occurred: {e}")

Explanation of the Code:

- `import urllib.request` and `from bs4 import BeautifulSoup`: Imports the necessary modules.
- `headers = {'User-Agent': ...}`: Many websites block requests that don't look like they're coming from a standard web browser. Setting a `User-Agent` header helps prevent your script from being blocked.
- `urllib.request.Request(url, headers=headers)`: Creates a request object with the specified headers.
- `with urllib.request.urlopen(req) as response:`: Opens the URL and reads the response. The `with` statement ensures the connection is properly closed.
- `html_content = response.read()`: Reads the raw HTML data from the response.
- `soup = BeautifulSoup(html_content, 'lxml')`: Creates a BeautifulSoup object, which parses the HTML and allows for easy searching.
- `iframes = soup.find_all('iframe')`: This is the key line. It searches the parsed HTML for every tag named `iframe` and returns a list of all found elements.
- `iframe.get('src')`: For each `iframe` element, this safely retrieves the value of its `src` attribute.
- `urllib.parse.urljoin(url, iframe_src)`: This is a very useful function. If the `src` is a relative URL (e.g., `/path/to/page.html`), `urljoin` combines it with the base `url` to create a full, absolute URL (e.g., `https://www.w3schools.com/path/to/page.html`).
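
To see how `urljoin` treats the different shapes a `src` value can take, here is a small standalone sketch. The `src` strings in `examples` are hypothetical; only the base URL comes from the script above.

```python
from urllib.parse import urljoin

base_url = "https://www.w3schools.com/html/html_iframe.asp"

# Hypothetical src values covering the common shapes.
examples = [
    "/path/to/page.html",          # root-relative
    "demo_iframe.htm",             # relative to the current directory
    "//example.com/embed",         # protocol-relative, inherits https
    "https://example.org/player",  # already absolute, returned unchanged
]

resolved = [urljoin(base_url, src) for src in examples]
for src, full in zip(examples, resolved):
    print(f"{src!r:32} -> {full}")
# '/path/to/page.html'             -> https://www.w3schools.com/path/to/page.html
# 'demo_iframe.htm'                -> https://www.w3schools.com/html/demo_iframe.htm
# '//example.com/embed'            -> https://example.com/embed
# 'https://example.org/player'     -> https://example.org/player
```

Because absolute URLs pass through unchanged, it is safe to call `urljoin` on every `src` without first checking whether it is relative.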

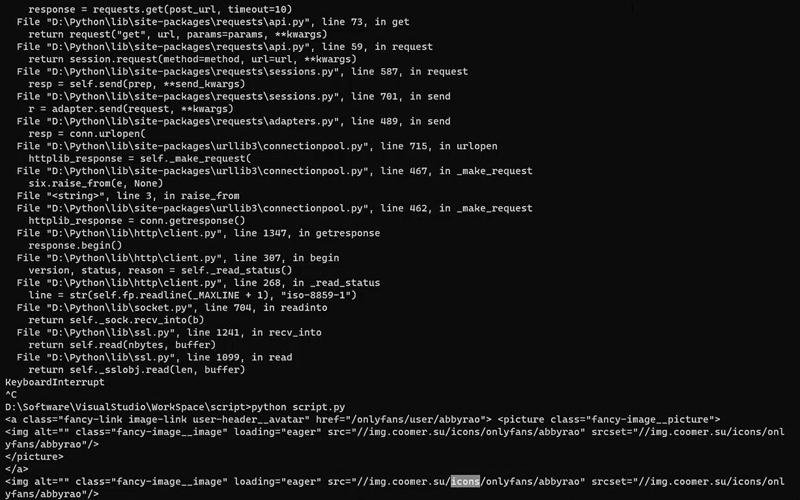

Step 3: Advanced Handling (JavaScript-Rendered Content)

A major limitation of urllib + Beautiful Soup is that they do not execute JavaScript. Many modern websites use JavaScript to dynamically load content, including the src attribute of iframes.

If you try to scrape a site like this, you will find <iframe> tags, but their src attributes might be empty (src="") or contain a generic placeholder. The real URL is set by JavaScript after the page loads.

To solve this, you need a tool that can drive a real web browser and let the page's JavaScript run. A widely used library for this in Python is Selenium.

Selenium Example

First, install Selenium and a WebDriver (e.g., for Chrome).

    pip install selenium
    # Make sure you have Chrome installed and the latest ChromeDriver
    # You can manage drivers with libraries like webdriver-manager
    pip install webdriver-manager

Here’s how you'd do the same task with Selenium:

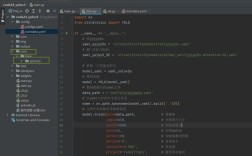

    from selenium import webdriver
    from selenium.webdriver.chrome.service import Service
    from webdriver_manager.chrome import ChromeDriverManager
    from bs4 import BeautifulSoup
    import time

    url = 'https://www.w3schools.com/html/html_iframe.asp'

    # Setup Selenium WebDriver
    service = Service(ChromeDriverManager().install())
    driver = webdriver.Chrome(service=service)

    try:
        print(f"Fetching {url} with Selenium...")
        driver.get(url)

        # Wait for a few seconds to allow JavaScript to execute
        # This is a simple wait; for more robust scripts, use WebDriverWait
        print("Waiting for JavaScript to render content...")
        time.sleep(5)

        # Get the page source AFTER JavaScript has run
        html_content = driver.page_source

        # Now parse the HTML with Beautiful Soup (same as before)
        soup = BeautifulSoup(html_content, 'lxml')
        iframes = soup.find_all('iframe')

        if iframes:
            print(f"\nFound {len(iframes)} iframe(s) on the page.")
            print("-" * 30)
            for i, iframe in enumerate(iframes):
                iframe_src = iframe.get('src')
                if iframe_src:
                    print(f"Iframe #{i+1}:")
                    print(f"  Source URL: {iframe_src}")
                else:
                    print(f"Iframe #{i+1} found, but its 'src' is still empty. JS might be blocking it.")
        else:
            print("\nNo iframes found.")

    finally:
        # Always close the browser window
        driver.quit()
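
A fixed `time.sleep(5)` either wastes time or is too short. Selenium's own `WebDriverWait` is the usual tool for waiting on a condition. The sketch below spells out the same polling idea as a plain loop so the mechanics are visible. `wait_for_iframe_srcs` is a hypothetical helper, and it assumes only that `driver` exposes `find_elements(by, value)` and that elements expose `get_attribute(name)`, as Selenium 4 drivers and elements do.

```python
import time


def wait_for_iframe_srcs(driver, timeout=10.0, poll_interval=0.5):
    """Poll until at least one iframe has a non-empty src, or time runs out.

    Returns the list of src strings found (empty if the timeout was reached).
    """
    deadline = time.monotonic() + timeout
    while True:
        # "tag name" is the locator strategy string behind By.TAG_NAME.
        frames = driver.find_elements("tag name", "iframe")
        sources = [frame.get_attribute("src") for frame in frames]
        sources = [src for src in sources if src]  # drop None and ""
        if sources or time.monotonic() >= deadline:
            return sources
        time.sleep(poll_interval)
```

In the script above, `sources = wait_for_iframe_srcs(driver, timeout=10)` could take the place of `time.sleep(5)`. This sketch does not handle elements that go stale between lookup and attribute read; `WebDriverWait` ignores some such errors by default and is the safer choice in real scripts.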

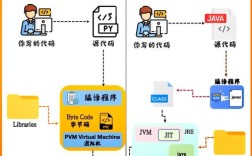

Summary: urllib vs. Selenium

| Feature | urllib + Beautiful Soup | Selenium |
|---|---|---|
| How it Works | Fetches raw HTML from the server. | Controls a real web browser (Chrome, Firefox, etc.). |
| JavaScript | ❌ No. Cannot execute JS. | ✅ Yes. Executes all JS, just like a real user. |
| Speed | Very Fast. Ideal for large-scale scraping of simple sites. | Slow. Starts a browser, which is resource-intensive. |
| Use Case | Perfect for websites where the content (including iframe src) is present in the initial HTML response. | Necessary for modern, dynamic websites that rely on JavaScript to load content. |
| Dependencies | urllib (built-in), beautifulsoup4, lxml | selenium, webdriver-manager, a browser driver. |

Recommendation:

- Start with `urllib` and `Beautiful Soup`. It's simpler and much faster.
- If you find that the iframe `src` attributes are empty or incorrect, switch to `Selenium`. This is the most common sign that JavaScript is involved.
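
The "empty or incorrect `src`" check can be automated as a rough first pass. The sketch below is a heuristic only, written with the standard library so it runs on its own. The function name `iframes_look_js_driven` and the `PLACEHOLDER_SRCS` set are assumptions made for illustration, not an established rule.

```python
from html.parser import HTMLParser

# Values treated as "no real source"; this set is an assumption, adjust as needed.
PLACEHOLDER_SRCS = {"", "about:blank", "#"}


class _IframeSrcs(HTMLParser):
    """Records the src value (or None) of each <iframe> encountered."""

    def __init__(self):
        super().__init__()
        self.sources = []

    def handle_starttag(self, tag, attrs):
        if tag == "iframe":
            self.sources.append(dict(attrs).get("src"))


def iframes_look_js_driven(html_text):
    """True when at least one iframe exists without a usable src."""
    parser = _IframeSrcs()
    parser.feed(html_text)
    parser.close()
    return any(
        src is None or src.strip().lower() in PLACEHOLDER_SRCS
        for src in parser.sources
    )


print(iframes_look_js_driven('<iframe src=""></iframe>'))                  # True
print(iframes_look_js_driven('<iframe src="https://example.com/x"></iframe>'))  # False
```

A `True` result suggests retrying the page with Selenium. The check has blind spots: an iframe that legitimately uses `srcdoc` instead of `src` is flagged, and a page whose iframes are inserted entirely by JavaScript contains no `<iframe>` tags in the raw HTML, so it returns `False`.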