Of course! "SimpleCache" in Python usually refers to a simple, in-memory caching mechanism. It's a fantastic way to speed up your application by storing the results of expensive function calls and returning the cached result when the same inputs occur again.

Here's a breakdown, starting with the simplest manual approach and moving to more robust and Pythonic solutions.

The Simplest Manual Cache (Using a Dictionary)

This is the most basic form of caching. You manually create a dictionary to store results.

How it works:

- Create a dictionary to act as your cache.

- Before running a function's logic, check if the input (key) is already in the cache.

- If it is, return the cached value.

- If not, run the logic, store the result in the cache, and then return it.

Example: Caching a Fibonacci calculation

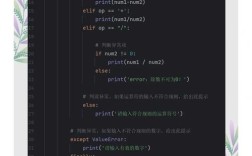

```python
# The cache dictionary: maps n -> fibonacci(n)
fib_cache = {}

def fibonacci_manual(n):
    """
    Calculates the nth Fibonacci number with a manual cache.
    """
    # Check if the result is already in the cache
    if n in fib_cache:
        return fib_cache[n]

    print(f"Calculating fibonacci({n})...")  # Printed only when real work happens

    # Base cases, otherwise recurse
    if n <= 1:
        result = n
    else:
        result = fibonacci_manual(n - 1) + fibonacci_manual(n - 2)

    fib_cache[n] = result  # Store the result in the cache
    return result

# --- Let's test it ---
print(f"fibonacci(10) = {fibonacci_manual(10)}")

print("\n--- Running it again ---")
print(f"fibonacci(10) = {fibonacci_manual(10)}")
```

Output:

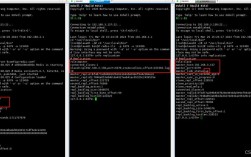

```
Calculating fibonacci(10)...
Calculating fibonacci(9)...
Calculating fibonacci(8)...
Calculating fibonacci(7)...
Calculating fibonacci(6)...
Calculating fibonacci(5)...
Calculating fibonacci(4)...
Calculating fibonacci(3)...
Calculating fibonacci(2)...
Calculating fibonacci(1)...
Calculating fibonacci(0)...
fibonacci(10) = 55

--- Running it again ---
fibonacci(10) = 55
```

Notice that on the second run, no "Calculating..." messages appear, because the result is returned straight from fib_cache.

Pros:

- Easy to understand the core concept of caching.

Cons:

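- Not thread-safe. The check-then-store sequence is not atomic, so if several threads use fib_cache at once you can get race conditions, such as two threads missing on the same key and both computing it.

- Never cleared automatically. The cache grows indefinitely and holds its memory for the life of the program.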

- Verbose. You have to add the caching logic to every function you want to cache.
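The thread-safety and verbosity drawbacks can be softened without giving up the dictionary. The sketch below assumes positional, hashable arguments only; memoize is a hypothetical helper written for illustration, not a standard-library function. It keeps the dictionary logic in one place and guards it with a threading.Lock. Like lru_cache, it keeps the dictionary consistent under concurrent calls but may occasionally compute the same value twice, and it is still unbounded.

```python
import functools
import threading


def memoize(func):
    """Hypothetical helper: cache func's results in a dict keyed by its positional args."""
    cache = {}
    lock = threading.Lock()

    @functools.wraps(func)
    def wrapper(*args):
        # Look up under the lock so a read never overlaps a write from another thread
        with lock:
            if args in cache:
                return cache[args]
        # Compute outside the lock: recursive calls re-enter wrapper and would
        # deadlock on a non-reentrant Lock. Two threads may both compute the
        # same value here; the later store simply overwrites the earlier one.
        result = func(*args)
        with lock:
            cache[args] = result
        return result

    wrapper.cache = cache  # expose the dict for inspection or manual clearing
    return wrapper


@memoize
def fibonacci(n):
    return n if n <= 1 else fibonacci(n - 1) + fibonacci(n - 2)


print(fibonacci(30))  # 832040
```

Releasing the lock before calling func is the key design choice: holding it during the call would serialize all work and deadlock on the first recursive call.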

The Pythonic Way: functools.lru_cache

For almost all general-purpose caching needs in Python, you should use the built-in functools.lru_cache. It's a decorator that handles all the hard work for you.

The "LRU" in lru_cache stands for "Least Recently Used": once the cache holds its maximum number of results, storing a new one discards the entry that has gone the longest without being used.
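To see the eviction order concretely, here is a small sketch with a deliberately tiny cache. The square function and the calls list are illustrative names; calls records every time the function body actually runs, so cache hits leave no trace in it.

```python
import functools

calls = []  # records the inputs for which the function body actually ran


@functools.lru_cache(maxsize=2)  # room for only two results
def square(n):
    calls.append(n)
    return n * n


square(1)  # miss: cache holds 1
square(2)  # miss: cache holds 1, 2
square(1)  # hit: 1 becomes the most recently used entry
square(3)  # miss: cache is full, so 2 (least recently used) is evicted
square(1)  # hit: 1 is still cached
square(2)  # miss: 2 was evicted, so it is recomputed (evicting 3)

print(calls)                # [1, 2, 3, 2]
print(square.cache_info())  # CacheInfo(hits=2, misses=4, maxsize=2, currsize=2)
```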

How to use it:

Add @functools.lru_cache() directly above your function definition. The optional maxsize argument sets the maximum number of results to keep (the default is 128). If maxsize is set to None, eviction is turned off and the cache can grow without limit.
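A short sketch of the unbounded case, using an illustrative cube function: with maxsize=None every distinct input stays cached, and cache_clear() is how you release that memory.

```python
import functools


@functools.lru_cache(maxsize=None)  # no limit: nothing is ever evicted
def cube(n):
    return n ** 3


for i in range(1000):
    cube(i)

info = cube.cache_info()
print(info.maxsize, info.currsize)  # None 1000

cube.cache_clear()  # empties the cache (and resets its statistics)
print(cube.cache_info().currsize)  # 0
```

Since Python 3.9, functools.cache is a shorter spelling of lru_cache(maxsize=None), and since Python 3.8 @functools.lru_cache can also be written without parentheses to get the default maxsize of 128.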

Example: The same Fibonacci function, but much simpler

```python
import functools

@functools.lru_cache(maxsize=128)  # Use a maxsize to prevent memory issues
def fibonacci_lru(n):
    """
    Calculates the nth Fibonacci number using lru_cache.
    """
    print(f"Calculating fibonacci({n})...")

    # Base cases
    if n <= 1:
        return n

    # The recursive call is automatically cached!
    return fibonacci_lru(n - 1) + fibonacci_lru(n - 2)

# --- Let's test it ---
print(f"fibonacci(10) = {fibonacci_lru(10)}")

print("\n--- Running it again ---")
print(f"fibonacci(10) = {fibonacci_lru(10)}")

# You can inspect the cache
print("\n--- Cache Info ---")
print(fibonacci_lru.cache_info())
```

Output:

```
Calculating fibonacci(10)...
Calculating fibonacci(9)...
Calculating fibonacci(8)...
Calculating fibonacci(7)...
Calculating fibonacci(6)...
Calculating fibonacci(5)...
Calculating fibonacci(4)...
Calculating fibonacci(3)...
Calculating fibonacci(2)...
Calculating fibonacci(1)...
Calculating fibonacci(0)...
fibonacci(10) = 55

--- Running it again ---
fibonacci(10) = 55

--- Cache Info ---
CacheInfo(hits=9, misses=11, maxsize=128, currsize=11)
```

- hits: How many times a cached value was returned.

- misses: How many times the function had to be executed (because the result wasn't in the cache).

- maxsize and currsize: The size limit and how many results are currently stored.

The 11 misses are fibonacci(0) through fibonacci(10), each computed exactly once; the 9 hits are the 8 repeated calls during the first run plus the single call on the second run.

Pros:

- Extremely simple to use. Just one line of code.

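- Thread-safe. Concurrent calls cannot corrupt the cache's internal state, although the function may occasionally run more than once for the same arguments.

- Memory efficient. You can set a maxsize to prevent unbounded memory growth.

- Fast. In CPython, the caching wrapper is implemented in C.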

- Built-in. No external libraries needed.

Cons:

- It's an in-memory cache, so data is lost when the program restarts.
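Another practical limitation: lru_cache uses the call's arguments as a dictionary key, so every argument must be hashable. A minimal sketch with illustrative names (total, and a hypothetical safe_total wrapper) showing the failure and a common workaround, converting a list to a tuple:

```python
import functools


@functools.lru_cache(maxsize=None)
def total(values):
    # The arguments become part of the cache key, so they must be hashable
    return sum(values)


def safe_total(values):
    """Hypothetical wrapper: convert a list to a (hashable) tuple before calling."""
    return total(tuple(values))


print(total((1, 2, 3)))       # 6: tuples are hashable
print(safe_total([1, 2, 3]))  # 6: the list is converted first, reusing the same entry

try:
    total([1, 2, 3])  # lists are unhashable, so lru_cache cannot build a key
except TypeError as exc:
    print("TypeError:", exc)
```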

When to Use Other Caching Solutions

While lru_cache is perfect for in-memory function caching, you might need other tools for different scenarios.

| Scenario | Recommended Solution | Why? |
|---|---|---|
| In-memory function caching | @functools.lru_cache | It's the standard, built-in, and most efficient way. |
| Caching across multiple processes | Libraries like diskcache | Each process gets its own separate lru_cache, so nothing is shared between them. diskcache stores the cache on the filesystem, where every process can reach it. |
| Caching web pages/API responses | Framework-specific (e.g., @cache_page in Django) or cachetools | Django's cache_page caches whole view responses and sets HTTP caching headers such as Cache-Control; cachetools provides general-purpose caches, including time-based expiry (TTLCache). |
| Persistent caching (survives restarts) | diskcache or joblib.Memory | These libraries save your cache to disk so it can be reloaded later. |
| Distributed caching (across servers) | Redis or Memcached | These are dedicated, high-performance in-memory data stores that act as a shared cache for a large application. |
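One idea behind the API-response row is that lru_cache has no notion of time, while responses usually need to go stale. As a rough sketch of time-based (TTL) expiry using only the standard library: ttl_cache, fetch_price, and the injectable clock parameter are hypothetical names for illustration, not the API of cachetools or any other library. This sketch is unbounded and not thread-safe.

```python
import functools
import time


def ttl_cache(ttl_seconds, clock=time.monotonic):
    """Hypothetical decorator: reuse a result for ttl_seconds, then recompute it."""
    def decorator(func):
        cache = {}  # maps args -> (expiry_time, result); unbounded in this sketch

        @functools.wraps(func)
        def wrapper(*args):
            now = clock()
            entry = cache.get(args)
            if entry is not None and now < entry[0]:
                return entry[1]  # still fresh: serve the cached result
            result = func(*args)  # missing or expired: call the real function
            cache[args] = (now + ttl_seconds, result)
            return result

        return wrapper
    return decorator


# Usage with a fake clock so the expiry is easy to follow
fake_now = [0.0]
calls = []


@ttl_cache(ttl_seconds=60, clock=lambda: fake_now[0])
def fetch_price(symbol):
    calls.append(symbol)  # stands in for a slow API request
    return 100


fetch_price("ABC")  # t=0: miss, entry expires at t=60
fake_now[0] = 30.0
fetch_price("ABC")  # t=30: hit
fake_now[0] = 61.0
fetch_price("ABC")  # t=61: expired, so the function runs again
print(calls)        # ['ABC', 'ABC']
```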

Summary and Recommendation

For a "simple cache" in Python, your default choice should be functools.lru_cache.

It's the perfect balance of simplicity, performance, and robustness for caching the results of pure functions within a single Python process.
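The "pure functions" condition matters because the cache only looks at the arguments. In this sketch, exchange_rate and convert are hypothetical names: the function also reads a global value, so once that value changes the cache keeps returning an outdated result.

```python
import functools

exchange_rate = 1.0  # hypothetical global setting the function reads


@functools.lru_cache(maxsize=None)
def convert(amount):
    # Impure: the result also depends on exchange_rate, which is not an argument
    return amount * exchange_rate


print(convert(10))  # 10.0
exchange_rate = 2.0
print(convert(10))  # still 10.0: the stale cached result is returned
```

Passing the rate in as an argument instead, for example convert(amount, rate), makes it part of the cache key and removes the problem.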

Use the manual dictionary approach only if you need very fine-grained control that lru_cache doesn't provide, or for learning purposes.
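One example of such fine-grained control is invalidating a single entry. lru_cache exposes cache_info() and cache_clear(), but no way to remove one key, whereas with your own dictionary you can drop exactly the entry that went stale. A sketch with hypothetical load_user and load_user_lru functions standing in for a slow lookup:

```python
import functools

# Manual cache: you own the dict, so single entries can be removed
user_cache = {}


def load_user(user_id):
    if user_id not in user_cache:
        # Hypothetical stand-in for a slow database or API lookup
        user_cache[user_id] = {"id": user_id, "name": f"user-{user_id}"}
    return user_cache[user_id]


load_user(1)
load_user(2)
user_cache.pop(1, None)    # user 1 changed: invalidate only that entry
print(sorted(user_cache))  # [2]


# lru_cache: cache_clear() is all-or-nothing
@functools.lru_cache(maxsize=None)
def load_user_lru(user_id):
    return {"id": user_id, "name": f"user-{user_id}"}


load_user_lru(1)
load_user_lru(2)
load_user_lru.cache_clear()                 # drops user 2 as well
print(load_user_lru.cache_info().currsize)  # 0
```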