Of course! Let's break down convolution in Python, from the core concept to practical implementations using the most popular libraries.

What is Convolution? (The Intuition)

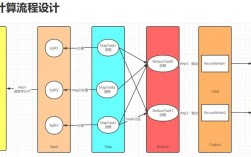

At its heart, convolution is a mathematical operation that slides a "filter" (or "kernel") over an input to produce an output feature map.

Think of it like this:

- Input: An image (represented as a 2D grid of pixel values).

- Filter: A small, square grid of numbers (e.g., 3x3 or 5x5). This filter is designed to detect a specific feature.

- Operation: You place the filter on the top-left corner of the image. You multiply the filter values by the corresponding image pixel values, sum them up, and write that sum in the output grid. Then you slide the filter one pixel to the right and repeat. At the end of a row, you move down one pixel and start again from the left, until you've scanned the entire image.

A Simple Example: Let's say we want to detect horizontal edges in a very simple image.

Image (Input):

[[0, 0, 0, 0, 0],
 [0, 0, 0, 0, 0],
 [1, 1, 1, 1, 1],   <-- A horizontal line
 [0, 0, 0, 0, 0],
 [0, 0, 0, 0, 0]]

Filter (Kernel): This filter is designed to respond strongly when it sees a bright row directly above a dark row.

[[ 1,  1,  1],
 [ 0,  0,  0],
 [-1, -1, -1]]

Convolution Process:

When the filter is centered one row below the line, its top row overlaps the bright pixels and its other rows overlap dark pixels. The calculation is:

(1*1 + 1*1 + 1*1) + (0*0 + 0*0 + 0*0) + (-1*0 + -1*0 + -1*0) = 3 + 0 + 0 = 3

This high value (3) in the output feature map marks the lower edge of the horizontal line. Centered one row above the line, the same filter gives -3 (the upper edge). Centered exactly on the line it gives 0, because the filter's middle row is all zeros.
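The worked example can be checked with a few lines of NumPy. This is a minimal sketch, not a library routine: `slide_filter` is a hypothetical helper name, and it only visits positions where the filter fits entirely inside the image (no padding), so a 5x5 image and a 3x3 filter give a 3x3 output.

```python
import numpy as np

image = np.array([
    [0, 0, 0, 0, 0],
    [0, 0, 0, 0, 0],
    [1, 1, 1, 1, 1],  # the horizontal line
    [0, 0, 0, 0, 0],
    [0, 0, 0, 0, 0],
])

kernel = np.array([
    [ 1,  1,  1],
    [ 0,  0,  0],
    [-1, -1, -1],
])

def slide_filter(image, kernel):
    """Sliding dot product over every position where the kernel fits fully."""
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    out = np.zeros((out_h, out_w), dtype=image.dtype)
    for i in range(out_h):
        for j in range(out_w):
            # Multiply the window by the kernel element-wise, then sum
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out

feature_map = slide_filter(image, kernel)
print(feature_map)
# [[-3 -3 -3]
#  [ 0  0  0]
#  [ 3  3  3]]
```

The row of 3s comes from windows whose top row covers the line, the row of -3s from windows whose bottom row covers it, and the row of 0s from windows centered on the line itself.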

The Mathematical Definition

For a 2D discrete function f (our image) and a 2D kernel g (our filter), the convolution (f * g) at point (i, j) is:

$$(f * g)(i, j) = \sum_{m} \sum_{n} f(i-m, j-n) \cdot g(m, n)$$

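Don't worry too much about the formula. The key takeaway is that it's a sliding dot product. The minus signs in f(i-m, j-n) mean the kernel is flipped in both axes before it slides; for symmetric kernels the flip makes no difference.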
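To make the link between the formula and the "sliding dot product" picture concrete, here is a small sketch that evaluates the double sum directly and compares it with sliding a flipped kernel over a zero-padded input. Both function names are hypothetical, and the code assumes f is zero outside its bounds (which produces the "full" output size).

```python
import numpy as np

def convolve_by_definition(f, g):
    """Evaluate (f * g)(i, j) = sum_m sum_n f(i - m, j - n) * g(m, n) directly."""
    fh, fw = f.shape
    gh, gw = g.shape
    out = np.zeros((fh + gh - 1, fw + gw - 1))
    for i in range(out.shape[0]):
        for j in range(out.shape[1]):
            for m in range(gh):
                for n in range(gw):
                    # Terms where f is indexed out of bounds count as zero
                    if 0 <= i - m < fh and 0 <= j - n < fw:
                        out[i, j] += f[i - m, j - n] * g[m, n]
    return out

def flipped_sliding_dot_product(f, g):
    """Zero-pad f, flip g in both axes, then take a dot product at each offset."""
    gh, gw = g.shape
    padded = np.pad(f, ((gh - 1, gh - 1), (gw - 1, gw - 1)))
    flipped = g[::-1, ::-1]
    out = np.zeros((f.shape[0] + gh - 1, f.shape[1] + gw - 1))
    for i in range(out.shape[0]):
        for j in range(out.shape[1]):
            out[i, j] = np.sum(padded[i:i + gh, j:j + gw] * flipped)
    return out

f = np.arange(12, dtype=float).reshape(3, 4)
g = np.array([[1.0, 2.0], [3.0, 4.0]])

direct = convolve_by_definition(f, g)
sliding = flipped_sliding_dot_product(f, g)
print(np.allclose(direct, sliding))  # True
```

Skipping the flip turns the same loop into cross-correlation, which is the operation the intuition section describes.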

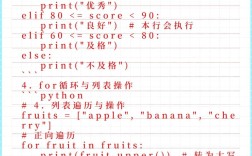

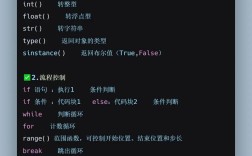

Implementation in Python

You have several options, depending on your needs:

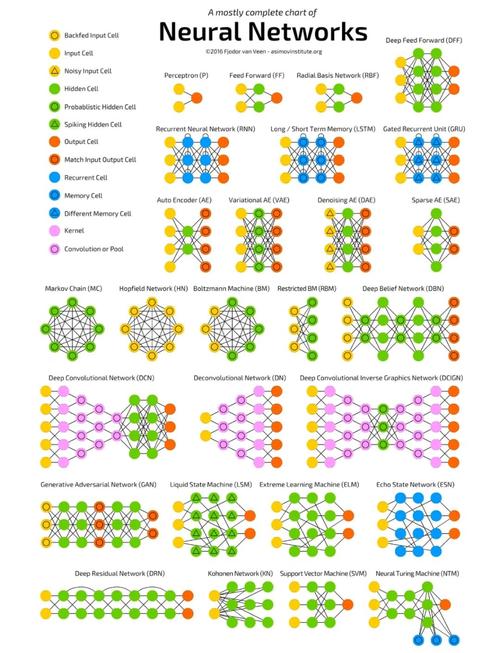

- NumPy: For understanding the fundamentals and doing it manually.

- SciPy: A more efficient and feature-rich implementation than a hand-written NumPy loop.

- Deep Learning Frameworks (PyTorch, TensorFlow): For modern machine learning and computer vision tasks.

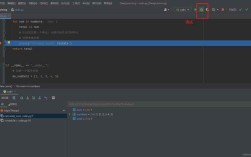

A. NumPy: From Scratch for Understanding

This is the best way to understand what's happening under the hood. We'll implement the sliding window operation ourselves.

import numpy as np

def numpy_convolution(image, kernel):
    """
    Performs a 2D convolution using NumPy.
    Assumes zero 'same' padding, a stride of 1, and odd kernel dimensions.
    """
    # Get dimensions
    image_h, image_w = image.shape
    kernel_h, kernel_w = kernel.shape

    # Flip the kernel in both axes so this is a true convolution
    # (without the flip, the loop below computes cross-correlation)
    flipped_kernel = kernel[::-1, ::-1]

    # With 'same' padding, the output has the same size as the input
    output_h = image_h
    output_w = image_w

    # Create an output array filled with zeros
    output = np.zeros((output_h, output_w))

    # Pad the image with zeros to handle borders
    # For an odd kernel size, kernel_size // 2 equals (kernel_size - 1) / 2
    pad_h = kernel_h // 2
    pad_w = kernel_w // 2
    padded_image = np.pad(image, ((pad_h, pad_h), (pad_w, pad_w)), mode='constant')

    # Perform the convolution
    for i in range(output_h):
        for j in range(output_w):
            # Get the current window from the padded image
            window = padded_image[i:i+kernel_h, j:j+kernel_w]
            # Element-wise multiplication and summation
            output[i, j] = np.sum(window * flipped_kernel)

    return output

# --- Example Usage ---
# A simple 5x5 image with a bright spot in the middle
image = np.array([
    [0, 0, 0, 0, 0],
    [0, 0, 0, 0, 0],
    [0, 0, 5, 0, 0],
    [0, 0, 0, 0, 0],
    [0, 0, 0, 0, 0]
])

# An "identity" filter: it just passes the center pixel through
kernel = np.array([
    [0, 0, 0],
    [0, 1, 0],
    [0, 0, 0]
])

convolved_image = numpy_convolution(image, kernel)

print("Original Image:")
print(image)
print("\nConvolved Image:")
print(convolved_image)

Why is the output the same as the input?

Because our kernel is an "identity" kernel. It's designed to pick out the center pixel of its 3x3 window and ignore the rest. Since we padded the image, the window for output pixel (i, j) is centered on input pixel (i, j), so every value is passed through unchanged.
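The double loop is easy to follow but slow on large images. As a sketch of one way to vectorize it in NumPy alone, the version below uses `numpy.lib.stride_tricks.sliding_window_view` (available in NumPy 1.20 and later) together with `np.einsum`. The function name `conv2d_same_vectorized` is hypothetical, and the code assumes zero padding, a stride of 1, and odd kernel dimensions.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def conv2d_same_vectorized(image, kernel):
    """'Same'-size 2D convolution: zero padding, stride 1, odd kernel sizes."""
    kh, kw = kernel.shape
    padded = np.pad(image, ((kh // 2, kh // 2), (kw // 2, kw // 2)))
    # windows has shape (H, W, kh, kw): one kernel-sized patch per output pixel
    windows = sliding_window_view(padded, (kh, kw))
    # Flip the kernel (true convolution), then multiply and sum over each patch
    return np.einsum("ijmn,mn->ij", windows, kernel[::-1, ::-1])

# An impulse image makes the kernel flip visible: convolving an impulse
# with a kernel stamps a scaled copy of the kernel around the impulse.
image = np.zeros((5, 5), dtype=int)
image[2, 2] = 5
kernel = np.arange(1, 10).reshape(3, 3)

result = conv2d_same_vectorized(image, kernel)
print(result[1:4, 1:4])
# [[ 5 10 15]
#  [20 25 30]
#  [35 40 45]]
```

With the identity kernel from the example above, this returns the input unchanged, just like the loop version.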

B. SciPy: The Practical & Efficient Way

For most scientific computing tasks, you should use scipy.signal.convolve2d. It's highly optimized, handles padding modes automatically, and is much faster than the manual NumPy loop.

import numpy as np
from scipy.signal import convolve2d

# --- Example Usage ---
# Same image and kernel as before
image = np.array([
    [0, 0, 0, 0, 0],
    [0, 0, 0, 0, 0],
    [0, 0, 5, 0, 0],
    [0, 0, 0, 0, 0],
    [0, 0, 0, 0, 0]
])

kernel = np.array([
    [0, 0, 0],
    [0, 1, 0],
    [0, 0, 0]
])

# mode='same' ensures the output has the same shape as the input
# boundary='fill' with fillvalue=0 is equivalent to zero-padding
convolved_image_scipy = convolve2d(image, kernel, mode='same', boundary='fill', fillvalue=0)

print("Original Image:")
print(image)
print("\nConvolved Image (using SciPy):")
print(convolved_image_scipy)

Key Parameters for convolve2d:

- mode: How to handle the output size.
  - 'full': Output size is (image_h + kernel_h - 1, image_w + kernel_w - 1). This is the default.
  - 'valid': Output size is (image_h - kernel_h + 1, image_w - kernel_w + 1). No padding is used.
  - 'same': Output size is the same as the input image. This is the most common choice for image filtering.

- boundary: How to handle the borders.
  - 'fill': Pads with a constant value (fillvalue). This is the default, with fillvalue=0.
  - 'wrap': Pads by wrapping the image around.
  - 'symm': Pads by reflecting the image across the edge.
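A quick way to see what these parameters do is to print the output shape for each mode and compare one corner pixel across boundary settings. This is an illustrative sketch; the 5x5 ramp image and the 3x3 box-blur kernel are arbitrary choices, not taken from the examples above.

```python
import numpy as np
from scipy.signal import convolve2d

image = np.arange(25, dtype=float).reshape(5, 5)
kernel = np.ones((3, 3)) / 9.0  # 3x3 box blur (symmetric, so flipping is a no-op)

# Output shape for each mode
shapes = {
    mode: convolve2d(image, kernel, mode=mode).shape
    for mode in ("full", "valid", "same")
}
print(shapes)
# {'full': (7, 7), 'valid': (3, 3), 'same': (5, 5)}

# Top-left output pixel under each boundary rule (mode='same')
corner = {
    boundary: convolve2d(image, kernel, mode="same", boundary=boundary)[0, 0]
    for boundary in ("fill", "wrap", "symm")
}
print(corner)
```

The corner value differs between boundary rules because each rule supplies different values for the pixels that fall outside the image.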

C. PyTorch: For Deep Learning

In deep learning, especially with Convolutional Neural Networks (CNNs), you don't implement convolution manually. You use a framework like PyTorch, which provides highly optimized GPU-accelerated operations.

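One difference to keep in mind: PyTorch's F.conv2d actually computes cross-correlation, meaning it slides the kernel without flipping it first. For symmetric kernels the two operations give identical results, and for learned kernels the distinction doesn't matter, because the network simply learns the flipped weights.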

import torch
import torch.nn.functional as F

# --- Example Usage ---
# PyTorch expects inputs as Tensors with a batch dimension and channel dimension
# Shape: (batch_size, channels, height, width)
# Our image is grayscale (1 channel) and is one sample in a batch (batch_size=1)
image_tensor = torch.tensor([
    [0, 0, 0, 0, 0],
    [0, 0, 0, 0, 0],
    [0, 0, 5, 0, 0],
    [0, 0, 0, 0, 0],
    [0, 0, 0, 0, 0]
], dtype=torch.float32).unsqueeze(0).unsqueeze(0)  # Shape: (1, 1, 5, 5)

# The kernel also needs channel dimensions
# Shape: (out_channels, in_channels, kernel_height, kernel_width)
# We have 1 input channel and 1 output channel
kernel_tensor = torch.tensor([
    [0, 0, 0],
    [0, 1, 0],
    [0, 0, 0]
], dtype=torch.float32).unsqueeze(0).unsqueeze(0)  # Shape: (1, 1, 3, 3)

# Use F.conv2d
# padding=1 ensures 'same' padding for a 3x3 kernel
convolved_image_pt = F.conv2d(image_tensor, kernel_tensor, padding=1)

print("Original Image Tensor Shape:", image_tensor.shape)
print("Convolved Image Tensor Shape:", convolved_image_pt.shape)

# Convert back to numpy for printing
print("\nConvolved Image (using PyTorch):")
print(convolved_image_pt.squeeze().numpy())

Key Concepts in PyTorch:

- Tensors: All data is represented as Tensors.

- F.conv2d: The main convolution function.

- Padding (padding=1): You specify the number of pixels to pad on each side. For an odd kernel size K and a stride of 1, padding = K // 2 gives 'same' output size.

- Batch & Channel Dimensions: This is the standard for deep learning models. Even a single image must have these dimensions.
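Two of these points are easy to verify in code. The sketch below first checks that padding = K // 2 preserves the spatial size for K = 3 and K = 5, then uses an impulse image and an asymmetric kernel to show that F.conv2d does not flip the kernel. Flipping it manually with `torch.flip` gives a true convolution. The tensors are illustrative choices, not part of the example above.

```python
import torch
import torch.nn.functional as F

image = torch.arange(25, dtype=torch.float32).reshape(1, 1, 5, 5)

# padding = K // 2 keeps the spatial size for odd K with stride 1
out_shapes = {}
for k in (3, 5):
    kernel = torch.ones(1, 1, k, k)
    out_shapes[k] = tuple(F.conv2d(image, kernel, padding=k // 2).shape)
print(out_shapes)
# {3: (1, 1, 5, 5), 5: (1, 1, 5, 5)}

# An impulse image exposes the difference between correlation and convolution
impulse = torch.zeros(1, 1, 5, 5)
impulse[0, 0, 2, 2] = 1.0
asym = torch.arange(1, 10, dtype=torch.float32).reshape(1, 1, 3, 3)

# F.conv2d as-is: cross-correlation, so the kernel appears flipped in the output
correlated = F.conv2d(impulse, asym, padding=1)
# Flip the kernel in both spatial axes first to get a true convolution
convolved = F.conv2d(impulse, torch.flip(asym, dims=(2, 3)), padding=1)

print(correlated[0, 0, 1:4, 1:4])  # the kernel, flipped in both axes
print(convolved[0, 0, 1:4, 1:4])   # the kernel itself
```

For a symmetric kernel such as the identity filter used earlier, the two results are the same.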

Summary: Which One to Use?

| Library | When to Use | Key Features |
|---|---|---|
| NumPy | Learning & Prototyping. When you need to understand the mechanics. | Manual implementation is great for learning. Slow for large data. |
| SciPy | Scientific Computing & Image Processing. For general-purpose, efficient 2D convolution. | Highly optimized, easy-to-use API (convolve2d), handles padding well. |
| PyTorch / TensorFlow | Deep Learning & Machine Learning. | GPU acceleration, automatic differentiation, integrates into neural network models. The standard for modern AI. |