Of course! statsmodels is a fundamental and powerful Python library for statistical modeling, hypothesis testing, and exploratory data analysis. It's the go-to library when you need to understand the underlying statistics of your model, not just make predictions.

Here’s a comprehensive guide covering what it is, why it's important, its core features, and how to use it with practical examples.

What is statsmodels?

statsmodels is a Python package that provides classes and functions for the estimation of many different statistical models, as well as for conducting statistical tests and exploring statistical data.

Its key differentiator from other machine learning libraries (like scikit-learn) is its statistical focus. While scikit-learn is excellent for predictive modeling and machine learning, statsmodels is designed for inference. It provides detailed statistical outputs like p-values, confidence intervals, R-squared, AIC/BIC, and more.

Why Use statsmodels?

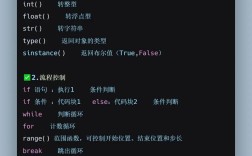

You should use `statsmodels when you need to:

- Understand your model's statistical properties: You want to know which variables are statistically significant, their effect size, and the uncertainty around your estimates.

- Test hypotheses: You need to perform formal statistical tests (e.g., t-tests, F-tests, chi-squared tests).

- Diagnose model fit: You need metrics to understand how well your model explains the data (e.g., R-squared, Adjusted R-squared, F-statistic).

- Explore relationships: You want to perform analysis of variance (ANOVA), or decompose time series data (e.g., using seasonal decomposition).

Key Features

statsmodels is organized into several modules, each catering to a different area of statistics:

statsmodels.regression.linear_model: Linear regression models, including Ordinary Least Squares (OLS), Generalized Least Squares (GLS), and more.statsmodels.tsa.api: Time series analysis, including ARIMA, VAR (Vector Autoregression), and seasonal decomposition.statsmodels.discrete.discrete_model: Models for discrete dependent variables, like Logistic Regression (Logit) and Probit Regression.statsmodels.genmod.generalized_linear_model: Generalized Linear Models (GLMs), an extension of linear regression for non-normal error distributions.statsmodels.stats: A wide array of statistical tests, power analysis, and multiple testing corrections.statsmodels.graphics: Tools for plotting statistical results, like regression plots, QQ-plots, and residual plots.statsmodels.formula.api: A user-friendly interface that allows you to specify models using R-like formulas (e.g.,y ~ x1 + x2).

Installation

You can install statsmodels using pip or conda:

# Using pip pip install statsmodels # Using conda conda install statsmodels

Practical Examples

Let's walk through a few common use cases.

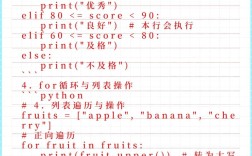

Example 1: Linear Regression (OLS)

This is the most common use case. We'll use the mtcars dataset, which is conveniently included in statsmodels.

import statsmodels.api as sm

import statsmodels.formula.api as smf

import pandas as pd

import numpy as np

# Load the mtcars dataset

mtcars = sm.datasets.get_rdataset("mtcars", "datasets").data

# Let's model miles per gallon (mpg) as a function of horsepower (hp) and weight (wt)

# Using the formula API (recommended for its readability)

model = smf.ols('mpg ~ hp + wt', data=mtcars)

# Fit the model

results = model.fit()

# Print the detailed summary

print(results.summary())

Output and Interpretation:

OLS Regression Results

==============================================================================

Dep. Variable: mpg R-squared: 0.827

Model: OLS Adj. R-squared: 0.815

Method: Least Squares F-statistic: 69.21

Date: ... Prob (F-statistic): 9.11e-12

Time: ... Log-Likelihood: -74.324

No. Observations: 32 AIC: 154.6

Df Residuals: 29 BIC: 159.3

Df Model: 2

Covariance Type: nonrobust

==============================================================================

coef std err t P>|t| [0.025 0.975]

------------------------------------------------------------------------------

Intercept 37.2273 1.878 19.828 0.000 33.395 41.060

hp -0.0318 0.009 -3.479 0.002 -0.051 -0.013

wt -3.8778 0.633 -6.129 0.000 -5.177 -2.579

==============================================================================

Omnibus: 1.326 Durbin-Watson: 2.646

Prob(Omnibus): 0.515 Jarque-Bera (JB): 0.614

Skew: 0.191 Prob(JB): 0.736

Kurtosis: 2.879 Cond. No. 17.6

==============================================================================Key things to look for in the summary:

R-squared(0.827): ~83% of the variance inmpgis explained byhpandwt. This is a measure of model fit.Adj. R-squared(0.815): Similar to R-squared but adjusts for the number of predictors. Better for comparing models with different numbers of predictors.F-statisticandProb (F-statistic): This tests the overall significance of the model. A low p-value (here,11e-12) means the model is statistically significant.coef(Coefficients): These are the estimated values for the intercept and the slopes (hp,wt).wtcoefficient is-3.8778. This means that for every one-unit increase in weight (wt),mpgdecreases by 3.8778 units, holdinghpconstant.

P>|t|(p-values for coefficients): These test the null hypothesis that the true coefficient is zero.- The p-values for

hp(0.002) andwt(0.000) are very low, meaning both are statistically significant predictors ofmpg.

- The p-values for

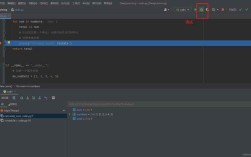

Example 2: Logistic Regression

Logistic regression is used when your dependent variable is binary (0 or 1).

# Use the same dataset, but create a binary variable for high/low mpg

mtcars['high_mpg'] = (mtcars['mpg'] > mtcars['mpg'].median()).astype(int)

# Model the probability of high_mpg based on horsepower (hp)

logit_model = smf.logit('high_mpg ~ hp', data=mtcars)

logit_results = logit_model.fit()

print(logit_results.summary())

Interpretation:

The coefficients are in log-odds. To interpret them more intuitively, you often exponentiate them to get Odds Ratios.

# Get the Odds Ratios print(np.exp(logit_results.params))

An odds ratio for hp of, for example, 95 would mean that for every one-unit increase in horsepower, the odds of having a high MPG car decrease by 5% (1 - 0.95).

Example 3: Time Series Analysis (Seasonal Decomposition)

statsmodels excels at time series analysis. Let's decompose a time series into its trend, seasonal, and residual components.

import matplotlib.pyplot as plt

from statsmodels.tsa.seasonal import seasonal_decompose

# Create a sample time series data

date_rng = pd.date_range(start='2025-01-01', end='2025-12-31', freq='M')

ts_data = np.sin(np.arange(len(date_rng)) * 0.5) * 10 + np.random.normal(loc=0, scale=5, size=len(date_rng))

ts_df = pd.DataFrame({'value': ts_data}, index=date_rng)

# Decompose the time series

# We assume a yearly seasonal period (frequency=12 for monthly data)

decomposition = seasonal_decompose(ts_df['value'], model='additive', period=12)

# Plot the decomposition

fig = decomposition.plot()

fig.set_size_inches(14, 7)

plt.show()

This will produce four plots:

- Observed: The original time series data.

- Trend: The long-term progression of the series.

- Seasonal: The periodic, cyclical patterns.

- Residual: What's left over after removing the trend and seasonal components (the "noise").

statsmodels vs. scikit-learn

This is a crucial distinction for any data scientist in Python.

| Feature | statsmodels |

scikit-learn |

|---|---|---|

| Primary Goal | Statistical Inference (understanding relationships) | Predictive Modeling (making accurate predictions) |

| Output | Detailed summaries with p-values, confidence intervals, R-squared. | Model objects with .predict() and .score() methods. |

| Focus | Understanding the "why" behind the model. | Optimizing for performance metrics (accuracy, MSE, etc.). |

| Typical Use | "Is variable X a significant predictor of Y?" | "What is the most accurate way to predict Y from X?" |

| Formula API | Excellent, R-like formulas (y ~ x1 + x2). |

Has sklearn.pipeline.Pipeline and ColumnTransformer for a similar feel. |

| Example Models | OLS, Logit, GLS, ARIMA |

LinearRegression, LogisticRegression, RandomForestRegressor, SVC |

In practice: They are often used together. You might use statsmodels to explore your data, select significant features, and understand the relationships. Then, you might use scikit-learn to build a final predictive model, perhaps using features selected during the statsmodels phase.