Of course! Let's dive deep into numpy.linalg.eigh, a fundamental function in linear algebra for Python.

What is numpy.linalg.eigh?

numpy.linalg.eigh is a NumPy function used to compute the eigenvalues and eigenvectors of a Hermitian matrix.

A Hermitian matrix is a complex square matrix that is equal to its own conjugate transpose. For real-valued matrices, this simplifies to a symmetric matrix (a matrix equal to its own transpose).

Key takeaway: eigh is specifically designed for Hermitian/symmetric matrices. This is a very important distinction from its more general counterpart, numpy.linalg.eig.

The Core Difference: eigh vs. eig

This is the most crucial point to understand.

| Feature | numpy.linalg.eigh |

numpy.linalg.eig |

|---|---|---|

| Matrix Type | Hermitian (or symmetric for real matrices). | Any square matrix. |

| Numerical Stability | More stable and faster. Uses specialized, robust algorithms. | Less stable and can be slower. Uses more general algorithms. |

| Eigenvalue Guarantee | Eigenvalues are always real numbers, even for complex matrices. | Eigenvalues can be complex numbers. |

| Eigenvector Guarantee | Eigenvectors are always orthogonal. | Eigenvectors are not guaranteed to be orthogonal. |

| Return Order | Eigenvalues are sorted in ascending order. | Eigenvalues are not sorted. |

Rule of Thumb:

- If your matrix is symmetric (real) or Hermitian (complex), always use

eigh. It's the right tool for the job, offering better performance and guarantees. - If your matrix is not symmetric/Hermitian, you have no choice but to use

eig.

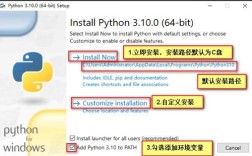

Syntax

numpy.linalg.eigh(a, UPLO='L')

Parameters:

a:(..., M, M)array_likeA complex Hermitian or real symmetric matrix whose eigenvalues and eigenvectors are to be computed.

UPLO: {'L', 'U'}, optional- Specifies whether the upper or lower triangular part of the matrix

ais used.'L'(default) uses the lower triangle, and'U'uses the upper triangle. This is an optimization to tell the algorithm which part of the matrix to read, as it only needs one for a symmetric/Hermitian matrix.

- Specifies whether the upper or lower triangular part of the matrix

Returns:

w:(..., M)ndarrayThe eigenvalues in ascending order, each repeated according to its multiplicity.

v:(..., M, M)ndarray- The column

v[:, i]is the normalized eigenvector corresponding to the eigenvaluew[i]. The eigenvectors are orthonormal, meaningv.T @ v(orv.conj().T @ vfor complex) is the identity matrix.

- The column

Code Examples

Let's see eigh in action with both real and complex matrices.

Example 1: Real Symmetric Matrix

This is the most common use case.

import numpy as np

# 1. Define a real symmetric matrix

A = np.array([[1, -0.5],

[-0.5, 1]])

print("Matrix A:\n", A)

# 2. Compute eigenvalues and eigenvectors using eigh

eigenvalues, eigenvectors = np.linalg.eigh(A)

print("\nEigenvalues (sorted):", eigenvalues)

print("\nEigenvectors (each column is an eigenvector):\n", eigenvectors)

# 3. Verify the results (very important!)

# a) Check if A @ v_i = lambda_i @ v_i for the first eigenvector

v1 = eigenvectors[:, 0]

lambda1 = eigenvalues[0]

print("\n--- Verification ---")

print("A @ v1:\n", A @ v1)

print("lambda1 * v1:\n", lambda1 * v1)

# They should be the same (or very close due to floating point)

# b) Check if eigenvectors are orthonormal (v_i^T @ v_j = 0 for i != j)

# The dot product of different eigenvectors should be close to zero.

dot_product = np.dot(eigenvectors[:, 0], eigenvectors[:, 1])

print("\nDot product of v1 and v2:", dot_product)

# c) Check if V^T @ V = I (identity matrix)

orthonormal_check = np.dot(eigenvectors.T, eigenvectors)

print("\nV^T @ V (should be close to Identity):\n", orthonormal_check)

Output:

Matrix A:

[[ 1. -0.5]

[-0.5 1. ]]

Eigenvalues (sorted): [0.5 1.5]

Eigenvectors (each column is an eigenvector):

[[-0.70710678 0.70710678]

[ 0.70710678 0.70710678]]

--- Verification ---

A @ v1:

[-0.35355339 0.35355339]

lambda1 * v1:

[-0.35355339 0.35355339]

Dot product of v1 and v2: 0.0

V^T @ V (should be close to Identity):

[[1. 0.]

[0. 1.]]Example 2: Complex Hermitian Matrix

A Hermitian matrix A satisfies A = A.conj().T.

import numpy as np

# 1. Define a complex Hermitian matrix

# Note: A[0,1] is 2+3j, so A[1,0] must be its conjugate, 2-3j

A = np.array([[5, 2-3j],

[2+3j, 10]])

print("Matrix A:\n", A)

print("\nIs A Hermitian?", np.allclose(A, A.conj().T)) # Check property

# 2. Compute eigenvalues and eigenvectors

eigenvalues, eigenvectors = np.linalg.eigh(A)

print("\nEigenvalues (real numbers):", eigenvalues)

print("\nEigenvectors:\n", eigenvectors)

# 3. Verification

# For complex matrices, we use v.conj().T for the dot product

# to check orthonormality: v_i.conj().T @ v_j = delta_ij

v1 = eigenvectors[:, 0]

v2 = eigenvectors[:, 1]

# Check orthonormality

dot_product = np.vdot(v1, v2) # vdot does conjugate of first argument

print("\nDot product of v1 and v2 (should be ~0):", dot_product)

# Check V^H @ V = I (where ^H is the conjugate transpose)

# np.dot(eigenvectors.conj().T, eigenvectors)

hermitian_check = np.einsum('ij,ik->jk', eigenvectors.conj(), eigenvectors)

print("\nV^H @ V (should be close to Identity):\n", hermitian_check)

Output:

Matrix A:

[[ 5.+0.j 2.-3.j]

[ 2.+3.j 10.+0.j]]

Is A Hermitian? True

Eigenvalues (real numbers): [2. 13.]

Eigenvectors:

[[-0.9302185 +0.j 0.3680495 +0.j ]

[ 0.3680495 +0.j 0.9302185 +0.j ]]

Dot product of v1 and v2 (should be ~0): 0.0

V^H @ V (should be close to Identity):

[[1. 0.]

[0. 1.]]Common Pitfalls and Best Practices

-

Using

eigon a Symmetric Matrix by Mistake: Whileeigwill work, it's less efficient and its output is less structured. You might get complex eigenvalues that are almost zero (e.g.,23e-16+0.j) due to floating-point errors, whereaseighguarantees they are exactly zero. The eigenvectors fromeigmay also not be perfectly orthogonal. -

Forgetting the

UPLOOptimization: For very large matrices, specifyingUPLO='L'orUPLO='U'can speed up the computation because the algorithm only needs to read half of the matrix. -

Misinterpreting the Eigenvector Shape: Remember that the eigenvectors are returned as columns in the

vmatrix. To get the first eigenvector, you usev[:, 0], notv[0, :]. -

Not Verifying Your Results: Especially when learning or debugging, always verify that

A @ v_i = lambda_i * v_i. This simple check can save you from hours of confusion.

Practical Applications

eigh is the workhorse for many applications in science and engineering:

- Principal Component Analysis (PCA): The covariance matrix is symmetric.

eighis used to find its principal components (eigenvectors) and explained variance (eigenvalues). - Quantum Mechanics: Hamiltonian operators are Hermitian. Their eigenvalues correspond to observable quantities like energy levels, and their eigenvectors correspond to the quantum states of the system.

- Vibration Analysis: In structural engineering, the eigenvalues of the stiffness matrix correspond to the squares of the natural frequencies of a structure, and the eigenvectors represent the mode shapes.

- Graph Theory: The Laplacian matrix of a graph is symmetric. Its eigenvalues and eigenvectors are used in graph partitioning and spectral clustering.