Of course! The Python Coverage API is a powerful way to measure, control, and analyze the code coverage of your tests programmatically. While the coverage command-line tool is great for quick checks, the API gives you fine-grained control, which is essential for integrating coverage into your CI/CD pipelines, custom testing runners, or complex development workflows.

Here's a comprehensive guide to the Python Coverage API, broken down into key concepts, common use cases, and detailed examples.

Core Concepts: The Coverage Class

The heart of the API is the coverage.Coverage class. This object manages the entire coverage process: starting and stopping measurement, erasing data, and generating reports.

Key Methods:

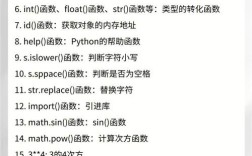

In the list below, cov stands for an instance created with coverage.Coverage(...).

- coverage.Coverage(...): The constructor. You can configure it here with options like data file paths, source directories, etc.
- cov.start(): Starts recording code coverage.
- cov.stop(): Stops recording. It's crucial to pair this with start().
- cov.save(): Saves the collected coverage data to the data file (e.g., .coverage).
- cov.erase(): Erases previously collected coverage data.
- cov.combine(data_paths=None): Combines data from multiple files (useful for parallel test execution).
- cov.report(...): Generates a text report on the console and returns the total percentage covered.
- cov.html_report(...): Generates an HTML report in a specified directory.
- cov.xml_report(...): Generates an XML report (great for CI tools like Jenkins/Coveralls).
- cov.json_report(...): Generates a JSON report.
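The advice to pair start() with stop() can be made concrete with try/finally. What follows is a minimal sketch, not something coverage.py ships: run_measured is a hypothetical helper name, and writing the data to a throwaway temporary file is an assumption made so the example leaves any existing .coverage file alone.

```python
import os
import tempfile

import coverage


def run_measured(func, *args, **kwargs):
    """Call func under coverage measurement and always stop afterwards.

    Hypothetical helper for illustration; returns (result, cov).
    """
    # Assumption: use a throwaway data file so an existing .coverage
    # file in the working directory is left untouched.
    data_file = os.path.join(tempfile.mkdtemp(), ".coverage")
    cov = coverage.Coverage(data_file=data_file)
    cov.start()
    try:
        result = func(*args, **kwargs)
    finally:
        # stop() runs even if func raises, so tracing never leaks
        # into the rest of the program.
        cov.stop()
    return result, cov
```

A call such as run_measured(my_function, 1, 2) returns the function's result together with the Coverage object, which can then be saved or reported on as usual.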

Basic Workflow: A Simple Example

This is the most common pattern: run some code, then generate a report.

Let's say you have a simple module to test:

my_module.py

def add(a, b):
    """Adds two numbers."""
    return a + b


def subtract(a, b):
    """Subtracts b from a."""
    return a - b


def divide(a, b):
    """Divides a by b."""
    if b == 0:
        raise ValueError("Cannot divide by zero")
    return a / b

And a test script that uses the Coverage API:

test_with_api.py

import coverage

# 1. Initialize the Coverage object
cov = coverage.Coverage()

# 2. Start measuring
cov.start()

# Import the code under test only after start(). Module-level lines
# (such as the def statements) run at import time and would otherwise
# be reported as missed.
import my_module

# 3. Run your code (e.g., your tests)
print("Running tests...")
try:
    result1 = my_module.add(2, 3)
    print(f"add(2, 3) = {result1}")

    result2 = my_module.subtract(5, 1)
    print(f"subtract(5, 1) = {result2}")

    # We will intentionally NOT call divide() to see it marked as uncovered
    # result3 = my_module.divide(10, 2)
    # print(f"divide(10, 2) = {result3}")
except Exception as e:
    print(f"An error occurred: {e}")

# 4. Stop measuring
cov.stop()

# 5. Save the data
cov.save()

# 6. Generate reports
print("\nGenerating text report...")
# report(show_missing=True) lists the line numbers that were not executed
cov.report(show_missing=True)

print("\nGenerating HTML report...")
# html_report creates a detailed report in a directory
cov.html_report(directory='htmlcov')
print("HTML report generated in 'htmlcov' directory.")

How to run it:

# Make sure you have coverage installed
pip install coverage

# Run the test script
python test_with_api.py

Expected Output:

The output should look roughly like this. The Missing column assumes my_module.py has two blank lines between functions, so the body of divide() sits on lines 13-15. Because no source filter is set, the report may also contain a row for test_with_api.py and a TOTAL row, and recent coverage versions may print the path of the HTML report they wrote.

Running tests...
add(2, 3) = 5
subtract(5, 1) = 4

Generating text report...
Name           Stmts   Miss  Cover   Missing
--------------------------------------------
my_module.py       8      3    62%   13-15

Generating HTML report...
HTML report generated in 'htmlcov' directory.

The three missed statements are the ones inside divide(), which is never called. If you open htmlcov/index.html in your browser, you'll see a detailed, color-coded report of which lines were executed.
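Besides printing the table, report() returns the total percentage as a float, which a script can compare against a minimum. The sketch below illustrates this on a throwaway module written to a temporary directory. The module name cov_demo_mod, the helper names, and the directory layout are all assumptions made for the demonstration.

```python
import importlib
import io
import sys
import tempfile
import textwrap
from pathlib import Path

import coverage

# Hypothetical module source used only for this demonstration.
DEMO_SOURCE = textwrap.dedent(
    '''
    def add(a, b):
        return a + b

    def divide(a, b):
        if b == 0:
            raise ValueError("Cannot divide by zero")
        return a / b
    '''
)


def measure_demo_module():
    """Measure a throwaway module and return (total_percent, report_text)."""
    tmp = tempfile.mkdtemp()
    (Path(tmp) / "cov_demo_mod.py").write_text(DEMO_SOURCE)
    sys.path.insert(0, tmp)
    importlib.invalidate_caches()
    sys.modules.pop("cov_demo_mod", None)  # force a fresh import

    cov = coverage.Coverage(
        data_file=str(Path(tmp) / ".coverage"),
        source=[tmp],  # only report on files in the temporary directory
    )
    cov.start()
    try:
        demo = importlib.import_module("cov_demo_mod")
        demo.add(2, 3)  # divide() is deliberately never called
    finally:
        cov.stop()
        sys.path.remove(tmp)

    buffer = io.StringIO()
    # report() writes the table to `file` and returns the total percentage.
    total = cov.report(file=buffer, show_missing=True)
    return total, buffer.getvalue()


def meets_threshold(total_percent, minimum):
    """Return True when the measured total reaches the required minimum."""
    return total_percent >= minimum
```

In a CI script, the result of meets_threshold(total, 80.0) could decide the process exit code. The value 80.0 is only an example threshold.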

Common Use Cases & API Patterns

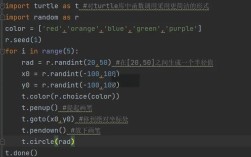

A. Using Coverage as a Context Manager

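For simple scripts, a with block is cleaner and ensures that measurement is stopped even if an error occurs. Coverage 7.3 and later provide the Coverage.collect() context manager for this. The Coverage object itself is not used directly in the with statement, and there is no need to call start() inside the block.

import coverage

cov = coverage.Coverage()

with cov.collect():
    # Import and run the code under test inside the block so that
    # module-level lines (such as def statements) are measured too.
    import my_module

    my_module.add(1, 2)
    my_module.subtract(4, 3)

# Measurement stops automatically when the 'with' block exits

# Now generate the report
cov.report()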
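The same stop-on-exit guarantee can also be built by hand with contextlib, for example when the installed coverage version has no built-in context manager. This is a minimal sketch. measuring is a hypothetical helper name, and it only assumes that the object passed in has start() and stop() methods, as coverage.Coverage does.

```python
from contextlib import contextmanager


@contextmanager
def measuring(cov):
    """Start measurement on entry and stop it on exit, even on error.

    `cov` is expected to be a coverage.Coverage instance, but any object
    with start() and stop() methods works. Hypothetical helper name.
    """
    cov.start()
    try:
        yield cov
    finally:
        cov.stop()


# Typical use (assumes the coverage package is installed):
#
#     import coverage
#     with measuring(coverage.Coverage()) as cov:
#         import my_module
#         my_module.add(1, 2)
#     cov.report()
```

Because stop() sits in a finally clause, an exception raised inside the with block still propagates, but tracing is switched off first.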

B. Integrating with pytest

pytest does not measure coverage on its own, but the pytest-cov plugin drives coverage.py for you, so you don't need to write the API calls yourself.

- Install pytest and pytest-cov:

    pip install pytest pytest-cov

- Run pytest with the cov plugin:

    # Run tests and print a terminal report
    pytest --cov=my_module

    # Print the terminal report and generate an HTML report as well
    pytest --cov=my_module --cov-report=term --cov-report=html

The pytest-cov plugin handles starting and stopping measurement for you. It's the recommended way to use coverage with pytest.

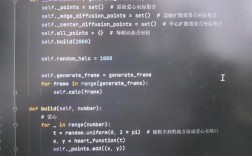

C. Combining Data from Parallel Execution (e.g., unittest)

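When you run tests in parallel (for example with pytest-xdist, or with a runner that spawns several worker processes), each worker writes its own coverage data file. The standard unittest runner has no built-in workers, so the parallelism has to come from such a tool. To get a complete picture, you must combine the per-worker files.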

Let's imagine you have a test suite that runs tests in parallel.

parallel_runner.py

import coverage
import unittest


# Create a dummy test suite for demonstration
class MyTests(unittest.TestCase):
    def test_addition(self):
        import my_module
        self.assertEqual(my_module.add(1, 1), 2)

    def test_subtraction(self):
        import my_module
        self.assertEqual(my_module.subtract(5, 3), 2)


if __name__ == '__main__':
    # Each parallel process needs its own Coverage object
    # and should save data to a unique file.
    cov = coverage.Coverage(data_file='.coverage.worker')
    cov.start()

    # Run the tests (a single worker stands in for a parallel run here).
    # In a real scenario, you'd use a library like `pytest-xdist` or `unittest-parallel`
    suite = unittest.TestLoader().loadTestsFromTestCase(MyTests)
    unittest.TextTestRunner().run(suite)

    cov.stop()
    cov.save()  # Save data for this specific worker

    # The main process (or a final step) needs to combine the files.
    # This part would typically be run by a master process after all workers are done.
    # For this example, we'll just combine them at the end.
    print("Worker finished. Combining data...")

    # Create a main Coverage object to combine into
    main_cov = coverage.Coverage(data_file='.coverage')
    main_cov.combine(data_paths=['.coverage.worker'])  # List of files to combine
    main_cov.save()
    main_cov.report()

    # No manual cleanup is needed: combine() deletes the worker files it
    # has merged unless keep=True is passed, so calling os.remove() here
    # would fail with FileNotFoundError.
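With several workers, the list passed to combine(data_paths=...) has to be gathered first. The helper below is one way to do that, sketched under the assumption that every worker writes a file named .coverage.<something> into one directory. find_worker_data_files is a hypothetical name and is not part of coverage.py.

```python
import glob
import os


def find_worker_data_files(directory=".", base_name=".coverage"):
    """Return sorted paths of per-worker data files such as .coverage.worker.

    The main data file itself (exactly `base_name`) is not included,
    because the pattern requires a dot and a suffix after the base name.
    """
    pattern = os.path.join(
        glob.escape(directory), glob.escape(base_name) + ".*"
    )
    return sorted(p for p in glob.glob(pattern) if os.path.isfile(p))


# Typical use (assumes the coverage package is installed):
#
#     main_cov = coverage.Coverage(data_file=".coverage")
#     main_cov.combine(data_paths=find_worker_data_files())
#     main_cov.save()
```

Sorting the paths only makes the order deterministic for logging; the combined result does not depend on it.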

D. Conditional Reporting (e.g., only in CI)

You often want to generate reports only in your Continuous Integration (CI) environment, not on every developer's local machine.

import coverage
import os

cov = coverage.Coverage()

# Start coverage for the code under test
cov.start()

# Import after start() so that module-level lines are measured too
import my_module

my_module.add(10, 20)

cov.stop()
cov.save()

# Check if we're in a CI environment (most CI services set the CI variable)
if os.environ.get('CI'):
    print("Running in CI, generating coverage reports...")
    cov.xml_report(outfile='coverage.xml')  # Jenkins, etc.
    cov.html_report(directory='cov_html')  # For artifacts
else:
    print("Not in CI, skipping report generation.")
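One caveat with os.environ.get('CI') is that any non-empty string is truthy, so CI=false would still trigger the reports. A slightly stricter check can be sketched as follows. running_in_ci is a hypothetical helper, and the set of values treated as "off" is an assumption rather than a standard.

```python
import os

# Assumption: these spellings mean "not in CI"; adjust to your CI provider.
_OFF_VALUES = {"", "0", "false", "no", "off"}


def running_in_ci(environ=None):
    """Return True when the CI variable is set to something other than 'off'."""
    env = os.environ if environ is None else environ
    return env.get("CI", "").strip().lower() not in _OFF_VALUES
```

Passing the environment as an argument keeps the function easy to test: running_in_ci({"CI": "true"}) is True, while running_in_ci({"CI": "false"}) and running_in_ci({}) are both False.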

Important Configuration Options

You can configure the Coverage object in its constructor.

source: A list of directories or modules to measure. This is crucial for large projects. It tells coverage to only care about code within these paths.

    # Only measure code within the 'src' directory
    cov = coverage.Coverage(source=['src'])

omit: A list of file patterns to exclude from measurement.

    # Exclude common test and virtual environment directories
    cov = coverage.Coverage(omit=['*/tests/*', '*/venv/*', '*/migrations/*'])

data_file: The path to the coverage data file (defaults to .coverage).

    # Store data in a specific file
    cov = coverage.Coverage(data_file='.coverage.project_x')
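These options can be combined in one constructor call, and the resulting settings can be read back with get_option(). The sketch below assumes a project with a src directory. It passes config_file=False so that a .coveragerc or similar file in the working directory does not change what the example shows.

```python
import coverage

cov = coverage.Coverage(
    config_file=False,  # ignore config files so only these arguments apply
    data_file=".coverage.project_x",
    source=["src"],  # assumed project layout
    omit=["*/tests/*", "*/venv/*"],
)

# Option names follow the "section:name" form used in coverage config files.
configured_source = cov.get_option("run:source")
configured_omit = cov.get_option("run:omit")
configured_data_file = cov.get_option("run:data_file")

print(configured_source, configured_omit, configured_data_file)
```

Nothing is measured or written to disk at this point. The options only take effect once start() is called.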

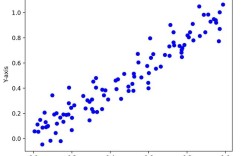

Advanced API: Analyzing Coverage Data

Sometimes you don't just want a report; you want to programmatically inspect the results.

import coverage

# 1. Run and save coverage data
cov = coverage.Coverage()
cov.start()

# Import after start() so that the def lines are measured too
import my_module

my_module.add(1, 2)
my_module.subtract(5, 3)
# Intentionally don't call my_module.divide()

cov.stop()
cov.save()

# 2. Analyze the collected data
# List every measured file and how many lines were recorded for it
data = cov.get_data()
print("Summary of covered files:")
for measured_file in sorted(data.measured_files()):
    lines = data.lines(measured_file) or []
    print(f" - {measured_file}: {len(lines)} lines executed")

# Get a detailed analysis of a specific file.
# analysis2() returns (filename, executable, excluded, missing, missing_formatted).
filename, executable_lines, _excluded, missing_lines, _formatted = cov.analysis2(my_module)
executed_lines = sorted(set(executable_lines) - set(missing_lines))

print(f"\nFor {filename}:")
print(f" Lines executed: {executed_lines}")
print(f" Total executable lines: {executable_lines}")
print(f" Missing lines: {missing_lines}")

The output will look something like this. File names are absolute paths, the script itself is also listed among the measured files, and the line numbers assume my_module.py has two blank lines between functions:

Summary of covered files:
 - /path/to/my_module.py: 5 lines executed
 - (a similar line for the script itself)

For /path/to/my_module.py:
 Lines executed: [1, 3, 6, 8, 11]
 Total executable lines: [1, 3, 6, 8, 11, 13, 14, 15]
 Missing lines: [13, 14, 15]
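Another route to programmatic inspection is the JSON report. After cov.json_report(outfile='coverage.json'), the file can be loaded with the standard json module. The sketch below assumes the report layout in which a top-level "files" mapping holds, for each path, a "summary" with a "percent_covered" value. The helper names are hypothetical.

```python
import json


def load_report(path):
    """Load a JSON coverage report written by cov.json_report(outfile=path)."""
    with open(path, encoding="utf-8") as handle:
        return json.load(handle)


def files_below(report, minimum):
    """Return (path, percent) pairs for files under `minimum` percent.

    Assumes report["files"][path]["summary"]["percent_covered"] exists.
    Results are ordered from least to most covered.
    """
    low = []
    for path, info in report.get("files", {}).items():
        percent = info["summary"]["percent_covered"]
        if percent < minimum:
            low.append((path, percent))
    return sorted(low, key=lambda item: item[1])
```

A call such as files_below(load_report('coverage.json'), 80) then gives a worklist of the least-covered files. The value 80 is only an example threshold.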