Using Python with Apache Spark on a Linux system is a very common and powerful combination for big data processing. This guide walks you through the entire process, from setup to running your first application, and covers key concepts and best practices along the way.

Here's a breakdown of what we'll cover:

- Core Concepts: What is Spark, and why use Python with it on Linux?

- Prerequisites: What you need installed before you start.

- Installation: Step-by-step guide to installing Spark and PySpark.

- Running Spark: Three ways to run PySpark (Interactive, Script, and on a Cluster).

- Code Example: A simple "Word Count" to see it in action.

- Best Practices: Tips for writing efficient and robust Spark code.

Core Concepts: Spark, Python, and Linux

- Apache Spark: A distributed, cluster-computing engine for processing large datasets. It keeps intermediate data in memory where possible, which makes it fast for iterative and interactive workloads.

- Python (PySpark): The Python API for Spark. It allows you to write Spark applications using Python syntax, which is often preferred for its simplicity and rich ecosystem of data science libraries (like Pandas, NumPy, Scikit-learn).

- Linux: The standard operating system for most big data clusters (Hadoop, YARN, Kubernetes) and the recommended environment for serious Spark development due to its stability and performance.

Why this combination is so popular:

- Ease of Use: Python is easier to learn and use than Java or Scala.

- Rapid Prototyping: You can quickly test ideas in an interactive shell.

- Ecosystem Integration: Integration with Pandas through the pandas API on Spark (`pyspark.pandas`), plus access to Spark's built-in machine learning library, MLlib.

- Scalability: Your Python code can run on a single laptop or scale out to a cluster of thousands of Linux machines without changing the core logic.

Prerequisites

Before installing Spark, ensure you have the following on your Linux machine (e.g., Ubuntu, CentOS, Rocky Linux):

- Java Development Kit (JDK): Spark is written in Scala, which runs on the Java Virtual Machine (JVM), so you need a JDK installed.

  - Check: `java -version`

  - Install (Ubuntu/Debian): `sudo apt update && sudo apt install openjdk-11-jdk` (or `openjdk-17-jdk`). Spark 3.3+ works best with JDK 11 or 17.

- Python: Python 3 is required (PySpark 3.5 needs Python 3.8 or newer).

  - Check: `python3 --version`

  - Install (Ubuntu/Debian): `sudo apt install python3 python3-pip`

- pip: The Python package installer.

  - Check: `pip3 --version`

  - Install (if missing): `sudo apt install python3-pip`
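The checks above can also be scripted. The sketch below uses hypothetical helper names (`find_tools`, `java_major_version`, `installed_java_major` are illustrative, not part of Spark): it looks the tools up on `PATH` and parses the major Java version out of `java -version`, which Java prints to stderr. It assumes the two version formats described in the docstring; other JDK vendors may print something different.

```python
import re
import shutil
import subprocess


def find_tools(names=("java", "python3", "pip3")):
    """Map each command name to its full path on PATH, or None if it is missing."""
    return {name: shutil.which(name) for name in names}


def java_major_version(version_output):
    """Extract the major Java version from `java -version` output.

    Handles the legacy style ("1.8.0_392" means Java 8) and the modern
    style ("11.0.21", "17.0.9", "21"). Returns None if nothing matches.
    """
    match = re.search(r'version "(\d+)(?:\.(\d+))?', version_output)
    if match is None:
        return None
    major = int(match.group(1))
    if major == 1 and match.group(2) is not None:
        return int(match.group(2))  # "1.8" is how Java 8 reports itself
    return major


def installed_java_major():
    """Run `java -version` (it writes to stderr) and parse the major version."""
    if shutil.which("java") is None:
        return None
    result = subprocess.run(["java", "-version"], capture_output=True, text=True)
    return java_major_version(result.stderr or result.stdout)
```

A result of `None` from `installed_java_major()` means `java` is not on `PATH`; otherwise you can compare the number against the JDK versions recommended above.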

Installation: Setting Up Spark and PySpark

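We'll install Spark locally on your machine. Jobs will run in local mode (`--master local[...]`), where Spark runs entirely on a single machine; this setup is perfect for learning and development. (Spark's "Standalone Mode" is something different: its built-in cluster manager, covered under Method 3 below.)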

Step 1: Download Apache Spark

- Go to the Apache Spark download page.

- Choose a Spark release (a recent stable version is best).

- Select a package type (Pre-built for Hadoop is fine).

- Click the link to download the `.tgz` file.

- Move the downloaded file to your home directory or `/opt` and extract it.

```bash
# Example for Spark 3.5.1
cd ~
wget https://archive.apache.org/dist/spark/spark-3.5.1/spark-3.5.1-bin-hadoop3.tgz

# Extract the archive
tar -xvzf spark-3.5.1-bin-hadoop3.tgz

# Create a symbolic link for easier access
# (optional, but the rest of this guide assumes Spark lives at /opt/spark)
sudo ln -s ~/spark-3.5.1-bin-hadoop3 /opt/spark
```
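Before extracting, it is worth checking the archive against the SHA-512 digest Apache publishes next to each download (a `.sha512` file). This is a minimal sketch that assumes you copy the expected hex digest from that file by hand; the function names are hypothetical. The comparison ignores spaces and letter case because published digests are not always formatted the same way as `hashlib` output.

```python
import hashlib


def sha512_of_file(path, chunk_size=1 << 20):
    """Return the hex SHA-512 digest of a file, reading it in 1 MiB chunks."""
    digest = hashlib.sha512()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def checksum_matches(path, expected_hex):
    """Compare a file against a published digest, ignoring whitespace and case."""
    normalized = "".join(expected_hex.split()).lower()
    return sha512_of_file(path) == normalized
```

For example, `checksum_matches("spark-3.5.1-bin-hadoop3.tgz", expected)` should return `True` when `expected` holds the digest pasted from the matching `.sha512` file.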

Step 2: Set Environment Variables

You need to tell your system where to find Spark and Java. Add the following lines to your shell's configuration file (e.g., ~/.bashrc or ~/.zshrc).

```bash
# Open the file with a text editor
nano ~/.bashrc
```

```bash
# Add these lines to the end of the file
export SPARK_HOME=/opt/spark
export PATH=$PATH:$SPARK_HOME/bin:$SPARK_HOME/sbin

# Set JAVA_HOME (adjust the path if your JDK is installed elsewhere)
export JAVA_HOME=/usr/lib/jvm/java-11-openjdk-amd64
```

```bash
# Apply the changes to your current terminal session
source ~/.bashrc
```
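If `spark-submit` or `pyspark` later fails with "command not found" or a Java error, a quick check of these variables usually finds the cause. The sketch below is a hypothetical helper (not part of Spark) that assumes the standard layout of a Spark download (`bin/spark-submit`) and of a JDK (`bin/java`); it accepts a plain dict so you can test other settings without touching your real environment.

```python
import os


def spark_env_problems(environ=None):
    """Return a list of human-readable problems with SPARK_HOME, PATH and JAVA_HOME.

    `environ` defaults to os.environ; pass a dict to check other settings.
    """
    env = os.environ if environ is None else environ
    problems = []

    spark_home = env.get("SPARK_HOME")
    if not spark_home:
        problems.append("SPARK_HOME is not set")
    else:
        if not os.path.isfile(os.path.join(spark_home, "bin", "spark-submit")):
            problems.append(f"{spark_home}/bin/spark-submit not found")
        # Normalize so "/opt/spark/bin" and "/opt/spark//bin" compare as equal
        path_dirs = {os.path.normpath(p) for p in env.get("PATH", "").split(os.pathsep) if p}
        if os.path.normpath(os.path.join(spark_home, "bin")) not in path_dirs:
            problems.append("$SPARK_HOME/bin is not on PATH")

    java_home = env.get("JAVA_HOME")
    if not java_home:
        problems.append("JAVA_HOME is not set")
    elif not os.path.isfile(os.path.join(java_home, "bin", "java")):
        problems.append(f"{java_home}/bin/java not found")

    return problems
```

An empty list from `spark_env_problems()` means both variables point at plausible installations.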

Step 3: Verify the Installation

Check if the spark-shell command is available.

```bash
spark-shell --version
```

You should see output detailing the Spark version.

Step 4: Install PySpark Library

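The Spark download already includes PySpark, but installing it as a Python package makes `import pyspark` work from any Python interpreter or IDE. Pin it to the same version as the Spark release you downloaded so the two don't drift apart:

```bash
pip3 install pyspark==3.5.1
```

On recent Debian and Ubuntu releases, `pip3 install` outside a virtual environment is blocked (PEP 668). Create one first with `python3 -m venv ~/spark-env` followed by `source ~/spark-env/bin/activate` (this needs the `python3-venv` package).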
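A pip-installed PySpark that does not match the Spark release in `SPARK_HOME` can lead to confusing errors. The sketch below is a hypothetical check: it reads the installed package version with `importlib.metadata` and, assuming your Spark directory keeps its default name such as `spark-3.5.1-bin-hadoop3`, extracts the release from that name. It treats versions as compatible when major and minor agree, which catches the common case of pip pulling a newer major release; pinning the exact version, as above, is still the safer habit.

```python
import re
from importlib import metadata


def installed_pyspark_version():
    """Return the pip-installed PySpark version string, or None if not installed."""
    try:
        return metadata.version("pyspark")
    except metadata.PackageNotFoundError:
        return None


def release_from_dirname(dirname):
    """Pull "3.5.1" out of a name like "spark-3.5.1-bin-hadoop3" (assumed naming)."""
    match = re.search(r"spark-(\d+\.\d+\.\d+)", dirname)
    return match.group(1) if match else None


def versions_match(pip_version, spark_release):
    """Loose check: consider versions compatible when major.minor agree."""
    if not pip_version or not spark_release:
        return False
    return pip_version.split(".")[:2] == spark_release.split(".")[:2]
```

With the symlink from Step 1, `release_from_dirname(os.path.realpath("/opt/spark"))` resolves the link first and should yield `"3.5.1"`.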

Running PySpark: Three Common Ways

Method 1: The Interactive Shell (PySpark Shell)

This is the best way to explore data and test transformations interactively.

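- Launch the shell. You can specify the number of CPU cores and the amount of memory to use:

```bash
pyspark --master "local[2]" --driver-memory 2g
```

- `--master local[2]`: Run Spark locally using 2 cores; `local[*]` uses all available cores.

- `--driver-memory 2g`: Allocate 2 GB of memory to the driver program.

The quotes around `local[2]` stop shells such as zsh from treating the square brackets as a filename pattern.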
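To make the `local` master URLs concrete, here is a small sketch that maps each form mentioned above to the number of worker threads it requests. It is an illustration of the documented meaning, not Spark's own parser: it handles only `local`, `local[N]`, and `local[*]`, and uses `os.cpu_count()` as an approximation of the core count the JVM will see (the two can differ inside containers).

```python
import os
import re


def local_master_threads(master):
    """Return how many worker threads a local master URL requests.

    "local" -> 1, "local[N]" -> N, "local[*]" -> number of logical CPUs.
    Returns None for anything else, e.g. "yarn" or "spark://host:7077".
    """
    if master == "local":
        return 1
    match = re.fullmatch(r"local\[(\d+|\*)\]", master)
    if match is None:
        return None
    value = match.group(1)
    return os.cpu_count() if value == "*" else int(value)
```

For instance, `local_master_threads("local[2]")` returns `2`, matching the shell command above.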

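- You'll see the Python `>>>` prompt. The shell has already created a SparkSession named `spark` (and a SparkContext named `sc`), so `getOrCreate()` below simply returns that existing session. You can now run Python code:

```python
>>> from pyspark.sql import SparkSession
>>> # Get the SparkSession (the shell has already created one)
>>> spark = SparkSession.builder.appName("MyFirstApp").getOrCreate()
>>> # Create a simple RDD (Resilient Distributed Dataset)
>>> data = [1, 2, 3, 4, 5]
>>> distData = spark.sparkContext.parallelize(data)
>>> # Perform an action: count the elements
>>> distData.count()
5
>>> # Perform a transformation: square each element (lazy until an action runs)
>>> squared = distData.map(lambda x: x * x)
>>> squared.collect()
[1, 4, 9, 16, 25]
>>> # Don't forget to stop the session
>>> spark.stop()
```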

Method 2: Running a Python Script

For more complex applications, you write your code in a .py file and submit it to Spark using spark-submit.

- Create a Python file, e.g., `my_app.py`:

```python
# my_app.py
from pyspark.sql import SparkSession

# Create a SparkSession
spark = SparkSession.builder \
    .appName("PythonWordCount") \
    .getOrCreate()

# Read the text file into an RDD of lines
lines = spark.sparkContext.textFile("file:///opt/spark/README.md")

# Split lines into words (on any whitespace), pair each word with 1, sum per word
counts = lines.flatMap(lambda line: line.split()) \
    .map(lambda word: (word, 1)) \
    .reduceByKey(lambda a, b: a + b)

# Save the output to a directory (it must not already exist, or the job fails)
output = "/tmp/wordcount_output"
counts.saveAsTextFile(output)
print(f"Word count results saved to {output}")

spark.stop()
```

- Submit the script to the Spark cluster (or local instance):

```bash
spark-submit --master "local[4]" my_app.py
```

`--master local[4]`: Use 4 local cores.

- Check the output:

```bash
# The output directory contains part-NNNNN files (one per partition)
ls -l /tmp/wordcount_output
cat /tmp/wordcount_output/part-00000
```
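Each line in those part files is the string form of one RDD element, so for `my_app.py` it looks like `('Spark', 14)`. The sketch below (hypothetical helper names) loads every part file back into a dictionary with `ast.literal_eval`, which parses such tuple literals safely, and skips Spark's `_SUCCESS` marker because it only matches files named `part-*`.

```python
import ast
import glob
import os


def read_wordcount_output(output_dir):
    """Load (word, count) pairs written by saveAsTextFile into a dict."""
    counts = {}
    for part in sorted(glob.glob(os.path.join(output_dir, "part-*"))):
        with open(part, encoding="utf-8") as f:
            for line in f:
                line = line.strip()
                if line:
                    # Each line is str() of a Python tuple, e.g. "('Spark', 14)"
                    word, n = ast.literal_eval(line)
                    counts[word] = counts.get(word, 0) + n
    return counts


def top_words(counts, k=10):
    """Return the k most frequent (word, count) pairs, highest first."""
    return sorted(counts.items(), key=lambda kv: kv[1], reverse=True)[:k]
```

Calling `top_words(read_wordcount_output("/tmp/wordcount_output"))` gives a quick view of the most common words without opening each part file.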

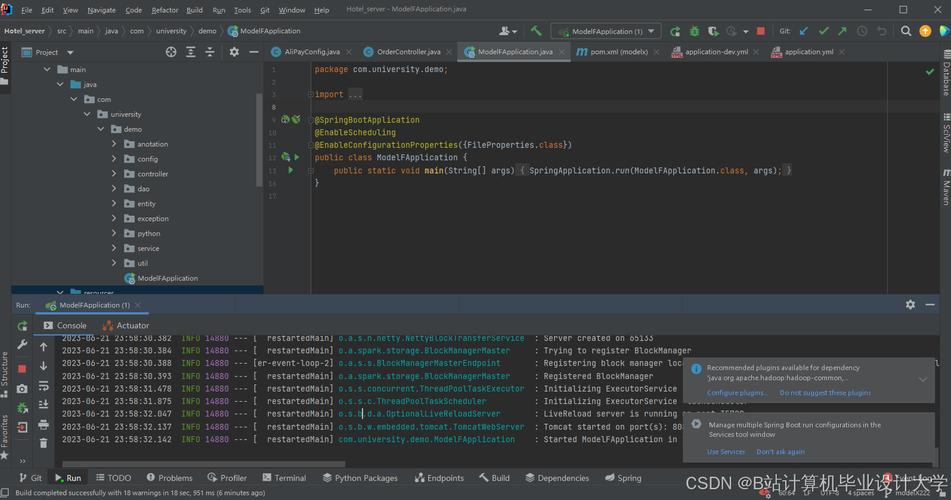

Method 3: Running on a Cluster (YARN, Kubernetes)

For production, you don't run Spark in local mode. You submit jobs to a cluster resource manager like YARN (common in Hadoop ecosystems) or Kubernetes.

The command is similar, but you change the master URL.

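- On YARN (Spark needs `HADOOP_CONF_DIR` or `YARN_CONF_DIR` to point at the cluster's Hadoop client configuration):

```bash
spark-submit --master yarn --deploy-mode cluster my_app.py
```

`--deploy-mode cluster`: The driver runs inside the cluster rather than on your local machine, so the job does not depend on your machine staying connected. This is the usual choice for production.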

- On a Standalone Cluster:

```bash
spark-submit --master spark://<master-node-ip>:7077 my_app.py
```
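If you launch jobs from Python (for example from a scheduler script), building the `spark-submit` arguments as a list avoids shell quoting problems such as the brackets in `local[4]`. This sketch uses hypothetical function names and only real `spark-submit` options (`--master`, `--deploy-mode`, `--driver-memory`, `--conf`); the one ordering rule it encodes is that options must come before the application file, and anything after the file is passed to your script.

```python
import shlex


def spark_submit_command(app, master="local[*]", deploy_mode=None,
                         driver_memory=None, conf=None, app_args=()):
    """Build an argument list for spark-submit."""
    cmd = ["spark-submit", "--master", master]
    if deploy_mode:
        cmd += ["--deploy-mode", deploy_mode]
    if driver_memory:
        cmd += ["--driver-memory", driver_memory]
    for key, value in (conf or {}).items():
        cmd += ["--conf", f"{key}={value}"]
    cmd.append(app)          # spark-submit options must come before the app file
    cmd.extend(app_args)     # everything after the app file goes to the application
    return cmd


def as_shell_string(cmd):
    """Render the list as a copy-pasteable shell command with safe quoting."""
    return shlex.join(cmd)
```

The list can be passed straight to `subprocess.run(cmd, check=True)`; `as_shell_string` is only for logging or copying into a terminal.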

Code Example: Word Count with DataFrames (Modern API)

While RDDs are fundamental, the DataFrame API is the preferred, more optimized way to work with structured data in modern Spark. (Scala and Java also offer the typed Dataset API, which is not available in Python.)

Here's the Word Count example using the DataFrame API.

```python
# wordcount_dataframe.py
from pyspark.sql import SparkSession
from pyspark.sql.functions import col, explode, lower, split

# Create a SparkSession
spark = SparkSession.builder \
    .appName("DataFrameWordCount") \
    .getOrCreate()

# Read a text file: each line becomes a row in a single string column named "value"
df = spark.read.text("file:///opt/spark/README.md")

# Perform transformations using Spark SQL functions:
# 1. Convert to lowercase
# 2. Split the "value" column into an array of words (on runs of whitespace)
# 3. Explode the array to get one word per row
words_df = df.select(
    explode(
        split(lower(col("value")), "\\s+")
    ).alias("word")
).filter(col("word") != "")  # drop empty strings from blank lines or leading spaces

# 4. Group by word, count, and sort by frequency
word_count_df = words_df.groupBy("word").count().orderBy(col("count").desc())

# Show the results (action)
word_count_df.show(20, truncate=False)

# Stop the SparkSession
spark.stop()
```

To run this:

```bash
spark-submit --master "local[4]" wordcount_dataframe.py
```
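When developing a pipeline like this, a plain-Python reference on a small input is handy for checking Spark's results. The sketch below roughly mirrors the DataFrame steps (lowercase each line, split into words, count); as an assumption of this sketch it splits on runs of whitespace and drops empty strings. Python's `\s` also matches some Unicode spaces that Java's regex `\s` does not, so results can differ on unusual input.

```python
import re
from collections import Counter


def word_count(text):
    """Lowercase each line, split on runs of whitespace, drop empties, count words."""
    counts = Counter()
    for line in text.splitlines():
        counts.update(w for w in re.split(r"\s+", line.lower()) if w)
    return counts
```

For example, `word_count(open("/opt/spark/README.md").read()).most_common(20)` gives a list you can compare against the `show(20)` output.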

Best Practices

- Use the DataFrame API: It's more performant (due to the Catalyst query optimizer) and easier to use than RDDs for most structured data tasks.

- Define Schemas: When reading data from sources like CSV or JSON, explicitly define a schema. Schema inference requires Spark to read through the data first, and an explicit schema prevents Spark from guessing the wrong column types.

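- Filter Early: Use `.filter()` as early as possible in your transformations to reduce the amount of data processed in subsequent steps.

- Avoid UDFs (User-Defined Functions) When Possible: Spark's optimizer cannot see inside a UDF, and Python UDFs also pay the cost of moving each row between the JVM and a Python worker process. Prefer the built-in functions in `pyspark.sql.functions`. If you must use a UDF, keep it as simple as possible, or consider a vectorized `pandas_udf`, which processes data in batches.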

- Be Mindful of `collect()`: The `collect()` action pulls all data from the cluster to the driver. Only use it on small datasets, and use `take(n)` or `show()` to inspect a sample. For large results, use `.write.save()` to save the data to a distributed file system (like HDFS or S3).

- Monitor Your Application: Use the Spark UI, which the driver serves at `http://<driver-node>:4040` while an application is running (if that port is taken, Spark tries 4041, 4042, and so on). It's an invaluable tool for understanding performance bottlenecks (e.g., shuffles, slow tasks).
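To follow the "Define Schemas" advice without writing a full `StructType`, PySpark's `DataFrameReader.schema()` also accepts a DDL-formatted string such as `` `name` STRING, `age` INT ``. The helper below is a hypothetical sketch that builds such a string from an ordered mapping of column names to Spark SQL types; it wraps names in backticks (doubling any backtick inside a name) so columns containing spaces still parse.

```python
def ddl_schema(columns):
    """Turn an ordered mapping of column name -> Spark SQL type into a DDL string."""
    parts = []
    for name, sql_type in columns.items():
        escaped = name.replace("`", "``")  # a literal backtick is written as ``
        parts.append(f"`{escaped}` {sql_type}")
    return ", ".join(parts)
```

Usage might look like `spark.read.schema(ddl_schema({"name": "STRING", "age": "INT"})).option("header", "true").csv("people.csv")`, where `people.csv` is a placeholder path.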