Of course. The "GBK" error in pymssql is a very common issue, especially for developers working with Chinese applications. It's a classic character encoding problem.

Here’s a detailed breakdown of why it happens and how to fix it.

The Root Cause: Character Encoding Mismatch

The error UnicodeEncodeError: 'gbk' codec can't encode character... happens because there's a mismatch between the Python script's internal character representation and the encoding expected by the database or the system.

-

Python 3 and Unicode: By default, Python 3 uses Unicode for all strings. A string like

你好,世界is stored internally as a sequence of Unicode code points. This is universal and can represent any character. -

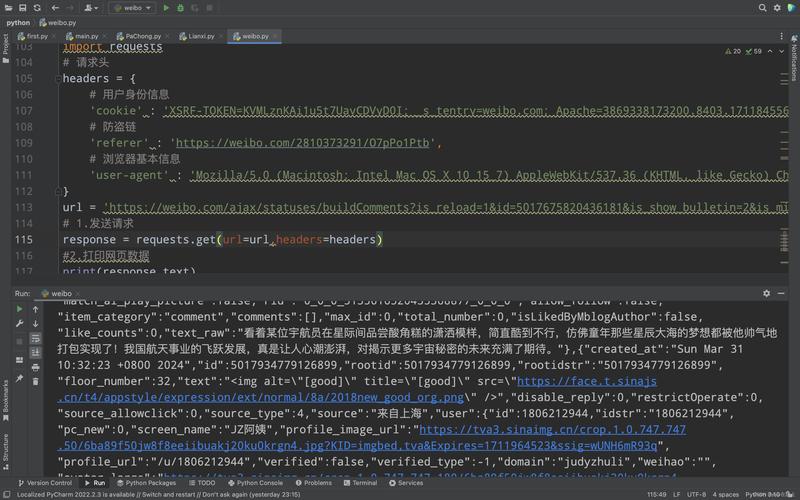

The Database Connection: When you send data from your Python script to the Microsoft SQL Server database using

pymssql, the library needs to convert your Python string into a byte stream. This process is called encoding. (图片来源网络,侵删)

(图片来源网络,侵删) -

The

GBKProblem: By default, on a Chinese Windows system, the system's default encoding is oftenGBK.pymssqlmight inherit this system-wide default if you don't specify an encoding explicitly. When it tries to encode your Unicode string (e.g., containing Chinese characters) into theGBKcodec, it fails becausepymssqlis trying to use the system's default encoding, which isGBKon a Chinese Windows machine. The character might not exist in theGBKcharacter set, or the library might be incorrectly trying to encode it for the system console instead of the database connection.
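The failure can be reproduced without any database at all. The following is a minimal sketch using only Python's built-in codecs; the sample strings and the helper name can_encode are illustrative assumptions, not part of pymssql.

```python
text = "你好"

# Common Chinese characters exist in GBK, so both encodings succeed,
# but they produce different bytes.
gbk_bytes = text.encode("gbk")
utf8_bytes = text.encode("utf-8")
print(gbk_bytes)   # b'\xc4\xe3\xba\xc3'
print(utf8_bytes)  # b'\xe4\xbd\xa0\xe5\xa5\xbd'


def can_encode(value: str, encoding: str) -> bool:
    """Return True if every character in value exists in the given encoding."""
    try:
        value.encode(encoding)
        return True
    except UnicodeEncodeError:
        return False


# A non-breaking space (U+00A0) and an emoji have no GBK mapping,
# while UTF-8 can represent both.
tricky = "price:\xa0100 \U0001F600"
print(can_encode(tricky, "gbk"))    # False
print(can_encode(tricky, "utf-8"))  # True
```

This is why the error often appears only for some rows: ordinary Chinese text encodes fine in GBK, and the exception is triggered by the occasional character outside that character set.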

Solution 1: The Best Practice (Explicitly Set charset)

The most reliable and correct way to solve this is to explicitly tell pymssql which encoding to use for the connection. For modern applications dealing with Chinese text, utf-8 is the universal standard.

When you create your connection object, add the charset parameter.

    import pymssql

    # --- Configuration ---
    # Replace with your actual server details
    server = 'your_server_name'
    user = 'your_username'
    password = 'your_password'
    database = 'your_database_name'

    conn = None  # lets the finally block know whether connect() succeeded
    try:
        # KEY CHANGE: Add charset='utf-8' to the connect() call
        conn = pymssql.connect(
            server=server,
            user=user,
            password=password,
            database=database,
            charset='utf-8'  # <--- THIS IS THE FIX
        )
        print("Successfully connected to the database!")

        cursor = conn.cursor(as_dict=True)  # as_dict=True returns rows as dictionaries

        # Example: Inserting data with Chinese characters
        chinese_name = '张三'
        insert_query = "INSERT INTO Employees (Name, Department) VALUES (%s, 'IT')"
        cursor.execute(insert_query, (chinese_name,))  # parameters passed as a tuple
        conn.commit()
        print(f"Successfully inserted: {chinese_name}")

        # Example: Fetching data with Chinese characters
        cursor.execute("SELECT Name, Department FROM Employees WHERE Name = %s", (chinese_name,))
        employee = cursor.fetchone()
        if employee:
            print(f"Fetched employee: {employee['Name']} from {employee['Department']}")
        else:
            print("Employee not found.")
    except Exception as e:
        print(f"An error occurred: {e}")
    finally:
        # conn is still None if connect() itself failed
        if conn is not None:
            conn.close()
            print("Database connection closed.")

Why this works: By setting charset='utf-8', you are explicitly instructing pymssql to use the UTF-8 encoding for all data sent to and received from the database. This bypasses any system-specific defaults (like GBK) and ensures consistent behavior across different environments.
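One way to make the explicit charset hard to forget is to build the connection arguments in a single place. The sketch below is only an illustration: connection_settings and open_connection are hypothetical helper names, and the server details are placeholders. It uses only the connect() keyword arguments already shown above.

```python
def connection_settings(server, user, password, database, charset="utf-8"):
    """Build keyword arguments for pymssql.connect() with an explicit charset."""
    return {
        "server": server,
        "user": user,
        "password": password,
        "database": database,
        "charset": charset,
    }


def open_connection(server, user, password, database):
    """Open a connection that always carries an explicit charset."""
    # Imported lazily so this module loads even where pymssql is not installed.
    import pymssql  # third-party package, assumed to be installed
    return pymssql.connect(**connection_settings(server, user, password, database))


# Placeholder values; replace with your actual server details.
settings = connection_settings(
    "your_server_name", "your_username", "your_password", "your_database_name"
)
print(settings["charset"])  # utf-8
```

Every caller that goes through open_connection() then gets the same encoding, regardless of the machine's locale.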

Solution 2: Ensure Your SQL Server is Configured for UTF-8

Setting charset='utf-8' in Python is only half the battle. The columns that receive the data on the SQL Server side must also be able to store Unicode text.

- Database Collation: The collation of your database (and columns) defines how text is sorted and, for VARCHAR columns, which code page is used to store it. While modern SQL Server versions have better UTF-8 support, you still need to make sure that the columns storing Chinese text can represent it.
  - Collation names often end in _CI_AS (Case-Insensitive, Accent-Sensitive), but that suffix only affects comparisons. What matters for VARCHAR storage is the code page: SQL_Latin1_General_CP1_CI_AS (a common default) uses code page 1252 and cannot hold Chinese characters in a VARCHAR column, while Chinese_PRC_CI_AS uses code page 936 (GBK).
  - Important Note: For reliable Unicode storage in all versions, use NVARCHAR instead of VARCHAR for your text columns. NVARCHAR stores data in UTF-16 (a Unicode format) and is designed to handle international characters.
- SQL Server Version: Full, native support for UTF-8 encoding (through collations with the _UTF8 suffix) was introduced in SQL Server 2019 (version 15.x). If you are using an older version, pymssql's charset parameter might behave differently, and NVARCHAR becomes even more critical.

Example Table Creation:

    -- Use NVARCHAR for columns that will store Chinese or other international text
    CREATE TABLE Employees (
        ID INT PRIMARY KEY IDENTITY(1,1),
        Name NVARCHAR(100),      -- Use NVARCHAR!
        Department NVARCHAR(50)  -- Use NVARCHAR!
    );
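To see why the column type matters, the effect can be approximated with Python's own codecs. This is an analogy under stated assumptions, not SQL Server's actual storage code: cp1252 stands in for a VARCHAR column under a Latin1 collation, cp936 for a VARCHAR column under a Chinese_PRC collation, and UTF-16LE for an NVARCHAR column.

```python
name = "张三"

# VARCHAR under a Latin1 collation (code page 1252):
# characters outside the code page degrade to '?'.
latin1_varchar = name.encode("cp1252", errors="replace")

# VARCHAR under a Chinese_PRC collation (code page 936, i.e. GBK): preserved.
gbk_varchar = name.encode("cp936")

# NVARCHAR (UTF-16): preserved regardless of collation.
nvarchar = name.encode("utf-16-le")

print(latin1_varchar)                         # b'??'
print(gbk_varchar.decode("cp936") == name)    # True
print(nvarchar.decode("utf-16-le") == name)   # True
```

The first case is the one to watch for: no exception is raised, and the stored value silently becomes question marks.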

Solution 3: Handle Output Encoding (Console/Files)

Sometimes the error might not be during the database operation but when you try to print the result to the console or write it to a file.

If your Windows console uses an encoding such as GBK, printing a string that contains characters outside that encoding raises an error.

    # This might fail if the console encoding cannot represent every character
    employee = {'Name': '李四', 'Department': 'HR'}
    print(employee)  # Might raise a UnicodeEncodeError

Fix for Console Output:

You can make Python encode its standard output as UTF-8 for the duration of the script. The console itself must still be able to display UTF-8 (on Windows, for example, after running chcp 65001).

    import sys
    import io

    # Redirect stdout to use UTF-8 encoding
    sys.stdout = io.TextIOWrapper(sys.stdout.buffer, encoding='utf-8')

    # Now this should work
    employee = {'Name': '李四', 'Department': 'HR'}
    print(employee)  # Should print correctly: {'Name': '李四', 'Department': 'HR'}
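If changing the output encoding is not an option, another approach is to degrade gracefully: characters the console cannot represent are shown as escape sequences instead of raising an exception. The helper name console_safe below is a hypothetical illustration, not a standard function.

```python
import sys


def console_safe(text, encoding=None):
    """Return text with characters missing from the target encoding shown as escapes."""
    # Fall back to the current stdout encoding, then to UTF-8 if that is unknown.
    encoding = encoding or getattr(sys.stdout, "encoding", None) or "utf-8"
    return text.encode(encoding, errors="backslashreplace").decode(encoding)


# The emoji has no GBK mapping, so it is replaced by its escape sequence.
print(console_safe("ok \U0001F600", "gbk"))  # ok \U0001f600
```

On Python 3.7 and later, sys.stdout.reconfigure(encoding='utf-8') is another way to switch the output encoding without rewrapping the stream.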

For file output, always open the file with explicit UTF-8 encoding:

    # Correct way to write to a file
    with open('output.txt', 'w', encoding='utf-8') as f:
        f.write("你好,世界!\n")
        f.write(employee['Name'])

Summary and Best Practices

- Always Specify charset='utf-8': This is the most important step. Make it a habit when calling pymssql.connect(), for example conn = pymssql.connect(..., charset='utf-8').
- Use NVARCHAR in SQL Server: For any column that needs to store international characters (like Chinese), use the NVARCHAR data type instead of VARCHAR. This is the most robust way to ensure data integrity in the database.
- Check Your Environment: Be aware of your system's default encoding. Note that sys.getdefaultencoding() always reports utf-8 in Python 3, so it does not reveal the system setting; check the locale and console encodings instead with import locale, sys; print(locale.getpreferredencoding(), sys.stdout.encoding). If either is gbk or cp936 and you're on Windows, Solution 1 is essential to avoid conflicts.
- Handle Output: If you need to print to the console or write to files, explicitly specify encoding='utf-8' to prevent UnicodeEncodeError at the final stage.
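The environment check can be gathered into one small diagnostic. This is a sketch using only the standard library; the function name describe_encodings is an assumption made for illustration.

```python
import locale
import sys


def describe_encodings():
    """Collect the encodings that influence string handling in this process."""
    return {
        # Always 'utf-8' in Python 3; says nothing about the OS.
        "sys.getdefaultencoding()": sys.getdefaultencoding(),
        # Default for open() without encoding=; often cp936 on Chinese Windows.
        "locale.getpreferredencoding(False)": locale.getpreferredencoding(False),
        # Encoding used by print(); may be None if stdout was replaced.
        "sys.stdout.encoding": getattr(sys.stdout, "encoding", None),
    }


for name, value in describe_encodings().items():
    print(f"{name}: {value}")
```

If the last two entries report gbk or cp936, any print() or open() call without an explicit encoding is a candidate for the UnicodeEncodeError described above.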