Of course! Let's break down what an SAE (Sparse AutoEncoder) channel is in the context of Python and machine learning.

This concept became popular with the rise of interpretability research on Large Language Models (LLMs), particularly through work such as Anthropic's Transformer Circuits Thread.

The Core Idea: What is an SAE Channel?

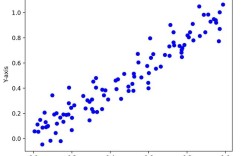

At its simplest, an SAE channel is a single, interpretable "feature" that a Sparse AutoEncoder has learned to represent within a neural network.

Let's break that down:

- Channel: In a neural network, a "channel" usually refers to one dimension of a layer's activations: the output of a single neuron, or a single feature map in a convolutional layer. For example, a layer with 4096 neurons has 4096 channels. An SAE typically takes the entire activation vector of a layer and maps it to a new, usually much larger, set of channels.

- Sparse AutoEncoder (SAE): An SAE is a type of autoencoder trained to reconstruct the activations of a specific layer in a pre-trained model (like an LLM). Its defining property is sparsity: a penalty or constraint during training forces it to use only a small number of its "latent" neurons to represent any given input. This pushes the SAE toward features that are more specific, and often more interpretable, than the raw neurons.

- Feature: A feature is a specific pattern or concept. For example, in the context of an LLM, a feature might fire when the model sees the word "king", or when it's thinking about a specific programming concept, or when it detects syntactic structure.

So, an SAE channel is one of these sparse, interpretable "feature detectors" that the SAE learns.
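To make the definitions above concrete, here is a minimal sketch of a sparse autoencoder in PyTorch. It is a toy under stated assumptions, not how any particular library implements it: the class name `TinySAE`, the sizes, the L1 coefficient, and the random input standing in for LLM activations are all made up for illustration. Each column of the output `feats` is one SAE channel.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class TinySAE(nn.Module):
    """Toy sparse autoencoder: d_model dense inputs -> n_features latents."""

    def __init__(self, d_model: int, n_features: int):
        super().__init__()
        self.encoder = nn.Linear(d_model, n_features)
        self.decoder = nn.Linear(n_features, d_model)

    def encode(self, x: torch.Tensor) -> torch.Tensor:
        # ReLU keeps feature activations non-negative, so many are exactly zero.
        return F.relu(self.encoder(x))

    def forward(self, x: torch.Tensor):
        feats = self.encode(x)
        recon = self.decoder(feats)
        return recon, feats


def sae_loss(x, recon, feats, l1_coeff=1e-3):
    # Reconstruction term: the SAE should reproduce the original activations.
    mse = F.mse_loss(recon, x)
    # Sparsity term: an L1 penalty pushes most feature activations toward zero.
    l1 = feats.abs().sum(dim=-1).mean()
    return mse + l1_coeff * l1


torch.manual_seed(0)
d_model, n_features = 16, 64          # hypothetical sizes; real SAEs are far larger
sae = TinySAE(d_model, n_features)
x = torch.randn(8, d_model)           # random stand-in for a batch of LLM activations
recon, feats = sae(x)
loss = sae_loss(x, recon, feats)
loss.backward()                       # gradients for one training step
print(feats.shape, recon.shape)
```

The latent layer is deliberately wider than the input (64 versus 16 here), which matches the "new, larger set of channels" described above. An L1 penalty is only one way to impose sparsity; other variants exist.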

The Analogy: The Radio Dial

Think of the original, pre-trained LLM as a complex radio that receives a signal (your text input) and produces a complex output of activations.

- Original LLM Activations: The raw, complex "static" from the radio. It's powerful but hard to understand. You can't easily tell what song is playing just by looking at the static.

- SAE: You build a special radio tuner (the SAE) that takes this static and separates it into individual radio stations.

- SAE Channel: Each "channel" on your new tuner is a single, clear radio station. One station might only play classical music (the "royalty" feature). Another might only play rock music (the "Python code" feature). Each channel is highly specific and interpretable on its own.

The "sparsity" part means that for any given song (input text), only a very small number of these stations will be active (have a high signal strength).
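A common way to put a number on this sparsity is the L0 count: how many features are non-zero for each token. The snippet below is a small sketch on a hand-written toy tensor (3 tokens, 10 features); the values are invented for illustration and do not come from a real SAE.

```python
import torch

# Toy feature activations: 3 tokens x 10 SAE features, mostly zeros.
sparse_acts = torch.tensor([
    [0.0, 2.1, 0.0, 0.0, 0.0, 0.0, 0.7, 0.0, 0.0, 0.0],
    [0.0, 0.0, 0.0, 3.4, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0],
    [1.2, 0.0, 0.0, 0.0, 0.0, 0.5, 0.0, 0.0, 0.9, 0.0],
])

# L0 per token: the number of "stations" that are switched on.
l0_per_token = (sparse_acts > 0).sum(dim=-1)
mean_l0 = l0_per_token.float().mean().item()

# Indices of the active features for each token.
active = [torch.nonzero(row > 0).flatten().tolist() for row in sparse_acts]

print(l0_per_token.tolist())  # [2, 1, 3]
print(mean_l0)                # 2.0
print(active)                 # [[1, 6], [3], [0, 5, 8]]
```

In a real SAE the feature dimension is in the thousands or more, while the L0 per token stays a small fraction of that.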

Why Is This Important?

SAEs are a key tool in mechanistic interpretability. The goal is to reverse-engineer how LLMs work. Instead of treating them as a black box, we want to understand the internal "circuits" they use to think.

By training an SAE on an LLM's activations, we can:

- Discover Features: We can find out what the model's internal "concepts" are. Do the learned features correspond to objects, actions, emotions, or abstract ideas?

- Understand Computation: We can see how these features combine to perform tasks. For example, to answer a question, the model might activate a "question" feature, a "relevant-knowledge" feature, and a "generate-answer" feature.

- Debug and Improve Models: If we find a feature that is associated with biased or toxic output, we can potentially intervene to remove or change it.

- Locate Knowledge: We can try to narrow down where in the model's vast network a specific piece of knowledge is stored (e.g., "the capital of France is Paris").
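As a rough illustration of what "intervening" on a feature can look like, the sketch below removes one feature's contribution from an activation vector by subtracting that feature's activation times its decoder direction. Everything here is hypothetical: the weights are random rather than trained, and `encode`, `decode`, and `ablate_feature` are illustrative helpers, not functions from any library.

```python
import torch

torch.manual_seed(0)
d_model, n_features = 8, 32

# Hypothetical SAE weights; a real SAE would supply trained versions of these.
W_enc = torch.randn(d_model, n_features)
b_enc = torch.zeros(n_features)
W_dec = torch.randn(n_features, d_model)
b_dec = torch.zeros(d_model)


def encode(x: torch.Tensor) -> torch.Tensor:
    return torch.relu(x @ W_enc + b_enc)


def decode(feats: torch.Tensor) -> torch.Tensor:
    return feats @ W_dec + b_dec


def ablate_feature(x: torch.Tensor, feature_idx: int) -> torch.Tensor:
    """Return activations with one feature's decoded contribution removed."""
    feats = encode(x)
    # Subtract only this feature's contribution, leaving the rest of x,
    # including whatever the SAE fails to reconstruct, untouched.
    contribution = feats[..., feature_idx, None] * W_dec[feature_idx]
    return x - contribution


x = torch.randn(4, d_model)            # stand-in for a batch of LLM activations
feature_idx = 3
x_ablated = ablate_feature(x, feature_idx)
print(x_ablated.shape)
```

In practice the edited activations would be written back into the model's forward pass (for example with a hook) so that later layers see the change. Whether that actually removes the unwanted behavior has to be checked empirically.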

The "Python" Part: How to Implement It

Working with SAEs in Python involves a few key libraries and steps. The ecosystem is still evolving, but the main players are transformers (from Hugging Face) and torch (PyTorch).

Key Libraries:

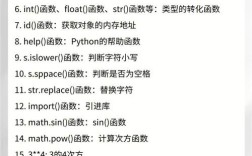

- torch: The core deep learning framework for building and training the SAE.
- transformers (by Hugging Face): Essential for loading the pre-trained LLM (e.g., GPT-2, Llama) and getting its activations.
- datasets (by Hugging Face): For easily loading and processing text datasets to train the SAE.
- sae-lens (SAELens, created by Joseph Bloom): A specialized library that simplifies training and analyzing SAEs on models like GPT-2. It builds on TransformerLens (by Neel Nanda) and is a good place to start.
- circuitsvis: A library of interpretability visualizations (e.g., shading the tokens of a text by how strongly each one activates a feature).

High-Level Workflow in Python:

Here's a step-by-step guide to the process.

Step 1: Setup and Installation

pip install torch transformers datasets sae-lens circuitsvis

Step 2: Load the Pre-trained LLM

You need a base model to get activations from. sae-lens makes this very easy.

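from sae_lens import SAE, HookedSAETransformer

# Load the base model, for example GPT-2 Small. This does not load any SAEs by itself.

model = HookedSAETransformer.from_pretrained("gpt2")

# Load a pre-trained SAE for the residual stream entering layer 10.

# The release and SAE id follow the SAELens pretrained-SAE directory; check it against your installed version.

loaded = SAE.from_pretrained(release="gpt2-small-res-jb", sae_id="blocks.10.hook_resid_pre")

# Some SAELens versions return (sae, cfg_dict, sparsity), others only the SAE, so handle both.

sae = loaded[0] if isinstance(loaded, tuple) else loaded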

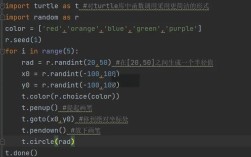

Step 3: Get Activations and Use the SAE

Now you can pass text through the model and use the SAE to analyze the activations.

import torch

# Get some text

text = "The king ruled the kingdom with a wise and just hand."

# Run the model and cache the activations of a specific layer (e.g., layer 10)

# stop_at_layer=11 halts the forward pass after block 10, so the first return value is the residual stream, not logits

_, cache = model.run_with_cache(text, stop_at_layer=11)

# The residual stream entering layer 10 is in the cache

layer_10_activations = cache["resid_pre", 10] # Shape: [batch_size, seq_len, d_model]

# Use the SAE to get the sparse feature activations

# The SAE encoder maps the dense activations to a sparse feature vector

sparse_acts = sae.encode(layer_10_activations) # Shape: [batch_size, seq_len, n_sae_features]

# Position 0 is the BOS token that the model prepends by default, so the token "The" sits at position 1

token_sparse_acts = sparse_acts[0, 1, :] # Shape: [n_sae_features]

# Find the most active features for this token

top_k_features = torch.topk(token_sparse_acts, k=5)

print(f"Top 5 active feature indices for token 'The': {top_k_features.indices}")

print(f"Top 5 activation values: {top_k_features.values}")

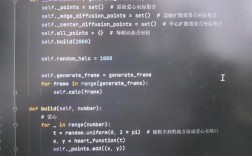

Step 4: Interpret the Features

The indices from the previous step (e.g., tensor([15234, 8976, ...])) are your SAE channels. To understand what they mean, you need to do feature visualization.

The standard method is to use a large dataset of text, see which examples maximally activate each feature, and then inspect those examples.

sae-lens and circuitsvis have tools to help with this.

import torch

from circuitsvis.tokens import colored_tokens

# A handful of illustrative prompts; in practice you would scan a large text dataset

prompts = [

    "The king ruled the kingdom with a wise and just hand.",

    "The queen and the prince waited beside the throne.",

    "def add(a, b): return a + b",

]

# Let's analyze feature 15234 (from our example above)

feature_idx = 15234

# Record this feature's per-token activations on every prompt

results = []

with torch.no_grad():

    for prompt in prompts:

        _, cache = model.run_with_cache(prompt, stop_at_layer=11)

        feature_acts = sae.encode(cache["resid_pre", 10])[0, :, feature_idx]

        results.append((feature_acts.max().item(), prompt, feature_acts.cpu()))

# Rank the prompts by the feature's peak activation, strongest first

results.sort(key=lambda r: r[0], reverse=True)

for score, prompt, _ in results:

    print(f"{score:.3f}  {prompt}")

# Shade each token of the top prompt by its activation (renders in a Jupyter Notebook)

_, top_prompt, top_acts = results[0]

colored_tokens(tokens=model.to_str_tokens(top_prompt), values=top_acts)

When you run this in a notebook, the printed ranking shows which prompts made this SAE channel (feature) fire the most, and circuitsvis renders the top prompt with each token shaded by its activation. If the feature is interpretable, you'll see a clear pattern across the highest-ranked prompts. For example, if it's the "royalty" feature, you might see snippets with "king", "queen", "prince", "throne", etc.

Summary

| Term | Meaning in Python Context |
|---|---|
| SAE Channel | A single neuron in the latent space of a Sparse AutoEncoder. It represents one specific, ideally interpretable, feature that the SAE has learned. |
| Python Workflow | 1. Load a pre-trained LLM and its corresponding SAE (e.g., using sae-lens). 2. Pass text through the model to get internal activations. 3. Use the SAE's encode() method to get sparse feature activations. 4. Identify highly active features (channels). 5. Use a visualization tool (like circuitsvis) to interpret what those features represent. |