Of course! Let's dive into shared_array in Python.

The term "shared array" isn't a single, built-in Python object. Instead, it refers to a collection of techniques and libraries designed to solve a specific problem: sharing data between different processes or threads efficiently and safely.

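In CPython (the standard interpreter), the Global Interpreter Lock (GIL) allows only one thread at a time to execute Python bytecode, so threads give little speedup for CPU-bound tasks. Threads also already share one address space, so they can use the same list or array without any special mechanism. The most common and powerful use case for shared arrays is therefore multiprocessing, where you want multiple processes to work on the same block of data without creating expensive copies.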

Here’s a breakdown of the main ways to achieve this, from the most fundamental to the most user-friendly.

The Core Problem: Why Not Just Use a Normal List or NumPy Array?

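When you start a new process in Python (e.g., with multiprocessing.Process), it gets its own memory space. If you pass a large list or NumPy array to it, Python doesn't magically share the data. With the "spawn" start method (the default on Windows and macOS) and with "forkserver", the arguments are pickled (serialized), sent to the child over an inter-process communication (IPC) channel, and unpickled there; Pool.map and Queue always work this way. With "fork" (POSIX only), the child starts with a copy-on-write copy of the parent's memory. Either way, the child works on its own copy, and any changes it makes are invisible to the parent.

For a small array, this is fine. For a large array (e.g., a 10,000 x 10,000 float64 array, which occupies 800 MB), the copying is slow, memory use grows with every process, and results written by the workers never reach the parent unless you send them back.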

Shared arrays solve this by placing the data in a special region of memory that all processes can access directly, without copying.
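Here is a minimal sketch of the problem. A child process doubles a plain NumPy array in place, and the parent's array is unchanged afterwards. The function name double_in_child is illustrative only:

```python
import multiprocessing as mp

import numpy as np

def double_in_child(arr):
    arr *= 2  # modifies the child's own copy of the array
    print("child sees: ", arr)

if __name__ == "__main__":
    data = np.arange(5)
    p = mp.Process(target=double_in_child, args=(data,))
    p.start()
    p.join()
    print("parent sees:", data)  # still [0 1 2 3 4]
```

This holds under every start method. With "spawn" the child gets an unpickled copy. With "fork" the first write triggers a private copy of the affected memory pages.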

Solution 1: The Classic multiprocessing.Array (Low-Level)

This is the fundamental building block provided by Python's standard multiprocessing library. It's very flexible but also low-level, meaning you have to manage the data type and synchronization yourself.

How it Works:

You create a shared array with a type code (like 'd' for a C double, 'i' for a C int) and either a size (all elements start at zero) or a sequence of initial values. The array is backed by shared memory and, by default, wrapped with a lock.
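Here is a minimal sketch of both ways to create one. It runs in a single process just to show the API, and the variable names are illustrative:

```python
import multiprocessing as mp

# Zero-initialized array of 4 C doubles ('d'); lock=True is the default
zeros = mp.Array('d', 4)
# Array of 3 C ints ('i') initialized from a Python sequence
ints = mp.Array('i', [10, 20, 30])

ints[1] = 25               # single-item assignment, taken under the built-in lock
print(zeros[:])            # [0.0, 0.0, 0.0, 0.0]
print(len(ints), ints[:])  # 3 [10, 25, 30]
```

Slicing works, but it returns an ordinary Python list. That list is a copy, not a view.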

Key Features:

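- Type-Specific: You must declare the data type upfront (e.g., 'i' for int, 'f' for float, 'd' for double).

- 1-Dimensional: It's inherently a one-dimensional array.

- Requires Synchronization: With the default lock=True, each individual read or write is protected by a lock. Compound operations such as arr[i] += 1 (a read followed by a write) are not protected. If multiple processes read and write the same elements, hold the lock explicitly (with arr.get_lock(): ...) to prevent race conditions and lost updates.

- Raw Data: It's a C-style array. Indexing and slicing work, but slices come back as plain Python lists. For reshaping, vectorized arithmetic, or matrix math, you'll usually want to wrap it in a NumPy array, which can be done without copying.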
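One common way to get a NumPy view is to call np.frombuffer on the underlying ctypes object. This is a minimal sketch, and it assumes the dtype is chosen to match the type code ('d' corresponds to float64):

```python
import multiprocessing as mp

import numpy as np

shared = mp.Array('d', 6)  # 6 zero-initialized C doubles

# get_obj() returns the underlying ctypes array; np.frombuffer wraps its
# memory without copying. The dtype must match the type code ('d' -> float64).
view = np.frombuffer(shared.get_obj(), dtype=np.float64).reshape(2, 3)

view[:] = np.arange(6).reshape(2, 3)  # vectorized write into shared memory
view *= 10
print(shared[:])  # [0.0, 10.0, 20.0, 30.0, 40.0, 50.0]
```

Operations on the NumPy view bypass the array's lock. If other processes may write at the same time, wrap them in with shared.get_lock(): yourself.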

Example:

Let's create a shared array of 5 integers and have two processes square its elements, each handling a different subset of the indices.

```python
import multiprocessing
import time

def square_numbers(arr, lock, start, step):
    """
    A worker function that squares every `step`-th element of the shared
    array, beginning at index `start`.
    """
    for i in range(start, len(arr), step):
        with lock:  # Hold the lock for the whole read-modify-write
            val = arr[i]
            time.sleep(0.1)  # Simulate some work
            arr[i] = val * val
        print(f"Process {multiprocessing.current_process().pid}: Squared {val} to {val * val}")

if __name__ == "__main__":
    # 1. Create a shared array of 5 C ints ('i').
    # lock=True (the default) wraps it in a recursive lock, available via get_lock().
    shared_arr = multiprocessing.Array('i', [1, 2, 3, 4, 5], lock=True)
    lock = shared_arr.get_lock()

    # 2. Create and start two processes with disjoint indices, so that no
    # element is squared twice: p1 takes indices 0, 2, 4 and p2 takes 1, 3.
    p1 = multiprocessing.Process(target=square_numbers, args=(shared_arr, lock, 0, 2))
    p2 = multiprocessing.Process(target=square_numbers, args=(shared_arr, lock, 1, 2))
    p1.start()
    p2.start()

    # 3. Wait for both processes to finish
    p1.join()
    p2.join()

    # 4. Print the final result from the main process
    print("\nFinal state of the shared array:")
    print(list(shared_arr))
```

Output (will vary slightly due to timing):

```
Process 12345: Squared 1 to 1
Process 12346: Squared 2 to 4
Process 12345: Squared 3 to 9
Process 12346: Squared 4 to 16
Process 12345: Squared 5 to 25

Final state of the shared array:
[1, 4, 9, 16, 25]
```

Notice how the lock ensures that only one process performs a read-modify-write on the array at a time. This prevents a "race condition", where one process's update silently overwrites another's.
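To see why the lock matters, here is a sketch in which several processes increment the same element. The names add_many and run are hypothetical. The count without the explicit lock depends on timing, so it may or may not come out short on a given run:

```python
import multiprocessing as mp

def add_many(counter, n, use_lock):
    """Increment counter[0] n times, optionally holding the lock for each update."""
    for _ in range(n):
        if use_lock:
            # The default lock is re-entrant, so the locked get/set performed
            # by `+=` can still run while we already hold it.
            with counter.get_lock():
                counter[0] += 1
        else:
            counter[0] += 1  # get and set are each locked, but not together

def run(use_lock, n=2000, workers=4):
    counter = mp.Array('i', 1)  # one zero-initialized C int
    procs = [mp.Process(target=add_many, args=(counter, n, use_lock)) for _ in range(workers)]
    for p in procs:
        p.start()
    for p in procs:
        p.join()
    return counter[0]

if __name__ == "__main__":
    print("without explicit lock:", run(False))  # may be less than 8000
    print("with explicit lock:   ", run(True))   # always 8000
```

Without the explicit lock, two processes can both read the same old value before either one writes, and one of the increments is lost.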

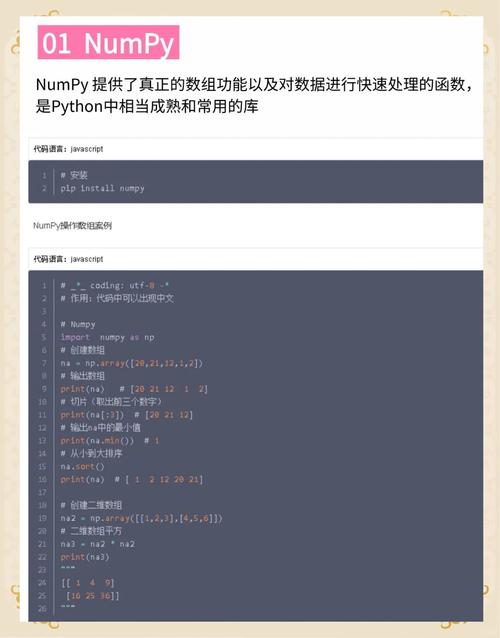

Solution 2: Shared Memory for NumPy (multiprocessing.shared_memory) - Recommended

For any serious numerical work, the recommended approach is to back your NumPy arrays with the standard library's multiprocessing.shared_memory module, introduced in Python 3.8. It's the modern, high-performance option.

How it Works:

It creates a SharedMemory block, which is a raw memory region identified by a unique name that other processes can use to attach to it. You can then create multiple NumPy arrays that all view this same block of memory. They can have different shapes and data types, but they all point to the same underlying data.
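Here is a minimal single-process sketch of two NumPy arrays with different shapes viewing the same block. The sizes and variable names are illustrative:

```python
from multiprocessing import shared_memory

import numpy as np

# Allocate a raw block big enough for six int32 values (24 bytes)
shm = shared_memory.SharedMemory(create=True, size=6 * np.dtype(np.int32).itemsize)

# Two NumPy arrays with different shapes over the very same bytes
flat = np.ndarray((6,), dtype=np.int32, buffer=shm.buf)
grid = np.ndarray((2, 3), dtype=np.int32, buffer=shm.buf)

flat[:] = np.arange(6)
grid[1, 2] = 50         # write through the 2-D view...
result = flat.tolist()  # ...and read it back through the 1-D view
print(result)           # [0, 1, 2, 3, 4, 50]

# Release the views before closing, then free the block
del flat, grid
shm.close()
shm.unlink()
```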

Key Features:

- NumPy Native: Works seamlessly with NumPy arrays. You can slice, reshape, and perform vectorized operations.

- Flexible: You can create arrays of different shapes and types from the same shared memory block.

- Efficient: No data copying occurs after the initial shared memory block is created.

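- Requires Cleanup: This is crucial. Every process should call .close() on its SharedMemory handle when it's done, and the creator of the block must call .unlink() once. Skipping unlink() can leak the memory block on the host system (on POSIX systems it can outlive your program).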
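The close/unlink rules look like this in practice. This is a minimal sketch that attaches a second handle in the same process as a stand-in for a worker process. The names owner and other are illustrative:

```python
from multiprocessing import shared_memory

import numpy as np

# Creator: allocate a block for 5 float64 values and fill it
owner = shared_memory.SharedMemory(create=True, size=5 * np.dtype(np.float64).itemsize)
a = np.ndarray((5,), dtype=np.float64, buffer=owner.buf)
a[:] = 1.5

# Consumer: attach by name (normally in another process; here a second handle)
other = shared_memory.SharedMemory(name=owner.name)
b = np.ndarray((5,), dtype=np.float64, buffer=other.buf)
total = float(b.sum())
print(total)  # 7.5

# Cleanup: drop NumPy views first (close() raises BufferError while views exist),
# then every handle calls close(); only the creator calls unlink().
del b
other.close()
del a
owner.close()
owner.unlink()
```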

Example:

This example shows how to create a shared memory block, attach to it from different processes, and modify the data.

```python
import multiprocessing
from multiprocessing import shared_memory  # submodule; not imported by "import multiprocessing"

import numpy as np

def worker(shm_name, shape, dtype, start, stop):
    """
    Worker function that attaches to the shared memory block by name
    and doubles data[start:stop] in place.
    """
    # Attach to the existing block (create=False is the default)
    shm = shared_memory.SharedMemory(name=shm_name)
    data = np.ndarray(shape, dtype=dtype, buffer=shm.buf)
    pid = multiprocessing.current_process().pid
    print(f"Process {pid} doubling indices {start}..{stop - 1}: {data[start:stop]}")

    # Modify the array in place, so the change lands in shared memory
    data[start:stop] *= 2

    # Drop the NumPy view before closing, otherwise close() raises BufferError
    del data
    shm.close()  # Detach this process; only the creator unlinks

if __name__ == "__main__":
    # 1. Create a NumPy array
    original_array = np.arange(10)
    print(f"Original array in main process: {original_array}")

    # 2. Allocate a shared memory block large enough to hold it
    shm = shared_memory.SharedMemory(create=True, size=original_array.nbytes)

    # 3. Create a NumPy array backed by the shared memory and copy the data in
    shared_array = np.ndarray(original_array.shape, dtype=original_array.dtype, buffer=shm.buf)
    np.copyto(shared_array, original_array)
    print(f"Shared array created in main process: {shared_array}\n")

    # 4. Pass the block's *name* plus shape and dtype (all cheap to pickle),
    # not the array itself. Each worker gets a disjoint half, so no two
    # processes write the same element.
    args = (shm.name, shared_array.shape, shared_array.dtype)
    p1 = multiprocessing.Process(target=worker, args=args + (0, 5))
    p2 = multiprocessing.Process(target=worker, args=args + (5, 10))
    p1.start()
    p2.start()
    p1.join()
    p2.join()

    # 5. Check the result in the main process
    print("\nFinal state of the shared array after workers:")
    print(shared_array)  # [ 0  2  4  6  8 10 12 14 16 18]

    # 6. CRITICAL: Clean up the shared memory.
    # Release the view, close this handle, then unlink; unlink() is what
    # tells the OS the block can be freed, and only the creator calls it.
    del shared_array
    shm.close()
    shm.unlink()
    print("\nShared memory has been unlinked.")
```

Solution 3: High-Level Libraries (Zarr, Dask)

For very large datasets that don't even fit in a single machine's RAM, you use libraries that are built from the ground up for sharing and out-of-core computation.

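- Zarr: Stores arrays as many small (typically compressed) chunks, for example in a directory on disk or in cloud object storage. Multiple processes (or even machines) can read and write chunks in parallel, as long as concurrent writers update different chunks. It's like a shared array that lives on your hard drive.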

- Dask: Builds on top of NumPy and Pandas to create "virtual" arrays that are broken into chunks. Dask schedules computations on these chunks across multiple cores or machines, coordinating everything for you. It can use Zarr as a backend for truly massive datasets.
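Dask's chunked model can be sketched in a few lines. This assumes Dask is installed (for example with pip install "dask[array]"). The shape and chunk sizes are arbitrary illustration values:

```python
import dask.array as da

# A 4000 x 4000 array of ones, split into 4 x 4 = 16 chunks of 1000 x 1000.
# Nothing is computed yet; Dask only records a task graph.
x = da.ones((4000, 4000), chunks=(1000, 1000))
print(x.numblocks)  # (4, 4)

# compute() runs the per-chunk sums in parallel (threaded scheduler by
# default for arrays) and combines the partial results.
total = x.sum().compute()
print(total)  # 16000000.0
```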

These are the go-to solutions for big data, but they are more complex to set up than multiprocessing.shared_memory.

Summary: Which One Should I Use?

| Method | Best For | Pros | Cons |
|---|---|---|---|
| multiprocessing.Array | Simple, low-level data sharing (e.g., small arrays of integers/floats). | Part of the standard library, built-in lock. | Low-level, 1-D only, fixed C type, compound updates need explicit locking, needs wrapping (np.frombuffer) for NumPy work. |
| multiprocessing.shared_memory | The recommended choice for NumPy users. High-performance numerical computing. | Part of the standard library, seamless with NumPy, highly efficient, flexible. | Requires manual cleanup (.close() and .unlink()) and your own synchronization, Python 3.8+. |
| Zarr / Dask | Big Data. Datasets larger than RAM, distributed computing. | Handles massive datasets, parallel by design. | More complex setup, not for simple in-memory tasks. |

Final Recommendation:

If you are doing any kind of numerical or scientific computing with NumPy and need to share data between processes, use multiprocessing.shared_memory. It offers a good balance of performance, ease of use, and integration with the Python data science ecosystem.