Of course! In Python, "async httpio" most likely refers to the modern, high-performance way of making HTTP requests using the httpx library alongside Python's asyncio framework.

Let's break this down into a comprehensive guide.

The Core Concepts: asyncio and httpx

asyncio

asyncio is Python's standard-library module for writing concurrent code with the async/await syntax. Instead of using multiple threads or processes, asyncio runs a single thread with an "event loop" that manages many tasks. When a task reaches an I/O operation (like waiting for a network response), it awaits it and yields control back to the event loop, which runs other tasks in the meantime. This makes it very efficient for I/O-bound work such as web scraping or making many API calls, where most of the time is spent waiting rather than computing.
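To make the event-loop idea concrete, here is a minimal stdlib-only sketch. It assumes `asyncio.sleep` is a fair stand-in for waiting on the network (so it runs offline), and `worker` and `main` are hypothetical names. Two tasks share one thread, and the log shows the loop switching between them whenever one is waiting.

```python
import asyncio


async def worker(name: str, delay: float, log: list[str]) -> None:
    """Simulated I/O-bound task: asyncio.sleep stands in for a network wait."""
    log.append(f"{name} start")
    await asyncio.sleep(delay)  # yields control to the event loop while "waiting"
    log.append(f"{name} done")


async def main() -> list[str]:
    log: list[str] = []
    # Both workers run on one thread; the loop switches between them at each await.
    await asyncio.gather(worker("A", 0.2, log), worker("B", 0.1, log))
    return log


if __name__ == "__main__":
    print(asyncio.run(main()))
```

Running it should print `['A start', 'B start', 'B done', 'A done']`: B finishes first because its simulated wait is shorter, even though A started first.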

httpx

httpx is a modern, feature-rich HTTP client library for Python. It's a spiritual successor to the very popular requests library but has a key advantage: it is built from the ground up to work with asyncio.

- Synchronous: It can be used just like `requests`.
- Asynchronous: It can be used with `asyncio` for high-performance, concurrent requests.

Why Use async httpx? (The Big Benefit)

The primary reason to use async httpx is concurrency.

Imagine you need to fetch the content from 10 different websites.

- Synchronous (Blocking) Approach: You send the first request, wait for the entire response, then send the second, and so on. If each request takes 1 second, the whole job takes about 10 seconds.
- Asynchronous (Non-Blocking) Approach: You start all 10 requests almost simultaneously, and the event loop handles each response as it arrives. Because no request waits for another to finish before starting, the total time is roughly that of the slowest single request: about 1 second (plus a small overhead) in this example.

This is a massive performance gain when dealing with many I/O-bound operations.
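The 10-request comparison above can be sketched without any network access, under the assumption that `asyncio.sleep` is a fair stand-in for request latency. The hypothetical `fake_request` helper uses 0.1 seconds of latency instead of 1 second so the demo runs quickly.

```python
import asyncio
import time


async def fake_request(i: int, latency: float = 0.1) -> int:
    """Hypothetical stand-in for an HTTP call: asyncio.sleep simulates latency."""
    await asyncio.sleep(latency)
    return i


async def run_sequential(n: int) -> float:
    start = time.perf_counter()
    for i in range(n):
        await fake_request(i)  # each request starts only after the previous one ends
    return time.perf_counter() - start


async def run_concurrent(n: int) -> float:
    start = time.perf_counter()
    # All n simulated requests are in flight at the same time.
    await asyncio.gather(*(fake_request(i) for i in range(n)))
    return time.perf_counter() - start


if __name__ == "__main__":
    print(f"sequential: {asyncio.run(run_sequential(10)):.2f}s")
    print(f"concurrent: {asyncio.run(run_concurrent(10)):.2f}s")
```

Expect roughly 1 second for the sequential version and roughly 0.1 seconds for the concurrent one; exact numbers vary by machine.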

Code Examples

First, you need to install httpx:

```bash
pip install httpx
```

Example 1: Basic Asynchronous GET Request

This is the fundamental building block. We define an async function that uses await to wait for the response from an httpx call.

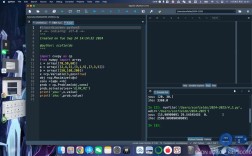

```python
import asyncio

import httpx


async def fetch_single_url(url: str):
    """Fetches a single URL and returns its text (or None if the request fails)."""
    print(f"Fetching {url}...")
    async with httpx.AsyncClient() as client:
        try:
            response = await client.get(url)
            # response.raise_for_status()  # This will raise an exception for 4xx/5xx errors
            print(f"Finished fetching {url}. Status: {response.status_code}")
            return response.text
        except httpx.RequestError as exc:
            print(f"An error occurred while requesting {exc.request.url!r}.")
            return None


async def main():
    # The URLs we want to fetch
    urls = [
        "https://httpbin.org/get",
        "https://www.example.com",
        "https://jsonplaceholder.typicode.com/todos/1",
    ]
    # Run one fetch_single_url coroutine per URL and collect their results in order
    results = await asyncio.gather(*[fetch_single_url(url) for url in urls])

    print("\n--- Results ---")
    for i, result in enumerate(results):
        if result:
            print(f"URL {i+1} fetched successfully. Length: {len(result)} characters.")
        else:
            print(f"URL {i+1} failed to fetch.")


# The standard way to run the main async function
if __name__ == "__main__":
    asyncio.run(main())
```

Key Points:

- `async def`: Defines a coroutine.
- `await client.get(...)`: Pauses the `fetch_single_url` function until the network request is complete, without blocking the entire program.
- `async with httpx.AsyncClient() as client:`: Creates an `AsyncClient` (a session object) which is used to make requests. Using `async with` ensures it's properly closed.
- `asyncio.run(main())`: The entry point that starts the `asyncio` event loop and runs our `main` coroutine.
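One consequence of `async def` worth seeing directly: calling a coroutine function does not run it. The sketch below uses a hypothetical `get_status` coroutine as a stand-in for an httpx call and shows that you only get a coroutine object until you `await` it. Forgetting the `await` is a common bug, which Python reports with a "coroutine ... was never awaited" RuntimeWarning.

```python
import asyncio
import inspect


async def get_status() -> int:
    """Hypothetical coroutine standing in for an httpx call."""
    await asyncio.sleep(0)
    return 200


async def main() -> int:
    coro = get_status()               # calling it only creates a coroutine object...
    assert inspect.iscoroutine(coro)  # ...nothing inside get_status has run yet
    return await coro                 # awaiting actually runs it and returns the result


if __name__ == "__main__":
    print(asyncio.run(main()))  # 200
```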

Example 2: Concurrently Fetching Multiple URLs

This is where asyncio shines. We use asyncio.gather to run multiple coroutines concurrently.

```python
import asyncio
import time

import httpx


async def fetch_url(url: str, client: httpx.AsyncClient):
    """Fetches a single URL using a shared client."""
    print(f"Requesting {url}...")
    response = await client.get(url)
    print(f"Got response from {url} with status {response.status_code}")
    return response.text


async def main_concurrent():
    urls = [
        "https://httpbin.org/delay/1",  # Simulates a 1-second delay
        "https://httpbin.org/delay/2",  # Simulates a 2-second delay
        "https://httpbin.org/delay/3",  # Simulates a 3-second delay
    ]
    # Create a single client to be reused across requests (more efficient)
    async with httpx.AsyncClient() as client:
        # Create a list of coroutines to be run concurrently
        tasks = [fetch_url(url, client) for url in urls]
        # asyncio.gather runs them concurrently and waits for all to complete
        results = await asyncio.gather(*tasks)

    print("\n--- All Results ---")
    for i, result in enumerate(results):
        print(f"Result {i+1} length: {len(result)}")


if __name__ == "__main__":
    print("Running concurrent example...")
    # time.perf_counter() works outside the event loop; calling
    # asyncio.get_event_loop() when no loop is running is deprecated.
    start_time = time.perf_counter()
    asyncio.run(main_concurrent())
    end_time = time.perf_counter()
    print(f"Total time taken: {end_time - start_time:.2f} seconds")
```

What to Expect:

- Sequential Time: If you ran these one by one, it would take ~1 + 2 + 3 = 6 seconds.

- Concurrent Time: When you run this, you'll see the requests start almost simultaneously. The total time will be just over 3 seconds, because the longest-running request (the 3-second one) dictates the total time.
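Both examples rely on `asyncio.gather` returning results in the same order as the awaitables passed to it, not in completion order, which is why `enumerate(results)` lines up with `urls`. This stdlib-only sketch (hypothetical helpers, with `asyncio.sleep` as simulated latency) also shows `return_exceptions=True`, which places a failure in the results list instead of raising it. That matters for Example 2, where `fetch_url` has no error handling, so a single failed request would otherwise make the whole `await asyncio.gather(...)` call raise.

```python
import asyncio


async def delayed_echo(value: str, delay: float) -> str:
    """Hypothetical helper: return `value` after a simulated network delay."""
    await asyncio.sleep(delay)
    return value


async def failing() -> str:
    """Hypothetical helper standing in for a request that errors out."""
    raise ValueError("boom")


async def main() -> list[str]:
    # The first coroutine finishes last, yet gather returns results in argument order.
    return await asyncio.gather(
        delayed_echo("slow", 0.3),
        delayed_echo("medium", 0.2),
        delayed_echo("fast", 0.1),
    )


async def main_with_errors() -> list:
    # With return_exceptions=True the exception object is returned as a result.
    return await asyncio.gather(
        delayed_echo("ok", 0.1), failing(), return_exceptions=True
    )


if __name__ == "__main__":
    print(asyncio.run(main()))              # ['slow', 'medium', 'fast']
    print(asyncio.run(main_with_errors()))  # ['ok', ValueError('boom')]
```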

Example 3: POSTing Data Asynchronously

Making a POST request is just as easy. Pass form fields with the data argument, or a JSON-serializable object with the json argument, to the post method.

```python
import asyncio
import json

import httpx


async def post_data():
    url = "https://httpbin.org/post"
    payload = {
        "name": "John Doe",
        "message": "Hello from async httpx!",
    }
    async with httpx.AsyncClient() as client:
        # For sending JSON data, use the `json` parameter
        response = await client.post(url, json=payload)
        print(f"POST request to {url} completed with status {response.status_code}")
        # httpbin.org returns the request data in the response
        print("Server received our JSON:")
        print(json.dumps(response.json(), indent=2))


if __name__ == "__main__":
    asyncio.run(post_data())
```

Best Practices

- Reuse the `AsyncClient`: Creating a new client for every request is inefficient. The client handles connection pooling, which means it can reuse connections to the same host and skip repeated TCP and TLS handshakes, noticeably reducing latency. Create one client per "logical session" (e.g., one per API you're calling, or one for your entire script) and reuse it.
- Use `async with` for the client: This ensures that network resources are properly closed, even if errors occur.
- Handle exceptions: Network requests can fail for many reasons (DNS issues, refused connections, timeouts, server errors). Wrap your `httpx` calls in a `try...except` block. Transport-level failures raise `httpx.RequestError`, while 4xx/5xx responses raise `httpx.HTTPStatusError` only if you call `response.raise_for_status()`.
- Set timeouts: httpx applies a default timeout of 5 seconds. Tune it on your client or on individual requests to suit the endpoints you call, rather than disabling it with `timeout=None`, which could let your program hang indefinitely.

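```python
import asyncio
import httpx

async def main():
    # A single float applies the same 10-second limit to each phase (connect, read,
    # write, and waiting for a pooled connection); it is not a total deadline.
    async with httpx.AsyncClient(timeout=10.0) as client:
        response = await client.get("https://example.com")
    # Separate limits: 10 seconds by default, but only 5 seconds to connect.
    # httpx.Timeout needs a default value unless all four phases are set explicitly.
    timeouts = httpx.Timeout(10.0, connect=5.0)
    async with httpx.AsyncClient(timeout=timeouts) as client:
        response = await client.get("https://example.com")

asyncio.run(main())
```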

Summary: requests vs. async httpx

| Feature | requests (Synchronous) | httpx (Asynchronous) |
|---|---|---|
| Concurrency | Poor. Requires ThreadPoolExecutor or ProcessPoolExecutor. | Excellent. Built-in asyncio support. |
| HTTP/2 | No support. | Yes, supported (optional: install httpx[http2] and pass http2=True to the client). |
| API | Simple, familiar. | Very similar to requests, easy to learn. |
| Use Case | Simple scripts, one-off requests, applications where concurrency isn't needed. | Web scraping, microservices, calling many APIs, high-performance applications. |