Of course! Let's dive deep into implementing the SVD++ algorithm in Python.

SVD++ is an enhancement of the classic Singular Value Decomposition (SVD) for recommendation systems. It's known for its high accuracy because it incorporates not only the explicit ratings a user gives but also their implicit feedback (e.g., which items they've clicked on, viewed, or purchased).

The Core Idea: Why SVD++?

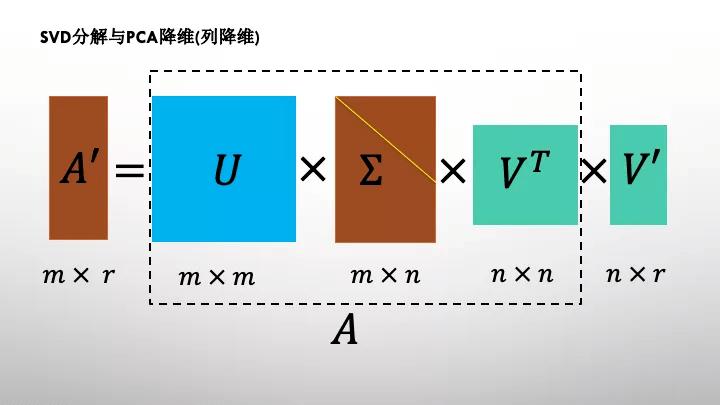

Standard SVD models a user's rating for an item r_ui as:

r_ui = μ + b_u + b_i + q_i^T (p_u - |R(u)|^(1/2) * ∑_{j∈R(u)} y_j)

Let's break this down more intuitively:

-

Standard SVD (Baseline + Latent Factors):

- The global average rating.

b_u: User bias (how this user tends to rate higher or lower than average).b_i: Item bias (how this item tends to be rated higher or lower than average).p_u: User's latent factor vector (captures a user's tastes).q_i: Item's latent factor vector (captures an item's characteristics).- The prediction is

μ + b_u + b_i + p_u · q_i.

-

The "++" Enhancement (Implicit Feedback): SVD++ argues that a user's choice to interact with an item (even without rating it) is valuable information. This is implicit feedback.

R(u): The set of items that useruhas interacted with (rated or not).y_j: An implicit factor vector for itemj. This vector represents the "influence" or "essence" of itemjon a user's overall taste profile.∑_{j∈R(u)} y_j: This term aggregates the influence of all items a user has interacted with. It creates a "taste profile" vector for the user based on their entire history.|R(u)|^(1/2) * ∑_{j∈R(u)} y_j: This is a normalization factor. The more items a user has rated, the less each individual rating should influence their overall taste profile.

In simple terms: SVD++ doesn't just look at a user's explicit ratings (p_u) to predict a new rating. It also looks at the user's entire interaction history (∑ y_j) to build a more complete picture of their preferences. This makes it more robust and powerful.

Implementation from Scratch (The Math Way)

This implementation will follow the original SVD++ paper by Yehuda Koren. We'll use numpy for efficient matrix operations.

Step 1: Setup and Data Preparation

First, let's create a small, sample dataset. We'll use a dictionary of users, items, and ratings. We also need to map user/item IDs to integer indices for our matrices.

import numpy as np

from collections import defaultdict

# Sample data: {user_id: {item_id: rating}}

data = {

'User1': {'ItemA': 5, 'ItemB': 3, 'ItemC': 4},

'User2': {'ItemA': 4, 'ItemB': 5, 'ItemD': 2},

'User3': {'ItemC': 3, 'ItemD': 5, 'ItemE': 4},

'User4': {'ItemA': 2, 'ItemC': 1, 'ItemE': 5},

}

# Create mappings for users and items to integer indices

user_ids = list(data.keys())

item_ids = list(set(item for user_items in data.values() for item in user_items.keys()))

user_to_index = {user: idx for idx, user in enumerate(user_ids)}

item_to_index = {item: idx for idx, item in enumerate(item_ids)}

index_to_item = {idx: item for item, idx in item_to_index.items()}

num_users = len(user_ids)

num_items = len(item_ids)

print(f"Number of users: {num_users}, Number of items: {num_items}")

# Create a rating matrix (users x items) and a binary interaction matrix

ratings_matrix = np.zeros((num_users, num_items))

interaction_matrix = np.zeros((num_users, num_items), dtype=bool)

for user, items in data.items():

u_idx = user_to_index[user]

for item, rating in items.items():

i_idx = item_to_index[item]

ratings_matrix[u_idx, i_idx] = rating

interaction_matrix[u_idx, i_idx] = True

# Global average rating

mu = np.mean(ratings_matrix[ratings_matrix > 0])

print(f"Global average rating (mu): {mu:.2f}")

Step 2: Initialize Parameters

We need to initialize the biases and latent factor vectors with small random values.

# Hyperparameters K = 10 # Number of latent factors lr = 0.01 # Learning rate lambda_reg = 0.1 # Regularization parameter num_epochs = 100 # Initialize parameters # User biases (b_u) b_u = np.zeros(num_users) # Item biases (b_i) b_i = np.zeros(num_items) # User latent factors (p_u) p_u = np.random.normal(scale=1./K, size=(num_users, K)) # Item latent factors (q_i) q_i = np.random.normal(scale=1./K, size=(num_items, K)) # Implicit item factors (y_j) y_j = np.random.normal(scale=1./K, size=(num_items, K))

Step 3: The Training Loop (Gradient Descent)

This is the core of the algorithm. We'll iterate over the known ratings and update our parameters to minimize the prediction error.

def get_user_interaction_sum(user_idx):

"""Calculates the sum of implicit factors for items a user has interacted with."""

interacted_items_indices = np.where(interaction_matrix[user_idx] == True)[0]

if len(interacted_items_indices) == 0:

return np.zeros(K)

return np.sum(y_j[interacted_items_indices], axis=0)

for epoch in range(num_epochs):

total_loss = 0

# Iterate over every known rating

for u_idx in range(num_users):

for i_idx in range(num_items):

if ratings_matrix[u_idx, i_idx] > 0:

r_ui = ratings_matrix[u_idx, i_idx]

# Get the sum of implicit factors for the user

sum_y_j = get_user_interaction_sum(u_idx)

# Calculate the prediction

# p_u_hat is the effective user vector

p_u_hat = p_u[u_idx] + (1 / np.sqrt(len(np.where(interaction_matrix[u_idx])[0]) + 1e-9)) * sum_y_j

pred = mu + b_u[u_idx] + b_i[i_idx] + np.dot(p_u_hat, q_i[i_idx])

# Calculate the error

e_ui = r_ui - pred

# Update parameters using gradient descent

# Update biases

b_u[u_idx] += lr * (e_ui - lambda_reg * b_u[u_idx])

b_i[i_idx] += lr * (e_ui - lambda_reg * b_i[i_idx])

# Update latent factors

p_u[u_idx] += lr * (e_ui * q_i[i_idx] - lambda_reg * p_u[u_idx])

q_i[i_idx] += lr * (e_ui * p_u_hat - lambda_reg * q_i[i_idx])

# Update implicit factors y_j for all items the user has interacted with

interacted_items_indices = np.where(interaction_matrix[u_idx] == True)[0]

for j_idx in interacted_items_indices:

y_j[j_idx] += lr * ( (e_ui / np.sqrt(len(interacted_items_indices) + 1e-9)) * q_i[i_idx] - lambda_reg * y_j[j_idx])

total_loss += e_ui**2

rmse = np.sqrt(total_loss / np.sum(ratings_matrix > 0))

if (epoch + 1) % 10 == 0:

print(f"Epoch {epoch+1}/{num_epochs}, RMSE: {rmse:.4f}")

Step 4: Making Predictions

Now that our model is trained, we can write a function to predict a rating for any user-item pair.

def predict_rating(user_id, item_id):

"""Predicts a rating for a given user and item."""

if user_id not in user_to_index or item_id not in item_to_index:

return mu # Return global average if user/item is unknown

u_idx = user_to_index[user_id]

i_idx = item_to_index[item_id]

# Get the sum of implicit factors for the user

sum_y_j = get_user_interaction_sum(u_idx)

# Calculate the effective user vector

p_u_hat = p_u[u_idx] + (1 / np.sqrt(len(np.where(interaction_matrix[u_idx])[0]) + 1e-9)) * sum_y_j

# Make the prediction

prediction = mu + b_u[u_idx] + b_i[i_idx] + np.dot(p_u_hat, q_i[i_idx])

return prediction

# --- Example Predictions ---

print("\n--- Making Predictions ---")

# Prediction for a known rating

pred_known = predict_rating('User1', 'ItemA')

print(f"Predicted rating for User1 on ItemA: {pred_known:.2f} (Actual: 5)")

# Prediction for a missing rating

pred_missing = predict_rating('User1', 'ItemD')

print(f"Predicted rating for User1 on ItemD: {pred_missing:.2f}")

# Prediction for a new user

pred_new_user = predict_rating('NewUser', 'ItemA')

print(f"Predicted rating for NewUser on ItemA: {pred_new_user:.2f} (Actual: 4)")

Implementation using surprise

For any real-world application, you should use a well-tested library like scikit-surprise (or surprise). It's optimized, efficient, and handles many of the low-level details for you.

First, install it:

pip install scikit-surprise

Step 1: Load Data and Create SVD++ Model

surprise makes this incredibly simple.

from surprise import Dataset, SVDpp, accuracy

from surprise.model_selection import train_test_split

# Load the built-in MovieLens 100k dataset

# You can also load your own data using Reader and Dataset.load_from_df

data = Dataset.load_builtin('ml-100k')

# Train/test split

trainset, testset = train_test_split(data, test_size=0.25)

# Instantiate the SVDpp algorithm

# The parameters (n_factors, lr_all, reg_all) can be tuned

svdpp = SVDpp(n_factors=50, lr_all=0.005, reg_all=0.02)

# Train the model

print("Training SVDpp model...")

svdpp.fit(trainset)

print("Training complete.")

Step 2: Evaluate the Model

# Evaluate on the test set

predictions = svdpp.test(testset)

rmse = accuracy.rmse(predictions)

mae = accuracy.mae(predictions)

print(f"\nSVDpp Test RMSE: {rmse:.4f}")

print(f"SVDpp Test MAE: {mae:.4f}")

Step 3: Make Predictions for Specific Users/Items

# Get a specific user and item ID from the dataset

# (You can find these in the 'ml-100k/u.data' file)

uid = str(196) # User ID

iid = str(302) # Item ID (movie ID)

# Get the raw rating for this pair (if it exists)

raw_user_id = trainset.to_raw_uid(uid)

raw_item_id = trainset.to_raw_iid(iid)

print(f"\nPredicting for User '{raw_user_id}' and Item '{raw_item_id}'...")

# Predict the rating

pred = svdpp.predict(uid, iid, r_ui=4.0, verbose=True) # r_ui is optional, just for display

Summary and Comparison

| Feature | SVD (from scratch) | SVD++ (from scratch) | surprise.SVDpp |

|---|---|---|---|

| Core Idea | Models users/items with latent factors. | Adds implicit feedback to the user profile. | A robust, production-ready implementation of SVD++. |

| Complexity | Moderate. Requires understanding of matrix factorization. | High. More complex due to the implicit feedback term. | Low. Abstracts away all complexity. |

| Data | Requires explicit ratings. | Requires explicit ratings + implicit interactions. | Handles both seamlessly. |

| Code | Lots of code for data prep, training, and prediction. | Even more code due to handling of y_j factors. |

A few lines of code to train and predict. |

| Best For | Learning the fundamentals of matrix factorization. | Learning the advanced SVD++ algorithm in detail. | Real-world applications, competitions, and production systems. |

Recommendation:

- To learn: Implementing it from scratch is an excellent exercise to truly understand what's happening under the hood.

- To build: Always use a library like

scikit-surprise. It's faster, more stable, and less prone to bugs. You can spend your time on feature engineering, model selection, and tuning instead of on the implementation details.