Of course! A Python rate limiter is a crucial tool for controlling the rate of API calls, web scraping, or any task where you need to prevent overwhelming a server or getting blocked.

Here's a comprehensive guide covering the most common and effective ways to implement rate limiting in Python, from simple manual solutions to powerful libraries.

The Simple (But Limited) Approach: time.sleep()

This is the most basic method. You execute an action, then pause for a fixed amount of time before the next one.

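How it works: After each request, you pause for a fixed interval before sending the next one. A slightly smarter variant records when the last request was sent and sleeps only for whatever part of the interval has not already elapsed.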

Pros:

- Extremely simple to understand and implement.

- No external libraries needed.

Cons:

- Inflexible: It adds a fixed delay after every request, whether or not you are close to the limit. If the limit is 10 requests per second and you sleep 0.1 seconds after each one, 10 requests take at least 1 second plus the time the requests themselves take, so your real throughput ends up below what the limit allows.

- Doesn't handle bursts: It doesn't allow for a "burst" of requests, even if your rate limit permits it.

Example:

import time

import requests

API_URL = "https://api.example.com/data"


def make_request_with_fixed_delay():
    """Makes a request, then waits for a fixed delay."""
    try:
        response = requests.get(API_URL, timeout=10)
        response.raise_for_status()  # Raise an exception for 4xx/5xx status codes
        print(f"Request successful at {time.time()}: {response.status_code}")
        return response.json()
    except requests.exceptions.RequestException as e:
        print(f"Request failed: {e}")
        return None
    finally:
        # Wait 0.5 seconds after every request, whether it succeeded or failed.
        # This lives in `finally` so the early return above does not skip it.
        time.sleep(0.5)


# --- Usage ---
for i in range(5):
    make_request_with_fixed_delay()
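The "sleep only for the remaining time" idea can be sketched as a small helper. This is a minimal illustration, not a production limiter: the class name `MinIntervalLimiter` and its `clock` and `sleep` parameters are hypothetical, added here so the timing logic can be exercised without real waiting.

```python
import time


class MinIntervalLimiter:
    """Enforces a minimum gap between calls, sleeping only for the remainder."""

    def __init__(self, min_interval, clock=time.monotonic, sleep=time.sleep):
        self.min_interval = min_interval
        self._clock = clock      # Injected so tests can use a fake clock
        self._sleep = sleep
        self._last_call = None   # No call has been made yet

    def wait(self):
        """Blocks until min_interval has passed since the last call.

        Returns the number of seconds actually slept.
        """
        slept = 0.0
        if self._last_call is not None:
            remaining = self.min_interval - (self._clock() - self._last_call)
            if remaining > 0:
                self._sleep(remaining)
                slept = remaining
        self._last_call = self._clock()
        return slept


if __name__ == "__main__":
    limiter = MinIntervalLimiter(min_interval=0.1)
    for i in range(3):
        limiter.wait()  # Call this right before each request
        print(f"Request {i} sent at {time.monotonic():.2f}")
```

Compared with an unconditional sleep, time already spent inside the request counts toward the interval, so a slow request is not followed by a full extra delay. It still cannot send a burst.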

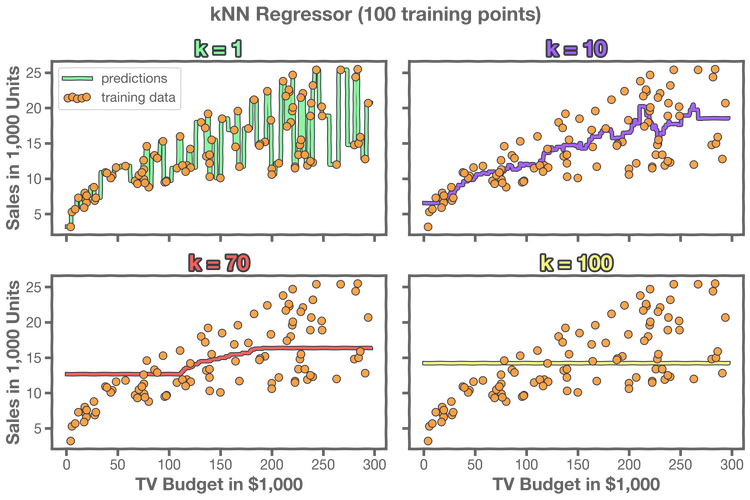

The Smarter Approach: The Token Bucket Algorithm

This is a classic and highly effective algorithm for rate limiting. It's much more flexible than time.sleep().

How it works: Imagine a bucket that holds tokens.

- Tokens are added to the bucket at a fixed rate (e.g., 10 tokens per second).

- Each request consumes one token from the bucket.

- If the bucket is empty, the request must wait until a token is available.

- The bucket has a maximum capacity, allowing for "bursts" of requests.

This perfectly models many real-world API rate limits (e.g., "100 requests per minute, with a burst of 10").
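To make the bucket arithmetic concrete, here is a small simulation over a list of request timestamps. It is only a sketch: the function name `simulate_token_bucket` and the sample numbers are made up for illustration, and it assumes the bucket starts full.

```python
def simulate_token_bucket(rate, capacity, arrival_times):
    """Returns one bool per arrival: True if a token was available.

    rate: tokens added per second
    capacity: maximum number of tokens the bucket can hold
    arrival_times: non-decreasing request timestamps, in seconds
    """
    tokens = float(capacity)  # Assumption: the bucket starts full
    last = None
    results = []
    for t in arrival_times:
        if last is not None:
            # Credit tokens for the elapsed time, capped at capacity
            tokens = min(capacity, tokens + (t - last) * rate)
        last = t
        if tokens >= 1:
            tokens -= 1
            results.append(True)
        else:
            results.append(False)
    return results


if __name__ == "__main__":
    # 2 tokens per second, burst of 3
    arrivals = [0.0, 0.0, 0.0, 0.0, 0.5, 0.5, 2.0]
    print(simulate_token_bucket(rate=2, capacity=3, arrival_times=arrivals))
    # [True, True, True, False, True, False, True]
```

The first three requests drain the initial burst, the fourth is rejected, and half a second later exactly one token (0.5 s × 2 tokens/s) has been refilled.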

Pros:

- Flexible: Allows for controlled bursts of requests.

- Efficient: Only pauses when necessary.

- Models real-world limits well.

Cons:

- More complex to implement correctly than time.sleep().

Example Implementation:

import threading
import time


class TokenBucket:
    def __init__(self, rate, capacity):
        """
        :param rate: The rate at which tokens are added (tokens per second).
        :param capacity: The maximum number of tokens the bucket can hold.
        """
        self._rate = rate
        self._capacity = capacity
        self._tokens = capacity  # Start full so an initial burst is allowed
        # monotonic() never jumps backwards when the system clock is adjusted
        self._last_refill_timestamp = time.monotonic()
        self._lock = threading.Lock()

    def _refill(self):
        """Refills the bucket based on elapsed time. The caller must hold the lock."""
        now = time.monotonic()
        time_passed = now - self._last_refill_timestamp
        self._tokens = min(self._capacity, self._tokens + time_passed * self._rate)
        self._last_refill_timestamp = now

    def consume(self, tokens=1):
        """
        Consumes a number of tokens from the bucket.

        :param tokens: The number of tokens to consume.
        :return: True if tokens were consumed, False otherwise.
        """
        # Refill and consume under a single lock so that two threads cannot
        # both credit the same elapsed interval or spend the same token.
        with self._lock:
            self._refill()
            if self._tokens >= tokens:
                self._tokens -= tokens
                return True
            return False


# --- Usage ---
# Rate: 10 tokens per second. Capacity: 5 (allows a burst of 5)
rate_limiter = TokenBucket(rate=10, capacity=5)


def make_request_with_token_bucket():
    while not rate_limiter.consume(1):
        print("Bucket is empty. Waiting...")
        time.sleep(0.1)  # Wait a bit before trying again
    print(f"Token consumed. Making request at {time.time()}")
    # In a real scenario, you would make your API call here.
    # requests.get(API_URL)
    time.sleep(0.01)  # Simulate work


# --- Simulate concurrent requests ---
threads = []
for i in range(10):
    thread = threading.Thread(target=make_request_with_token_bucket)
    threads.append(thread)
    thread.start()

for thread in threads:
    thread.join()

print("All requests processed.")
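Polling with a fixed time.sleep(0.1) either wakes up too often or waits longer than needed. One possible refinement is to compute how long it will take for the missing tokens to arrive and sleep for that long. The sketch below is one way to do that; `BlockingTokenBucket`, `acquire`, and the injectable `clock` and `sleep` parameters are hypothetical names chosen for this illustration.

```python
import threading
import time


class BlockingTokenBucket:
    """Token bucket whose acquire() sleeps for the computed shortfall."""

    def __init__(self, rate, capacity, clock=time.monotonic, sleep=time.sleep):
        self._rate = rate
        self._capacity = capacity
        self._tokens = float(capacity)  # Start full
        self._clock = clock
        self._sleep = sleep
        self._last = clock()
        self._lock = threading.Lock()

    def _try_consume(self, tokens):
        """Returns 0.0 on success, else the seconds until enough tokens exist."""
        with self._lock:
            now = self._clock()
            self._tokens = min(self._capacity,
                               self._tokens + (now - self._last) * self._rate)
            self._last = now
            if self._tokens >= tokens:
                self._tokens -= tokens
                return 0.0
            return (tokens - self._tokens) / self._rate

    def acquire(self, tokens=1):
        """Blocks until the tokens are consumed; returns total seconds waited."""
        if tokens > self._capacity:
            raise ValueError("cannot acquire more tokens than the bucket holds")
        waited = 0.0
        while True:
            wait = self._try_consume(tokens)
            if wait == 0.0:
                return waited
            # Sleep outside the lock so other threads are not blocked,
            # then loop in case another thread took the token first.
            self._sleep(wait)
            waited += wait


if __name__ == "__main__":
    bucket = BlockingTokenBucket(rate=20, capacity=2)
    for i in range(4):
        print(f"call {i}: waited {bucket.acquire():.3f}s")
```

The loop re-checks after sleeping because, with several threads, another caller may consume the refilled token during the sleep.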

The Recommended Approach: Use a Library

For production code, it's almost always better to use a well-tested library. A mature library has already dealt with the edge cases and thread-safety issues that a hand-rolled limiter tends to get wrong.

Here are two of the most popular libraries.

Option A: ratelimit

A simple, no-frills decorator-based library. It's perfect for straightforward rate limiting on functions.

Installation:

pip install ratelimit

Example:

from ratelimit import RateLimitException, limits, sleep_and_retry
import time
import requests

# Define the rate limit: 10 calls per 10 seconds
CALLS_PER_10_SECONDS = 10


# This decorator raises RateLimitException if the limit is exceeded
@limits(calls=CALLS_PER_10_SECONDS, period=10)
def limited_api_call():
    """Makes a single API call, rate-limited by the decorator."""
    print(f"Making request at {time.time()}")
    # In a real app:
    # response = requests.get("https://api.example.com/data")
    # return response.json()
    return "success"


# Adding sleep_and_retry makes the call wait until the rate limit window resets
@sleep_and_retry
@limits(calls=CALLS_PER_10_SECONDS, period=10)
def limited_api_call_with_wait():
    """Makes a single API call, waits if the limit is hit."""
    print(f"Making request (with wait) at {time.time()}")
    # In a real app:
    # response = requests.get("https://api.example.com/data")
    # return response.json()
    return "success"


# --- Usage ---
print("--- Testing @limits (will raise exception) ---")
try:
    for i in range(15):
        limited_api_call()
except RateLimitException as e:
    print(f"Caught expected exception: {e}")

print("\n--- Testing @sleep_and_retry (will wait) ---")
start_time = time.time()
for i in range(15):
    limited_api_call_with_wait()
end_time = time.time()

print(f"Total time for 15 calls (should be about 10s): {end_time - start_time:.2f} seconds")
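To see roughly what a decorator like this does, here is a simplified fixed-window version built only on the standard library. It is a sketch for understanding, not ratelimit's actual implementation; `fixed_window_limit`, `RateLimitExceeded`, and the `clock` parameter are hypothetical names.

```python
import functools
import threading
import time


class RateLimitExceeded(Exception):
    """Raised when a call would exceed the limit (hypothetical name)."""

    def __init__(self, period_remaining):
        super().__init__(f"rate limit exceeded, retry in {period_remaining:.2f}s")
        self.period_remaining = period_remaining


def fixed_window_limit(calls, period, clock=time.monotonic):
    """Allows at most `calls` invocations per `period`-second window."""
    def decorator(func):
        lock = threading.Lock()
        state = {"window_start": clock(), "count": 0}

        @functools.wraps(func)
        def wrapper(*args, **kwargs):
            with lock:
                now = clock()
                if now - state["window_start"] >= period:
                    # The window has expired: start a new one
                    state["window_start"] = now
                    state["count"] = 0
                if state["count"] >= calls:
                    remaining = period - (now - state["window_start"])
                    raise RateLimitExceeded(remaining)
                state["count"] += 1
            # Call the wrapped function outside the lock
            return func(*args, **kwargs)
        return wrapper
    return decorator


@fixed_window_limit(calls=2, period=10)
def ping():
    return "ok"
```

Calling ping() a third time within the same 10-second window raises RateLimitExceeded, and a caller that prefers waiting can sleep for period_remaining and retry.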

Option B: pyrate-limiter

A more powerful and versatile library. It is built around the leaky-bucket algorithm, can enforce several rate limits at once (for example per second and per hour), and supports different storage backends such as in-memory, Redis, and SQLite.

Installation (the example below is written against the 2.x API; later major versions renamed or changed several of these classes and methods, so check the documentation for the version you install):

pip install "pyrate-limiter<3"

Example (catching BucketFullException and waiting before retrying):

from pyrate_limiter import Limiter, Duration, RequestRate
from pyrate_limiter.exceptions import BucketFullException
import time
import requests

# Define the rate limit: 10 calls per 10 seconds
rate = RequestRate(10, Duration.SECOND * 10)
limiter = Limiter(rate)

# In the 2.x API, limits are tracked per identity (any string you choose)
IDENTITY = "example-api"


def make_request_with_pyrate_limiter():
    while True:
        try:
            # Acquire a slot for this identity; raises BucketFullException if none is free
            limiter.try_acquire(IDENTITY)
        except BucketFullException as err:
            # In 2.x, meta_info reports the time until the next slot is available
            time_to_wait = max(0.0, err.meta_info["remaining_time"])
            print(f"Rate limit exceeded. Waiting for {time_to_wait:.2f} seconds.")
            time.sleep(time_to_wait)
            continue  # Retry in a loop rather than recursing
        print(f"Making request at {time.time()}")
        # In a real app:
        # response = requests.get("https://api.example.com/data")
        # return response.json()
        return "success"


# --- Usage ---
start_time = time.time()
for i in range(15):
    make_request_with_pyrate_limiter()
end_time = time.time()

print(f"\nTotal time for 15 calls (should be about 10s): {end_time - start_time:.2f} seconds")
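The idea of tracking a separate limit for each caller or resource can be illustrated with a sliding window of timestamps per identity. The sketch below uses only the standard library and is not how pyrate-limiter is implemented; `SlidingWindowLimiter` and its `clock` parameter are hypothetical, and the class is not thread-safe as written.

```python
import time
from collections import defaultdict, deque


class SlidingWindowLimiter:
    """Allows at most `max_calls` per `period` seconds for each identity."""

    def __init__(self, max_calls, period, clock=time.monotonic):
        self._max_calls = max_calls
        self._period = period
        self._clock = clock
        self._calls = defaultdict(deque)  # identity -> timestamps of recent calls

    def try_acquire(self, identity):
        """Returns 0.0 if the call is allowed, else seconds until a slot frees."""
        now = self._clock()
        window = self._calls[identity]
        # Drop timestamps that have aged out of the window
        while window and now - window[0] >= self._period:
            window.popleft()
        if len(window) < self._max_calls:
            window.append(now)
            return 0.0
        # The oldest recorded call determines when the next slot opens
        return self._period - (now - window[0])


if __name__ == "__main__":
    limiter = SlidingWindowLimiter(max_calls=2, period=1.0)
    for user in ["alice", "alice", "alice", "bob"]:
        wait = limiter.try_acquire(user)
        print(f"{user}: {'allowed' if wait == 0.0 else f'retry in {wait:.2f}s'}")
```

Unlike a fixed window, the limit here is measured over the most recent `period` seconds at every call, so a caller cannot double the rate by straddling a window boundary.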

Summary: Which One Should You Use?

| Method | Best For | Pros | Cons |
|---|---|---|---|
| time.sleep() | Quick scripts, learning, simple tasks. | Simple, no dependencies. | Inflexible, inefficient, not for production. |
| Token Bucket (Manual) | Understanding the core algorithm, specific needs. | Flexible, models real limits well. | Complex to implement correctly (threading). |
| ratelimit Library | Simple function-level rate limiting. | Easy decorator syntax, sleep_and_retry option. | Less flexible for complex scenarios. |
| pyrate-limiter Library | Production applications, high concurrency, multiple simultaneous limits. | Powerful, efficient, thread-safe, multiple storage backends. | Slightly more complex to set up initially. |

Recommendation:

- For learning or a simple script, start with the manual Token Bucket to understand how it works.

- For most production applications, pyrate-limiter is the best choice due to its power, efficiency, and robustness.

- If your needs are very simple and you just need to decorate a few functions, ratelimit is an excellent and easy-to-use alternative.