Of course! The term `to_categorical` in Python is most famously associated with the Keras library, a high-level API for building neural networks. The function you're looking for is `tf.keras.utils.to_categorical`.

Let's break it down in detail.

What is to_categorical?

to_categorical is a utility function that converts a vector of integer labels (class indices) into a binary matrix (one-hot encoded matrix).
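Conceptually, the conversion puts a 1 in each row at the column given by that row's label and 0 everywhere else. The sketch below reproduces the idea in plain NumPy. `one_hot` is a hypothetical helper written for illustration, not the Keras source, and it assumes 1-D, non-negative integer labels.

```python
import numpy as np

def one_hot(labels, num_classes=None):
    """Hypothetical helper: one-hot encode a 1-D array of integer labels."""
    labels = np.asarray(labels, dtype=np.int64)
    if num_classes is None:
        # Infer the class count as max label + 1
        num_classes = int(labels.max()) + 1
    # All-zero matrix: one row per sample, one column per class
    encoded = np.zeros((labels.shape[0], num_classes), dtype=np.float32)
    # Set the column matching each sample's label to 1
    encoded[np.arange(labels.shape[0]), labels] = 1.0
    return encoded

print(one_hot([0, 1, 2, 1]))
```

Each row contains exactly one 1, located at the column equal to the original integer label.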

Why is this needed?

Many machine learning models, especially those for multi-class classification, expect the target labels in one-hot encoded format. In Keras, the `categorical_crossentropy` loss is the usual example. This is different from a simple integer label (e.g., 0, 1, 2).

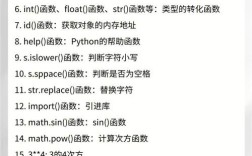

- Integer Label (Sparse): Represents a class with a single number, e.g. four samples labeled `[0, 1, 2, 1]`.
- One-Hot Encoded Label (Dense): Represents a class with a vector where only the index corresponding to the class is 1, and all other entries are 0.
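Both formats carry the same information. The one-hot version simply stores one entry per class for every sample instead of one entry per sample. A small NumPy sketch with made-up sizes (1,000 samples, 10 classes) shows the difference and the round trip between the two forms. `np.eye(num_classes)[labels]` is a common NumPy one-liner for one-hot encoding, used here instead of Keras.

```python
import numpy as np

# Made-up dataset size: 1,000 samples spread over 10 classes
num_samples, num_classes = 1000, 10
labels = (np.arange(num_samples) % num_classes).astype(np.int32)  # sparse labels

# Dense one-hot version of the same labels (one row of the identity per label)
one_hot = np.eye(num_classes, dtype=np.float32)[labels]

print(labels.shape, one_hot.shape)  # (1000,) (1000, 10)
print(labels.nbytes)                # 4000 bytes
print(one_hot.nbytes)               # 40000 bytes

# Nothing is lost: argmax over each row recovers the integer labels
print(np.array_equal(np.argmax(one_hot, axis=1), labels))  # True
```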

The Problem: Integer Labels vs. One-Hot Encoding

Imagine you have 3 classes: Cat (0), Dog (1), and Fish (2).

Your model's final layer might output 3 values, representing the "confidence" for each class.
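In a typical classifier those 3 values come from a softmax activation, which turns raw scores (logits) into positive numbers that sum to 1. The sketch below assumes a softmax output layer and uses made-up logits for illustration.

```python
import numpy as np

def softmax(logits):
    """Convert raw scores into probabilities that sum to 1."""
    # Subtracting the max avoids overflow in exp() and leaves the result unchanged
    shifted = logits - np.max(logits)
    exps = np.exp(shifted)
    return exps / exps.sum()

# Hypothetical raw scores for Cat, Dog, Fish
logits = np.array([1.0, 2.9, 1.7])
probs = softmax(logits)

print(probs.round(2))
print(np.isclose(probs.sum(), 1.0))  # True
print(np.argmax(probs))              # 1 (Dog has the highest score)
```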

Scenario 1: Using Integer Labels (Not Recommended for Final Layer)

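If you keep integer labels but use a loss that expects one-hot targets (such as `categorical_crossentropy`), the target and the output don't line up: the target is a single number, while the output is `[0.1, 0.7, 0.2]`. How should the two be compared?

- Is the output predicting class 1 (Dog)?

- Or should the model regress a single continuous value between 0 and 2? That would imply an ordering (Fish is "closer" to Dog than to Cat) that categories don't have, so it doesn't fit the goal of classification.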
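To see the ordering problem concretely, take a true class of Cat (0) and compare squared errors. On raw indices, mistaking a Cat for a Fish costs four times as much as mistaking it for a Dog, purely because of the arbitrary numbering. With one-hot vectors, both mistakes cost the same. The class constants below are illustrative.

```python
import numpy as np

CAT, DOG, FISH = 0, 1, 2
true_label = CAT

# Regression-style squared error on raw class indices
print((DOG - true_label) ** 2)   # 1
print((FISH - true_label) ** 2)  # 4

# The same comparison using one-hot vectors
eye = np.eye(3)
cat, dog, fish = eye[CAT], eye[DOG], eye[FISH]
print(np.sum((dog - cat) ** 2))   # 2.0
print(np.sum((fish - cat) ** 2))  # 2.0
```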

Scenario 2: Using One-Hot Encoded Labels (Correct Approach)

Your labels would look like this:

- `0` -> `[1, 0, 0]` (Cat)
- `1` -> `[0, 1, 0]` (Dog)
- `2` -> `[0, 0, 1]` (Fish)

Now, the model's output [0.1, 0.7, 0.2] is compared directly to the target label [0, 1, 0]. The model learns to maximize the output at the correct index (index 1 in this case). This is a clear and well-defined problem.
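This comparison is usually done with categorical cross-entropy, which for one sample is `-sum(y * log(p))`. Because the one-hot target zeroes out every term except the true class, the loss reduces to `-log(p_true)`, so raising the output at the correct index lowers the loss. A NumPy sketch of that arithmetic follows. The `eps` clip is an assumption added here as a common safeguard against `log(0)`.

```python
import numpy as np

def categorical_crossentropy(y_true, y_pred, eps=1e-7):
    """Cross-entropy between a one-hot target and a probability vector."""
    # Clip predictions so log(0) can't occur
    y_pred = np.clip(y_pred, eps, 1.0)
    return -np.sum(y_true * np.log(y_pred))

target = np.array([0.0, 1.0, 0.0])      # Dog, one-hot encoded
prediction = np.array([0.1, 0.7, 0.2])  # the model output from the text

loss = categorical_crossentropy(target, prediction)
print(round(float(loss), 4))  # 0.3567, i.e. -log(0.7)

# A more confident correct prediction gives a lower loss
better = categorical_crossentropy(target, np.array([0.05, 0.9, 0.05]))
print(round(float(better), 4))  # 0.1054, i.e. -log(0.9)
```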

How to Use tf.keras.utils.to_categorical

Here is a step-by-step guide with code examples.

Installation

First, make sure you have TensorFlow installed:

```bash
pip install tensorflow
```

Basic Example

Let's convert a simple list of integers into a one-hot encoded matrix.

```python
import tensorflow as tf

# Our integer labels: 4 samples, 3 classes (0 = Cat, 1 = Dog, 2 = Fish)
labels = [0, 1, 2, 1]

# Total number of classes. Set it explicitly: counting the unique values in the
# data (e.g. len(np.unique(labels))) undercounts if some class is missing.
num_classes = 3

# Use the to_categorical function
one_hot_labels = tf.keras.utils.to_categorical(labels, num_classes=num_classes)

print("Original Labels:", labels)
print("One-Hot Encoded Labels:")
print(one_hot_labels)
```

Output:

```
Original Labels: [0, 1, 2, 1]
One-Hot Encoded Labels:
[[1. 0. 0.]
 [0. 1. 0.]
 [0. 0. 1.]
 [0. 1. 0.]]
```

Detailed Explanation of Parameters

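In Keras 2 (TensorFlow 2.15 and earlier), the function signature is:

```python
tf.keras.utils.to_categorical(y, num_classes=None, dtype='float32')
```

- `y`: Your integer labels. This can be a list, a NumPy array, or a TensorFlow tensor.
- `num_classes`: (Optional) The total number of classes. If `None`, it is inferred from the data as `max(y) + 1`. It's good practice to specify it explicitly, since a given batch may not contain the highest class.
- `dtype`: The data type of the output matrix. `'float32'` is common for neural network targets; `'int32'` or `'uint8'` can also be used if you don't need floating-point numbers.

In Keras 3 (the `tf.keras` bundled with TensorFlow 2.16 and later), the documented signature is `to_categorical(x, num_classes=None)` and the `dtype` argument is gone, so cast the result yourself if you need a specific type.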
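Inferring the class count from the data can silently go wrong when the labels you pass in don't include every class. The NumPy sketch below contrasts `len(np.unique(y))`, the `max(y) + 1` rule, and an explicit count. The labels and the 5-class setup are made up for illustration.

```python
import numpy as np

# Hypothetical labels for a 5-class problem where classes 2 and 4 never appear
y = np.array([0, 3, 1, 3])

print(len(np.unique(y)))  # 3 -> too few columns; label 3 would be out of range
print(y.max() + 1)        # 4 -> still misses class 4
NUM_CLASSES = 5           # known from the problem definition, not from the data

one_hot = np.eye(NUM_CLASSES, dtype=np.float32)[y]
print(one_hot.shape)      # (4, 5)
```

Passing the known class count keeps the output width the same across batches, which is what the model's final layer expects.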

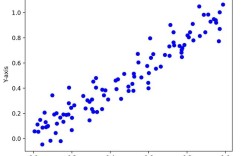

A More Realistic Example (with a Dataset)

Let's see how you would use this in a typical machine learning workflow.

```python
import numpy as np
import tensorflow as tf

# 1. Sample data
# Let's say we have 100 samples, each belonging to one of 5 classes (0-4).
# The labels are random, so your printed values will differ from the output below.
num_samples = 100
num_classes = 5
y_integer = np.random.randint(0, num_classes, size=num_samples)
print("First 5 original labels:", y_integer[:5])

# 2. Convert to one-hot encoding
y_one_hot = tf.keras.utils.to_categorical(y_integer, num_classes=num_classes)

print("\nShape of original labels:", y_integer.shape)
print("Shape of one-hot labels:", y_one_hot.shape)
print("\nFirst 5 one-hot encoded labels:")
print(y_one_hot[:5])
```

Output (from one run):

```
First 5 original labels: [3 1 0 2 4]

Shape of original labels: (100,)
Shape of one-hot labels: (100, 5)

First 5 one-hot encoded labels:
[[0. 0. 0. 1. 0.]
 [0. 1. 0. 0. 0.]
 [1. 0. 0. 0. 0.]
 [0. 0. 1. 0. 0.]
 [0. 0. 0. 0. 1.]]
```

The Reverse: From One-Hot Back to Integers

Sometimes you need to convert the model's predictions (probability vectors with one value per class, not strict one-hot vectors) back to a single class index for evaluation or interpretation. `np.argmax` does this: it returns the index of the largest value.

```python
import numpy as np

# Model's prediction for a single sample (e.g., the first one)
prediction = np.array([0.1, 0.05, 0.1, 0.7, 0.05])  # High confidence for class 3

# Convert the probability vector back to an integer label
predicted_class_index = np.argmax(prediction)

print("Model Prediction (probabilities):", prediction)
print("Predicted Class Index:", predicted_class_index)  # Output will be 3

# If you have a full batch of predictions:
batch_predictions = np.array([
    [0.1, 0.8, 0.1],    # Predicts class 1
    [0.9, 0.05, 0.05],  # Predicts class 0
    [0.2, 0.2, 0.6],    # Predicts class 2
])

predicted_batch_indices = np.argmax(batch_predictions, axis=1)  # axis=1: max within each row

print("\nBatch Predictions:")
print(batch_predictions)
print("\nPredicted Class Indices for the batch:", predicted_batch_indices)
```

Output:

```
Model Prediction (probabilities): [0.1  0.05 0.1  0.7  0.05]
Predicted Class Index: 3

Batch Predictions:
[[0.1  0.8  0.1 ]
 [0.9  0.05 0.05]
 [0.2  0.2  0.6 ]]

Predicted Class Indices for the batch: [1 0 2]
```

Summary

| Task | Function/Method | When to Use |
|---|---|---|
| Convert integers -> one-hot | `tf.keras.utils.to_categorical(y, num_classes)` | Preparing labels for a loss that expects one-hot targets (e.g., `categorical_crossentropy`) in classification tasks. |
| Convert one-hot or probabilities -> integers | `np.argmax(predictions, axis=1)` | Turning the model's predictions into class indices for metrics like accuracy or for display. |
| Keep integer labels (no conversion) | `tf.keras.losses.SparseCategoricalCrossentropy()` | An alternative to `to_categorical`: this loss accepts integer labels directly, so the one-hot matrix is never built, which can save memory when there are many classes. |
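Sparse categorical cross-entropy computes the same loss as categorical cross-entropy. It reads each row's probability at the integer label's index instead of multiplying by a one-hot vector. The NumPy sketch below implements both formulas on the example batch from above and shows that they agree. These helper functions are illustrative, not the Keras implementations.

```python
import numpy as np

# The batch of predicted probabilities from the argmax example (3 samples, 3 classes)
probs = np.array([
    [0.1, 0.8, 0.1],
    [0.9, 0.05, 0.05],
    [0.2, 0.2, 0.6],
])
int_labels = np.array([1, 0, 2])
one_hot = np.eye(3)[int_labels]

def categorical_ce(y_one_hot, p):
    # Mean over samples of -sum(y * log p)
    return np.mean(-np.sum(y_one_hot * np.log(p), axis=1))

def sparse_categorical_ce(y_int, p):
    # Pick each row's probability at its integer label, then average -log p
    return np.mean(-np.log(p[np.arange(len(y_int)), y_int]))

print(np.isclose(categorical_ce(one_hot, probs),
                 sparse_categorical_ce(int_labels, probs)))  # True
```

In Keras, this choice corresponds to compiling with `loss='sparse_categorical_crossentropy'` on integer labels versus `loss='categorical_crossentropy'` on the output of `to_categorical`.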