Of course! Let's break down the relationship between Python, Apache Storm, and Hadoop. This is a classic topic in big data processing, as these three technologies were often used together in the "old" (but still relevant) big data stack.

Here’s a comprehensive guide covering what each is, how they work together, and the modern alternatives.

The Three Players: A Quick Refresher

Python

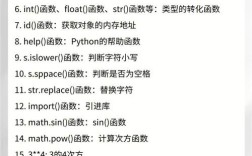

- What it is: A high-level, general-purpose programming language.

- Key Feature: Extremely versatile, with a massive ecosystem of libraries for everything from web development (Django, Flask) to data science (Pandas, NumPy, Scikit-learn) and machine learning (TensorFlow, PyTorch).

- Role in the Stack: It's the "glue" or the "language of implementation". You use Python to write the business logic that runs inside your big data frameworks.

Apache Storm

- What it is: A real-time, distributed, fault-tolerant computation system. It's designed for processing unbounded streams of data as they arrive.

- Key Concept: The Stream Processing model. Data flows continuously through a topology of processing components.

- Core Components:

  - Spout: A source of data streams (e.g., reading from a Kafka topic, a Twitter feed, or a network socket).

  - Bolt: A processing unit that consumes data from one or more streams, performs a function (filtering, transforming, aggregating), and emits new streams.

  - Topology: The entire graph of Spouts and Bolts, wired together. This is your "Storm application."

- Analogy: Think of Storm as a massive assembly line for data. Each Bolt is a worker on the line who takes a part (data), modifies it, and passes it to the next worker.
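To make the spout, bolt, and topology roles concrete, here is a minimal single-process sketch in plain Python. It does not use Storm at all: generators stand in for streams, a finite list stands in for a live feed, and the names `sentence_spout`, `split_bolt`, `count_bolt`, and `run_topology` are hypothetical.

```python
from collections import Counter
from typing import Iterable, Iterator, List, Tuple


def sentence_spout(lines: Iterable[str]) -> Iterator[str]:
    """Spout: the source of the stream (a finite list stands in for a live feed)."""
    for line in lines:
        yield line


def split_bolt(sentences: Iterable[str]) -> Iterator[str]:
    """Bolt: transforms each incoming sentence into a stream of lowercase words."""
    for sentence in sentences:
        for word in sentence.lower().split():
            yield word


def count_bolt(words: Iterable[str]) -> Iterator[Tuple[str, int]]:
    """Bolt: keeps a running count and emits (word, count) after every word."""
    counts: Counter = Counter()
    for word in words:
        counts[word] += 1
        yield word, counts[word]


def run_topology(lines: Iterable[str]) -> List[Tuple[str, int]]:
    """Topology: the wiring spout -> split bolt -> count bolt."""
    return list(count_bolt(split_bolt(sentence_spout(lines))))


if __name__ == "__main__":
    for word, count in run_topology(["the cat", "the dog"]):
        print(word, count)
```

In a real cluster each component would run as many parallel tasks across machines; this sketch only illustrates the direction of data flow.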

Hadoop (Specifically, Hadoop MapReduce)

- What it is: A distributed storage and batch processing framework. It's designed for processing large, bounded datasets stored on disk.

- Key Concept: The Batch Processing model. You have a fixed dataset, and you run a job to process it from start to finish. The job can take minutes, hours, or even days.

- Core Components:

  - HDFS (Hadoop Distributed File System): The distributed storage layer that breaks files into blocks and replicates them across a cluster.

  - YARN (Yet Another Resource Negotiator): The cluster resource manager that allocates CPU and memory to applications.

  - MapReduce: The processing engine. A job is split into two phases:

    - Map: Processes input data in parallel and emits intermediate key-value pairs.

    - Reduce: Aggregates all intermediate values for a given key.

- Analogy: Think of Hadoop MapReduce as a giant, annual inventory count. You have a massive warehouse of data (HDFS). You dispatch teams (Map tasks) to go through every item and label it by category, and then final teams (Reduce tasks) tally up the results for each category.
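The two phases can be sketched in a few lines of plain Python. This is an in-memory illustration, not Hadoop code: the function names are hypothetical, and the `shuffle` step (grouping intermediate pairs by key, which the framework performs between Map and Reduce) is written out explicitly so the data flow is visible.

```python
from collections import defaultdict
from typing import Dict, Iterable, Iterator, List, Tuple


def map_phase(record: str) -> Iterator[Tuple[str, int]]:
    """Map: emit an intermediate (key, value) pair for every word in a record."""
    for word in record.split():
        yield word, 1


def shuffle(pairs: Iterable[Tuple[str, int]]) -> Dict[str, List[int]]:
    """Shuffle: group all intermediate values by key (done by the framework in Hadoop)."""
    grouped: Dict[str, List[int]] = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return grouped


def reduce_phase(key: str, values: List[int]) -> Tuple[str, int]:
    """Reduce: aggregate all intermediate values for one key."""
    return key, sum(values)


def run_job(records: Iterable[str]) -> Dict[str, int]:
    """Run map, shuffle, and reduce over a bounded dataset from start to finish."""
    intermediate = (pair for record in records for pair in map_phase(record))
    grouped = shuffle(intermediate)
    return dict(reduce_phase(key, values) for key, values in grouped.items())
```

Because each `map_phase` call depends only on its own record, and each `reduce_phase` call only on one key, both phases can be spread across many machines.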

How Python, Storm, and Hadoop Work Together

The key to understanding their relationship is to recognize that Storm and Hadoop MapReduce solve two different, but often complementary, problems.

- Storm = Real-time Processing: "What is happening right now?"

- Hadoop = Batch Processing: "What happened over the last day/hour/week?"

Together, they can serve as the two processing layers of a Lambda Architecture.

The Lambda Architecture

The Lambda Architecture is a data processing architecture designed to handle both real-time and batch data to produce a complete and accurate view of data in a system. It has three layers:

- Batch Layer (Hadoop):

  - Role: Stores all the raw, immutable data and pre-computes the "batch views" (e.g., daily totals, user profiles).

  - How it fits: Hadoop MapReduce jobs run periodically (e.g., every night) on the entire historical dataset stored in HDFS. This is slow, but because each run recomputes from the complete dataset, its results are the authoritative ones.

- Speed Layer (Storm):

  - Role: Computes real-time views on the most recent data as it arrives.

  - How it fits: A Storm topology processes the live data stream. It can answer questions like "How many users are active right now?" or "What are the trending topics in the last 5 minutes?". This view is fast but may be approximate or incomplete, since it covers only the data that the batch layer has not yet processed.

- Serving Layer:

  - Role: Combines the results from the Batch and Speed layers to serve queries to users or applications.

  - How it fits: A database (like Apache Cassandra or HBase) stores the views from both layers. When a query comes in, the system fetches the most recent real-time data from the Speed Layer and merges it with the comprehensive historical data from the Batch Layer.
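The merge performed at query time can be sketched as follows. This is a simplified illustration under two stated assumptions: the metric is additive (for example, page-view counts), and the real-time view contains only events that arrived after the last batch run, so nothing is counted twice. The function names and the dictionary-based views are hypothetical stand-ins for tables in a serving database.

```python
from typing import Dict


def query(key: str, batch_view: Dict[str, int], realtime_view: Dict[str, int]) -> int:
    """Answer one query by adding the batch total and the recent real-time delta."""
    return batch_view.get(key, 0) + realtime_view.get(key, 0)


def merge_views(batch_view: Dict[str, int], realtime_view: Dict[str, int]) -> Dict[str, int]:
    """Build a complete view: batch totals plus counts seen since the last batch run."""
    merged = dict(batch_view)  # copy so the batch view itself is never mutated
    for key, recent in realtime_view.items():
        merged[key] = merged.get(key, 0) + recent
    return merged


if __name__ == "__main__":
    batch = {"home": 1000, "cart": 250}    # computed by the nightly batch job
    realtime = {"home": 12, "checkout": 3}  # accumulated by the speed layer since then
    print(merge_views(batch, realtime))
```

When the next batch run finishes, the real-time entries it now covers would be discarded, which is how the batch layer eventually corrects any inaccuracy in the speed layer.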

Concrete Example: Real-time Analytics for a Website

Imagine you want to analyze user behavior on a large e-commerce site.

- Data Ingestion: User clickstream events (page views, clicks, purchases) are generated and sent to a message queue like Apache Kafka.

- Speed Layer (Storm):

  - A Python Storm topology is built.

  - A Spout reads from Kafka.

  - Bolt 1 (Python): Parses the raw event data.

  - Bolt 2 (Python): Calculates real-time metrics, like "number of active users in the last minute" or "real-time sales per second."

  - These real-time metrics are displayed on a live dashboard for the marketing team.

- Batch Layer (Hadoop):

  - The same raw clickstream events are also written to HDFS.

  - Every night, a Python Hadoop MapReduce job runs.

  - Mapper (Python): Reads all events for the day and emits key-value pairs like (user_id, product_id).

  - Reducer (Python): Aggregates this data to compute complex, historical reports, like "top 10 selling products for each user segment over the last month" or "customer lifetime value."

- Serving Layer:

  - The results from the nightly Hadoop job are loaded into a data warehouse.

  - The live dashboard might show the real-time sales (from Storm) alongside the total sales for the day (pulled from the data warehouse).
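As an illustration of the kind of logic Bolt 2 might contain, here is a sketch of a sliding-window counter for "active users in the last minute". It is plain Python rather than Storm code, the class name `ActiveUserWindow` is hypothetical, and it assumes events arrive in timestamp order (a real stream would need a strategy for late or out-of-order events).

```python
from collections import deque
from typing import Deque, Dict, Tuple


class ActiveUserWindow:
    """Tracks how many distinct users produced an event within the last N seconds."""

    def __init__(self, window_seconds: float = 60.0) -> None:
        self.window_seconds = window_seconds
        self.events: Deque[Tuple[float, str]] = deque()  # (timestamp, user_id), oldest first
        self.per_user: Dict[str, int] = {}               # events per user still inside the window

    def add(self, timestamp: float, user_id: str) -> None:
        """Record one event and drop anything that has fallen out of the window."""
        self.events.append((timestamp, user_id))
        self.per_user[user_id] = self.per_user.get(user_id, 0) + 1
        self._evict(timestamp)

    def _evict(self, now: float) -> None:
        """Remove events that are at least window_seconds older than `now`."""
        while self.events and self.events[0][0] <= now - self.window_seconds:
            _, old_user = self.events.popleft()
            self.per_user[old_user] -= 1
            if self.per_user[old_user] == 0:
                del self.per_user[old_user]

    def active_users(self, now: float) -> int:
        """Return the number of distinct users seen inside the current window."""
        self._evict(now)
        return len(self.per_user)
```

Keeping a per-user event count alongside the queue means each event is added and evicted once, so the cost per event stays constant regardless of window size.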

How to Use Python with Storm and Hadoop

Python with Storm

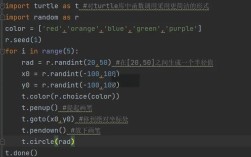

The most common way to write Storm topologies in Python is using streamparse. It's a library that makes it easy to create and submit Python-based topologies to a Storm cluster.

Example streamparse Bolt:

    # In a file called bolts.py
    from streamparse import Bolt


    class WordCountBolt(Bolt):
        # This bolt will count the occurrences of each word
        outputs = ['word', 'count']

        def initialize(self, conf, ctx):
            self.counts = {}  # Use a dictionary to store counts in memory

        def process(self, tup):
            word = tup.values[0]
            # If we've seen the word before, increment the count
            self.counts[word] = self.counts.get(word, 0) + 1
            # Emit the word and its new count
            self.emit([word, self.counts[word]])

You would then define a topology in a separate file that connects a Spout (e.g., reading from a file) to this WordCountBolt.
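One detail matters when wiring this bolt: because each bolt instance keeps its own in-memory `counts` dictionary, the totals are only correct if every occurrence of a given word reaches the same instance. Storm's fields grouping provides that guarantee by partitioning tuples on a field's value. The sketch below illustrates the idea in plain Python with hash partitioning; `partition_for` and `route_and_count` are hypothetical names, and CRC32 is used here only because it is deterministic, not because it is the hash Storm uses.

```python
import zlib
from collections import Counter
from typing import Iterable, List


def partition_for(word: str, num_tasks: int) -> int:
    """Pick a task index from the word's value, so equal words map to the same task."""
    return zlib.crc32(word.encode("utf-8")) % num_tasks


def route_and_count(words: Iterable[str], num_tasks: int) -> List[Counter]:
    """Simulate num_tasks parallel counting bolts, each with its own private counts."""
    tasks = [Counter() for _ in range(num_tasks)]
    for word in words:
        tasks[partition_for(word, num_tasks)][word] += 1
    return tasks


if __name__ == "__main__":
    for index, counts in enumerate(route_and_count("a b a c b a".split(), 3)):
        print(index, dict(counts))
```

With a random (shuffle) distribution instead, the same word could land on several instances and each would report only a partial count.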

Python with Hadoop (MapReduce)

You can write MapReduce jobs in Python using Hadoop Streaming. This utility allows you to create and run MapReduce jobs with any executable or script as the mapper or reducer.

Example: Word Count with Python and Hadoop Streaming

- Mapper (mapper.py): Reads lines from standard input, splits each into words, and emits each word with a count of 1.

      #!/usr/bin/env python3
      # mapper.py
      import sys

      for line in sys.stdin:
          # Remove leading and trailing whitespace
          line = line.strip()
          # Split the line into words
          words = line.split()
          # Output each word with a count of 1
          for word in words:
              print(f"{word}\t1")

- Reducer (reducer.py): Reads the word/count pairs from the mapper, sums the counts for each word, and outputs the final result.

      #!/usr/bin/env python3
      # reducer.py
      import sys

      current_word = None
      current_count = 0

      for line in sys.stdin:
          # Parse the tab-separated word/count pair emitted by the mapper
          try:
              word, count = line.rstrip('\n').split('\t', 1)
              count = int(count)
          except ValueError:
              continue  # Skip malformed lines

          # Hadoop sorts mapper output by key, so identical words arrive consecutively
          if current_word == word:
              current_count += count
          else:
              if current_word is not None:
                  # Output the count for the previous word
                  print(f"{current_word}\t{current_count}")
              current_word = word
              current_count = count

      # Output the last word (nothing is printed if the input was empty)
      if current_word is not None:
          print(f"{current_word}\t{current_count}")

- Run the Job on Hadoop:

      # Make the scripts executable
      chmod +x mapper.py reducer.py

      # Run the Hadoop Streaming job
      # (the location of the streaming jar varies by installation)
      hadoop jar $HADOOP_HOME/share/hadoop/tools/lib/hadoop-streaming-*.jar \
          -files mapper.py,reducer.py \
          -input /input/path/to/textfiles \
          -output /output/path \
          -mapper mapper.py \
          -reducer reducer.py
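Before submitting to a cluster, it can help to check the word-count logic locally. Hadoop Streaming sorts the mapper output by key before the reducer sees it, and the sketch below emulates that contract in a single process with `sorted` and `itertools.groupby`. The function names are hypothetical; they mirror the logic of the mapper and reducer scripts rather than importing them.

```python
from itertools import groupby
from typing import Iterable, Iterator, List


def map_lines(lines: Iterable[str]) -> Iterator[str]:
    """Same logic as the mapper: one 'word<TAB>1' line per word."""
    for line in lines:
        for word in line.strip().split():
            yield f"{word}\t1"


def reduce_lines(sorted_lines: Iterable[str]) -> Iterator[str]:
    """Same logic as the reducer: sum counts over runs of consecutive identical words."""
    pairs = (line.split("\t", 1) for line in sorted_lines)
    for word, group in groupby(pairs, key=lambda pair: pair[0]):
        total = sum(int(count) for _, count in group)
        yield f"{word}\t{total}"


def run_local(lines: Iterable[str]) -> List[str]:
    """Emulate map -> sort -> reduce; sorted() plays the role of Hadoop's shuffle and sort."""
    return list(reduce_lines(sorted(map_lines(lines))))


if __name__ == "__main__":
    for output_line in run_local(["b a", "a c a"]):
        print(output_line)
```

The sort is what makes the reducer's "compare with the previous word" approach valid: without it, occurrences of the same word would not be adjacent and would be emitted as separate partial counts.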

The Modern Context: Is this Stack Still Relevant?

This combined stack was very popular in the early-to-mid 2010s. However, the big data landscape has evolved significantly.

Why the "Storm + Hadoop" model is less common today:

- The Rise of Unified Engines: Frameworks like Apache Flink and Apache Spark can handle both batch and stream processing through a single, unified API. This simplifies the architecture considerably, because it removes the need for a Lambda Architecture built from two separate processing systems.

- Hadoop MapReduce is Mostly Obsolete: For most use cases, Spark has replaced MapReduce as the primary batch processing engine on Hadoop. Spark's in-memory processing is significantly faster than MapReduce's disk-based approach.

- Simpler Stream Processing: For many simple streaming tasks, lightweight tools like Apache Kafka Streams or even serverless functions (AWS Lambda, Google Cloud Functions) are sufficient and easier to manage than a full Storm cluster.

The Modern Equivalent:

The modern stack looks more like this:

- Storage: HDFS or cloud object storage (S3, GCS).

- Unified Processing: Apache Spark (with its Structured Streaming module) is the dominant choice. It can run batch jobs and sophisticated stream processing pipelines.

- Message Queue: Apache Kafka remains the de-facto standard for ingesting and buffering data streams.

- Language: Python is more popular than ever in this space, thanks to libraries like PySpark.

Summary Table

| Feature | Apache Storm | Hadoop MapReduce | Modern Alternative (Spark) |
|---|---|---|---|
| Processing Model | Real-time, Unbounded Streams | Batch, Bounded Datasets | Unified (Batch & Streaming) |
| Latency | Milliseconds to Seconds | Minutes to Hours | Low (Streaming) to High (Batch) |
| Data Source | Live streams (Kafka, etc.) | Files on HDFS | Files, HDFS, Kafka, Databases |
| Fault Tolerance | At-least-once processing | Exactly-once processing | Exactly-once processing |
| Python Integration | streamparse library | Hadoop Streaming | PySpark (Excellent, first-class) |
| Primary Use Case | Live dashboards, fraud detection, real-time alerts | Large-scale ETL, historical analysis | Most modern data pipelines |