Of course! Working with punctuation is a common task in Python, especially in text processing, data cleaning, and natural language processing (NLP). Here’s a comprehensive guide covering different methods, from simple string operations to powerful libraries.

Summary of Methods

| Method | Best For | Pros | Cons |
|---|---|---|---|
| string.punctuation | Simple, common punctuation removal. | Part of the standard library, nothing to install, fast. | Doesn't handle Unicode punctuation outside ASCII. |
| Regular Expressions | Complex pattern matching (e.g., keeping hyphens). | Extremely powerful and flexible. | Can be slower and harder to read. |
| str.translate() | High-performance bulk removal of many characters. | Very fast for large texts. | Requires creating a translation table. |
| NLP Libraries (NLTK, spaCy) | Advanced NLP tasks (tokenization, lemmatization). | Context-aware, handles complex cases. | Overkill for simple removal, requires installation. |

Method 1: Using string.punctuation (The Simplest Way)

Python's standard library has a pre-defined string containing all common ASCII punctuation characters. This is the most straightforward method for basic cleaning.

Step 1: Import the string module

import string

Step 2: See what characters are considered punctuation

print(string.punctuation)

Output:

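!"#$%&'()*+,-./:;<=>?@[\]^_`{|}~

Step 3: Remove Punctuation from a String

You could iterate through your string and build a new one, excluding any punctuation characters, but the idiomatic way is to build a translation table that maps every punctuation character to None and pass it to str.translate().

Example: Using str.translate()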

import string

text = "Hello, World! This is a test... with some punctuation, right?"

# Create a translation table that maps each punctuation character to None

translator = str.maketrans('', '', string.punctuation)

# Use the translate method to remove punctuation

cleaned_text = text.translate(translator)

print(f"Original: {text}")

print(f"Cleaned: {cleaned_text}")

Output:

Original: Hello, World! This is a test... with some punctuation, right?

Cleaned: Hello World This is a test with some punctuation right

Why str.translate() is better than a for loop here:

While a for loop works, str.translate() performs the whole replacement in a single optimized pass and is typically much faster, especially for large amounts of text.
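For comparison, here is a minimal sketch of the for-loop approach next to the translation-table approach. The function names `remove_punct_loop` and `remove_punct_translate` are made up for this illustration; both rely only on the standard library and should produce identical results.

```python
import string


def remove_punct_loop(text: str) -> str:
    """Build a new string one character at a time, skipping ASCII punctuation."""
    punct = set(string.punctuation)  # a set makes each membership check cheap
    kept = []
    for ch in text:
        if ch not in punct:
            kept.append(ch)
    return "".join(kept)


def remove_punct_translate(text: str) -> str:
    """Remove ASCII punctuation with a translation table."""
    return text.translate(str.maketrans("", "", string.punctuation))


sample = "Hello, World! This is a test... with some punctuation, right?"
loop_result = remove_punct_loop(sample)
translate_result = remove_punct_translate(sample)

print(loop_result)
print(loop_result == translate_result)
```

If speed matters for your data, timing both functions with the standard timeit module on a representative sample is the most reliable way to see the difference.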

Method 2: Using Regular Expressions (The Powerful Way)

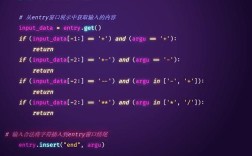

Regular expressions (regex) are perfect for when you need more control. For example, what if you want to keep hyphens (-) and apostrophes (') because they are part of words like "state-of-the-art" or "don't"?

The re module in Python's standard library is used for this.

Step 1: Import the re module

import re

Step 2: Define a Pattern and Use re.sub()

We'll use re.sub() to substitute (replace) any character that matches our pattern with an empty string.

Example: Removing ALL punctuation

This is similar to the string.punctuation method but uses regex syntax.

import re

text = "Hello, World! This is a test... with some punctuation, right?"

# The pattern [^\w\s] matches any character that is NOT a word character (\w) or whitespace (\s)

cleaned_text = re.sub(r'[^\w\s]', '', text)

print(f"Original: {text}")

print(f"Cleaned: {cleaned_text}")

Output:

Original: Hello, World! This is a test... with some punctuation, right?

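Cleaned: Hello World This is a test with some punctuation right

Note that \w also matches the underscore, so this pattern leaves _ in place. Use [^\w\s]|_ if underscores should be removed as well.

Example: Keeping Hyphens and Apostrophes

This is where regex shines. Because the character class inside the square brackets [] lists exactly the characters to remove, we can simply leave out the ones we want to keep.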

import re

text = "This is a state-of-the-art example, don't you think?"

# The character class lists every ASCII punctuation character EXCEPT the hyphen and the apostrophe

# Note: [, \ and ] are escaped with a backslash so they are treated as literal characters inside the set.

cleaned_text = re.sub(r'[!"#$%&()*+,./:;<=>?@\[\\\]^_`{|}~]', '', text)

print(f"Original: {text}")

print(f"Cleaned: {cleaned_text}")

Output:

Original: This is a state-of-the-art example, don't you think?

Cleaned: This is a state-of-the-art example don't you think

Notice how "state-of-the-art" and "don't" are preserved.
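Typing out the character class by hand is easy to get wrong. One possible alternative is to derive it from string.punctuation and let re.escape() handle the escaping. The names `KEEP` and `strip_punct_keep` are hypothetical and chosen just for this sketch.

```python
import re
import string

# Characters we want to preserve (an assumption for this example)
KEEP = "-'"

# Every ASCII punctuation character except the ones in KEEP
to_remove = "".join(ch for ch in string.punctuation if ch not in KEEP)

# re.escape() escapes anything that would be special inside the character class
pattern = re.compile("[" + re.escape(to_remove) + "]")


def strip_punct_keep(text: str) -> str:
    """Remove ASCII punctuation but leave hyphens and apostrophes alone."""
    return pattern.sub("", text)


cleaned = strip_punct_keep("This is a state-of-the-art example, don't you think?")
print(cleaned)
```

Changing `KEEP` is then the only edit needed to preserve a different set of characters.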

Method 3: Handling Unicode Punctuation

The string.punctuation constant only covers ASCII punctuation. If you're working with text that might contain other characters like « » (French guillemets), ¿ (Spanish inverted question mark), or … (ellipsis), you'll need a different approach.

The best way to handle this is with the regex library (a more powerful version of Python's built-in re) or by using Unicode properties.
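If installing a third-party package is not an option, the Unicode-properties route can be sketched with the standard library's unicodedata module. This is a minimal illustration, not a drop-in replacement for the regex library; the helper name `remove_unicode_punct` is made up for this example. It drops every character whose Unicode general category starts with "P" (punctuation).

```python
import unicodedata


def remove_unicode_punct(text: str) -> str:
    """Drop every character whose Unicode general category is a punctuation class (P*)."""
    return "".join(
        ch for ch in text
        if not unicodedata.category(ch).startswith("P")
    )


sample = "¡Hola! ¿Qué tal? This is an ellipsis… and French «quotes»."
cleaned = remove_unicode_punct(sample)
print(cleaned)
```

Accented letters such as é are in a letter category, so they are kept.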

Using the regex Library (Recommended)

First, you might need to install it:

pip install regex

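Then, you can use its Unicode property support \p{P} to match any character in the Unicode punctuation categories. Symbols such as $, + and ^ belong to the separate symbol categories (\p{S}), so add \p{S} to the pattern if you want those removed too.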

# pip install regex

import regex

text = "¡Hola! ¿Qué tal? This is an ellipsis… and French «quotes»."

# \p{P} matches any Unicode punctuation character

cleaned_text = regex.sub(r'\p{P}', '', text)

print(f"Original: {text}")

print(f"Cleaned: {cleaned_text}")

Output:

Original: ¡Hola! ¿Qué tal? This is an ellipsis… and French «quotes».

Cleaned: Hola Qué tal This is an ellipsis and French quotes

Method 4: Using NLP Libraries (For Advanced Tasks)

Libraries like NLTK and spaCy are designed for natural language processing. They don't just "remove" punctuation; they tokenize text, which means they split it into words and punctuation, allowing you to easily filter out the punctuation tokens.

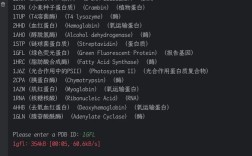

Example with NLTK

First, install NLTK:

pip install nltk

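Then, download the tokenizer data (recent NLTK releases use the 'punkt_tab' resource instead of 'punkt'; if word_tokenize raises a LookupError, download the resource named in the error message):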

import nltk

nltk.download('punkt')

Now you can use it:

import nltk

text = "Hello, World! This is a test."

# Tokenize the text into words and punctuation

tokens = nltk.word_tokenize(text)

# Filter out tokens that are not alphabetic (this removes punctuation and numbers)

filtered_words = [word for word in tokens if word.isalpha()]

print(f"Original: {text}")

print(f"Tokens: {tokens}")

print(f"Filtered: {filtered_words}")

Output:

Original: Hello, World! This is a test.

Tokens: ['Hello', ',', 'World', '!', 'This', 'is', 'a', 'test', '.']

Filtered: ['Hello', 'World', 'This', 'is', 'a', 'test']

This approach is useful because the tokenizer separates punctuation from words for you, so each token can be kept or dropped as a unit.
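One thing to watch for is that isalpha() also discards numbers and hyphenated words. The sketch below compares it with a filter that drops only tokens made up entirely of punctuation. The token list is hand-written for illustration (it is not actual NLTK output), so the example runs without NLTK installed.

```python
import string

# Hypothetical token list, written by hand for this example
tokens = ["The", "state-of-the-art", "model", "costs", "42", "dollars", "...", "!"]

# Keeps purely alphabetic tokens only: numbers and hyphenated words are lost
alpha_only = [tok for tok in tokens if tok.isalpha()]

# Drops a token only when every character in it is ASCII punctuation
no_punct = [
    tok for tok in tokens
    if not all(ch in string.punctuation for ch in tok)
]

print(alpha_only)
print(no_punct)
```

Which filter is appropriate depends on whether numbers and hyphenated terms carry meaning in your text.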

Which Method Should You Choose?

- For a quick and simple script: Use string.punctuation with str.translate(). It's fast, easy, and requires no extra libraries.
- For complex cleaning rules: Use Regular Expressions (re or regex). It gives you the precision to keep or remove specific characters.
- For serious NLP tasks: Use NLTK or spaCy. They provide context-aware tokenization and are the standard in the field.